How Do Machines Learn?

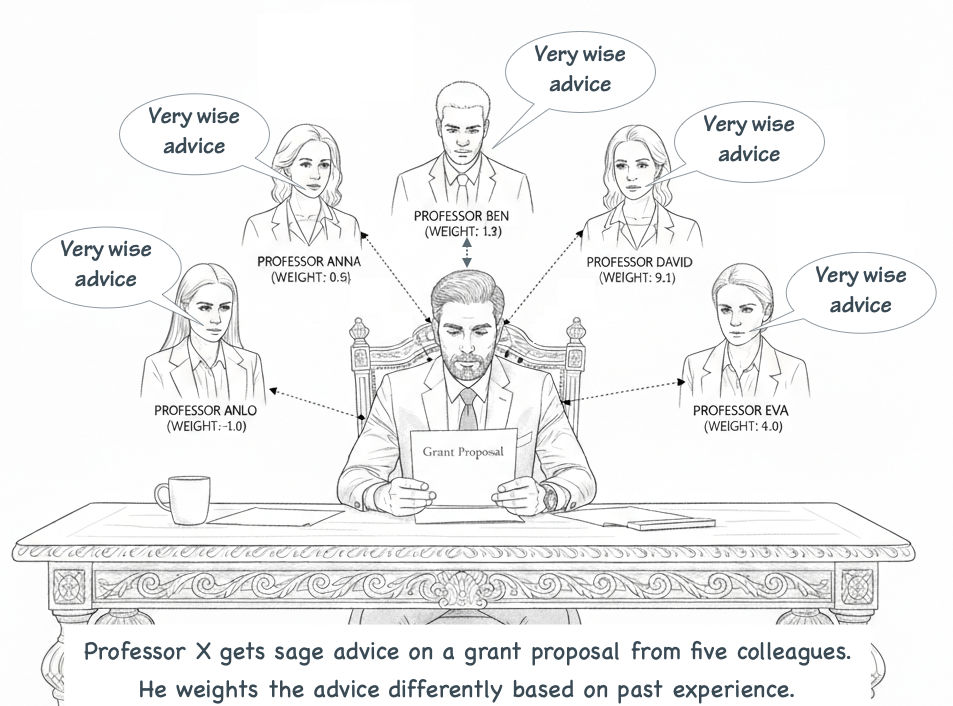

As our acknowledgements always say, a researcher depends on colleagues. I share my draft grant proposal with trusted colleagues. They offer advice. I value the advice of some colleagues more than others.

The grant I submit is a sort of weighted average of the advice I got.
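To make the "weighted average" concrete, here is a minimal sketch. It assumes, purely for illustration, that each colleague's advice can be boiled down to a number on a 0-1 scale and that the weights are how much I trust each person. The names and numbers are hypothetical.

```python
def weighted_average(advice, weights):
    """Combine each colleague's (numeric) advice, weighted by how much I trust them."""
    total_weight = sum(weights.values())
    return sum(weights[name] * advice[name] for name in advice) / total_weight


# Hypothetical example: advice scored 0-1; Eva's opinion counts double.
advice = {"Eva": 0.9, "Ben": 0.4, "Smith": 0.6}
weights = {"Eva": 2.0, "Ben": 1.0, "Smith": 1.0}
print(weighted_average(advice, weights))
```

With these numbers the result is (2 × 0.9 + 0.4 + 0.6) / 4 = 0.7. Eva's view pulls the combined score toward her own because her weight is largest.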

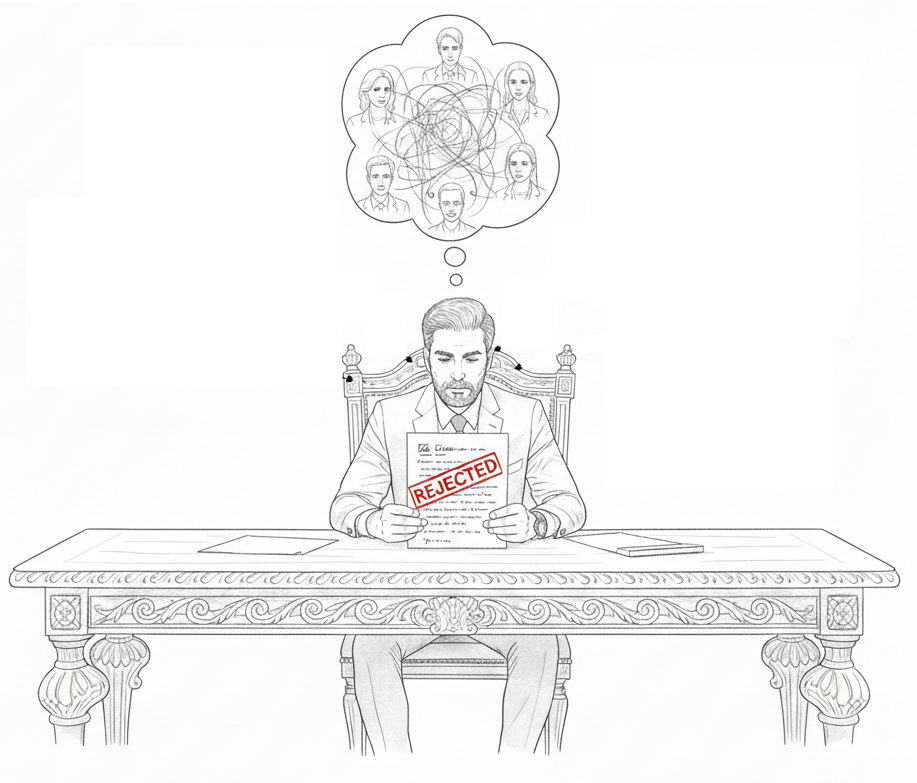

The Autopsy of Loss. When the grant is rejected, I read the referees' evaluations and scores and think about the advice I got. I ask myself: How might the score have been different if I had taken Eva's advice a bit more to heart? Or if I had relied less on Ben's suggestions? I intuitively estimate how much the outcome might have changed with small changes in how I weighted each colleague's advice.

And I make a mental note to adjust my weights next time.
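The "intuitive computation" in this autopsy can be made explicit with a finite-difference estimate. You nudge one colleague's weight by a tiny amount, recompute the error, and see how far it moved. The sketch below assumes, for illustration only, three things. First, advice can be scored as a number. Second, the predicted score is a plain weighted sum of that advice. Third, the error is the squared distance from the referees' score. The functions `predict`, `loss`, and `sensitivities` and all the numbers are hypothetical.

```python
def predict(advice, weights):
    # Predicted score: weighted sum of each colleague's (numeric) advice.
    return sum(weights[name] * advice[name] for name in advice)


def loss(advice, weights, actual):
    # Squared error between the predicted score and the referees' actual score.
    return (predict(advice, weights) - actual) ** 2


def sensitivities(advice, weights, actual, h=1e-6):
    """Estimate how much the loss changes per tiny nudge to each colleague's weight."""
    base = loss(advice, weights, actual)
    result = {}
    for name in weights:
        nudged = dict(weights)  # copy so only one weight changes at a time
        nudged[name] += h
        result[name] = (loss(advice, nudged, actual) - base) / h
    return result


# Hypothetical numbers on a 0-1 scale.
advice = {"Eva": 0.9, "Ben": 0.4}
weights = {"Eva": 0.5, "Ben": 0.5}
actual = 0.3  # the referees scored the proposal lower than predicted

for name, slope in sensitivities(advice, weights, actual).items():
    direction = "less" if slope > 0 else "more"
    print(f"{name}: slope {slope:.3f}, so lean on this advice {direction} next time")
```

Here the prediction is 0.65 against an actual 0.3. Both slopes come out positive: about 0.63 for Eva and 0.28 for Ben. Leaning a little less on either would have lowered the error, and Eva's weight matters most.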

The Derivative of Blame

- The Concept: Determining who contributed most to the mistake.
- The Scenario: You look back at the committee. You ask: "If I had listened to Professor Smith just a tiny bit less, would our total error have gone down?"
- The Calculus: This is the partial derivative, $\frac{\partial \text{Loss}}{\partial \omega}$. It tells you how sensitive the total error is to that specific person's weight $\omega$.
- The Wisdom of Crowds: You aren't "teaching" your colleagues. You are calculating the "gradient," the full set of these partial derivatives, one per weight. Stepping against the gradient (the direction of steepest improvement) adjusts how the whole group is weighted at once.
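The partial derivatives can also be written out exactly rather than estimated. This is a minimal sketch under stated assumptions: the prediction is a plain weighted sum of numeric advice $x_i$, and the error is $\text{Loss} = \left(\sum_i \omega_i x_i - y\right)^2$. Each partial derivative is then $\frac{\partial \text{Loss}}{\partial \omega_i} = 2(\hat{y} - y)\,x_i$. One round of adjustment is the gradient-descent step $\omega_i \leftarrow \omega_i - \eta \frac{\partial \text{Loss}}{\partial \omega_i}$, with an assumed learning rate $\eta = 0.1$. In the code, `w_i` stands for $\omega_i$. The colleagues and numbers are hypothetical.

```python
def gradient(advice, weights, actual):
    """Partial derivatives of Loss = (sum_i w_i * x_i - y)**2, one per weight.

    Each entry is dLoss/dw_i = 2 * (prediction - y) * x_i.
    """
    error = sum(weights[n] * advice[n] for n in advice) - actual
    return {n: 2 * error * advice[n] for n in advice}


def gradient_step(advice, weights, actual, learning_rate=0.1):
    # Move every weight a small step against its partial derivative:
    # weights whose increase would raise the error get turned down.
    grad = gradient(advice, weights, actual)
    return {n: weights[n] - learning_rate * grad[n] for n in weights}


# Hypothetical colleagues, advice, and referee score on a 0-1 scale.
advice = {"Eva": 0.9, "Ben": 0.4, "Smith": 0.7}
weights = {"Eva": 0.4, "Ben": 0.3, "Smith": 0.3}
actual = 0.3

for _ in range(50):  # 50 rounds of "autopsy and adjust"
    weights = gradient_step(advice, weights, actual)

prediction = sum(weights[n] * advice[n] for n in advice)
print(weights, round(prediction, 4))
```

The prediction settles at the referees' 0.3. Eva's weight falls the most because her advice contributed most to the overshoot, and Ben's falls the least.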

Coding Compliance vs Coding Learning


```python
def evaluate_grant(impact_score, feasibility_score, budget_alignment):
    # The human defines the rules and the logic flow
    if impact_score > 0.8:
        if feasibility_score > 0.7:
            return "Approved"
        elif budget_alignment == "High":
            return "Waitlist"
        else:
            return "Rejected"
    else:
        return "Rejected"

# Logic is rigid; if the world changes, the code must be manually rewritten.
```

```python
from keras import Input, Sequential  # Keras shown here; PyTorch supports the same workflow
from keras.layers import Dense

# The human defines the architecture (the "committee")
model = Sequential([
    Input(shape=(3,)),               # 3 inputs: Impact, Feasibility, Budget
    Dense(50, activation='relu'),    # 50 "colleagues" processing the data
    Dense(20, activation='relu'),    # A sub-committee refining the views
    Dense(1, activation='sigmoid'),  # The final decision (0 to 1)
])

# The human defines the learning process
model.compile(
    optimizer='adam',                # The strategy for adjusting weights
    loss='binary_crossentropy',      # How we measure the "error" or "blame"
    metrics=['accuracy'],
)

# The human provides data, not rules.
# past_grant_data: numeric array of shape (n_grants, 3); outcomes: 0/1 decisions.
model.fit(past_grant_data, outcomes, epochs=100)
```
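Under the hood, `model.compile` and `model.fit` automate the same blame-and-adjust loop. The sketch below strips it down to a single sigmoid "colleague" trained with plain gradient descent in NumPy. That is a deliberate simplification: Keras's `'adam'` optimizer adapts its step sizes, and the model above has hidden layers. The function `train_logistic` is hypothetical. The training data are synthetic: three random scores per grant, labelled funded when impact plus feasibility exceeds 1. They are not real grant outcomes.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_logistic(X, y, learning_rate=0.5, epochs=2000):
    """Fit one sigmoid unit by gradient descent on binary cross-entropy."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        p = sigmoid(X @ w + b)   # predicted probability of funding
        error = p - y            # dLoss/dz for sigmoid + binary cross-entropy
        w -= learning_rate * X.T @ error / len(y)  # average blame per weight
        b -= learning_rate * error.mean()
    return w, b


# Hypothetical data: [impact, feasibility, budget] scores in [0, 1].
rng = np.random.default_rng(0)
X = rng.random((200, 3))
y = (X[:, 0] + X[:, 1] > 1.0).astype(float)

w, b = train_logistic(X, y)
accuracy = ((sigmoid(X @ w + b) > 0.5) == y).mean()
print(w, b, accuracy)
```

Nobody writes the "impact plus feasibility" rule into the code. The learned weights on impact and feasibility come out positive and the bias negative, so the rule emerges from the examples.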

By Dan Ryan
