Amazon SageMaker - Hands-On Demo

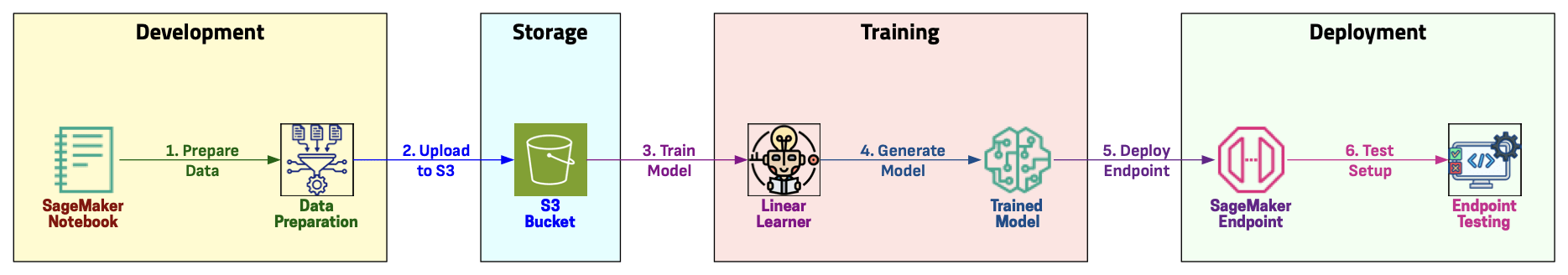

Agenda

In this demo, we will:

1. Create an Amazon S3 Bucket for storing data and model artifacts.

2. Create an Amazon SageMaker Notebook Instance.

3. Prepare data and upload it to S3 using the Notebook Instance.

4. Train a machine learning model using a SageMaker built-in algorithm (Linear Learner).

5. Deploy the trained model to a SageMaker Endpoint.

6. Test the setup by invoking the endpoint.

7. Clean up resources to avoid ongoing charges.

Visual Representation

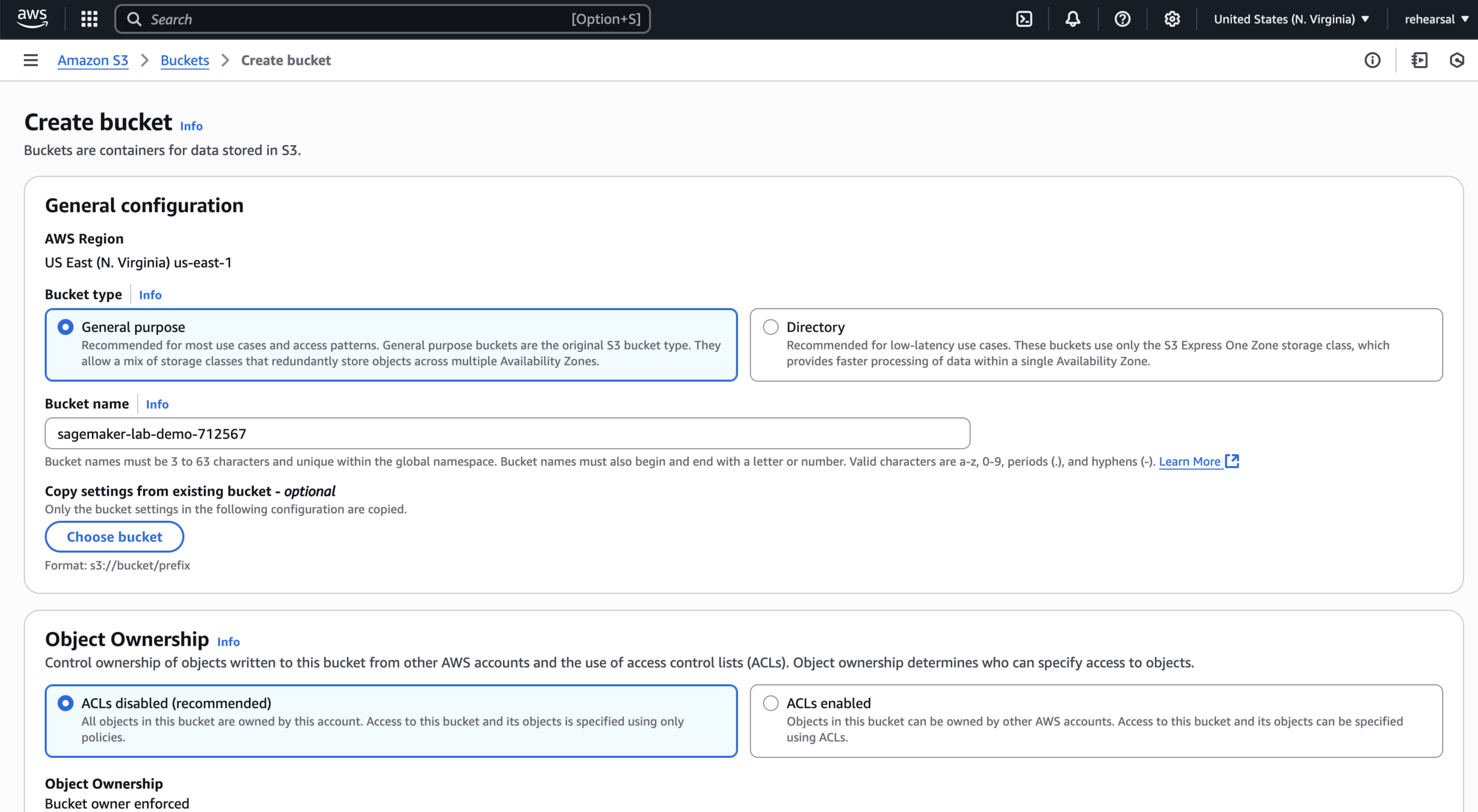

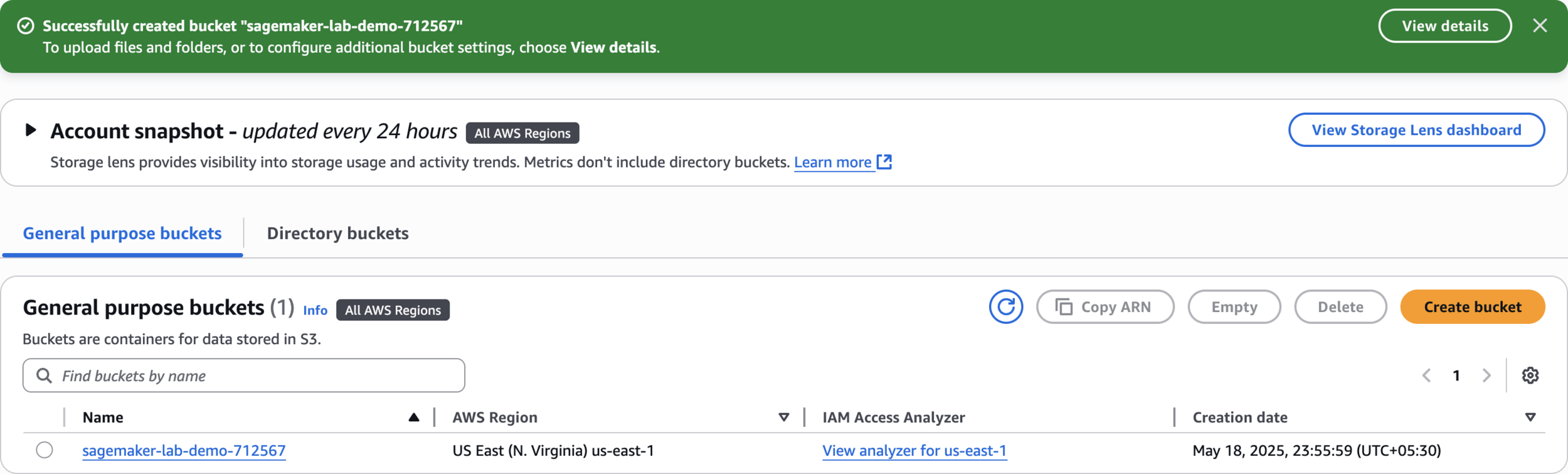

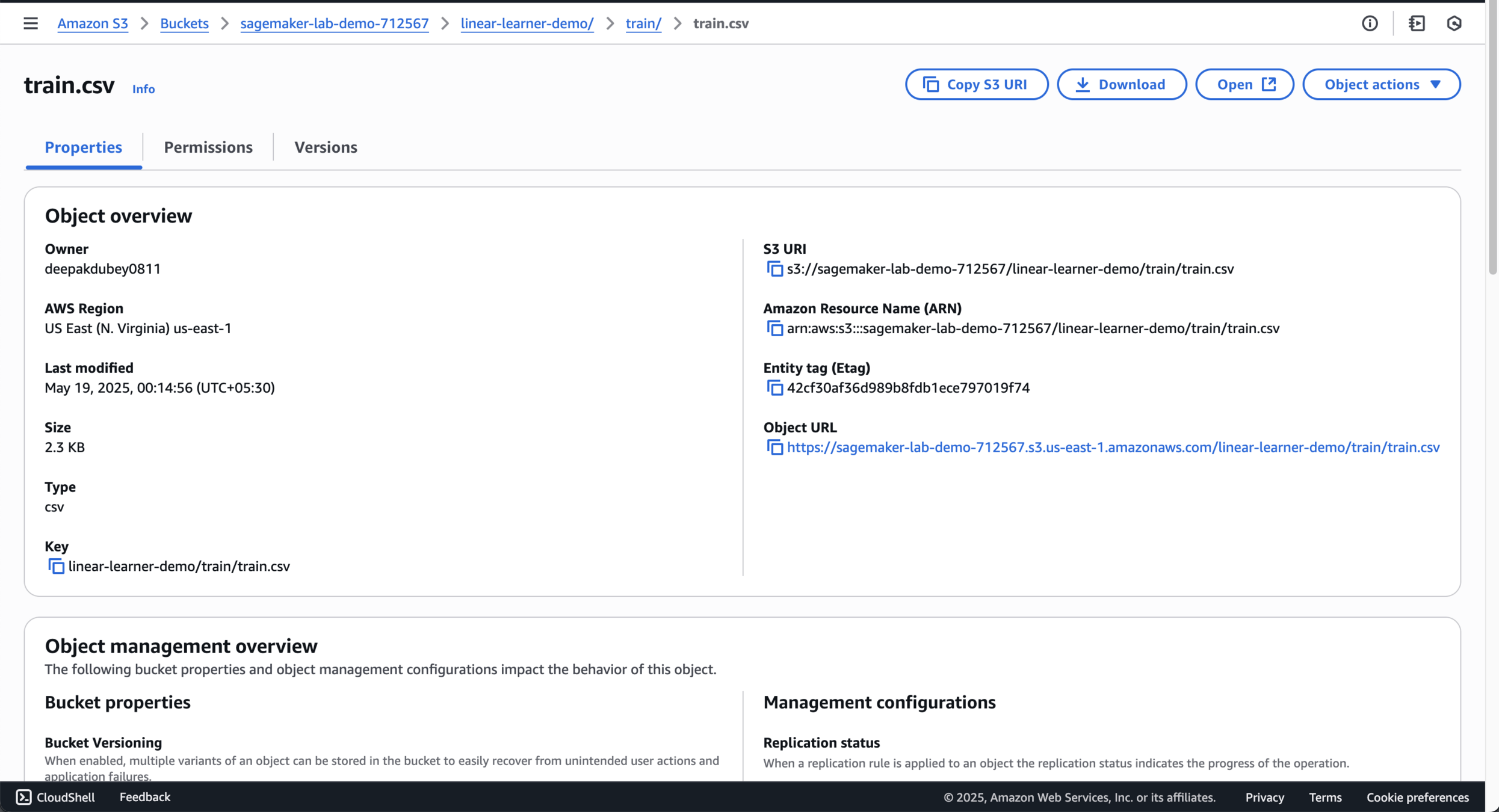

Create an Amazon S3 Bucket for storing data and model artifacts

Create S3 bucket

sagemaker-lab-demo-712567

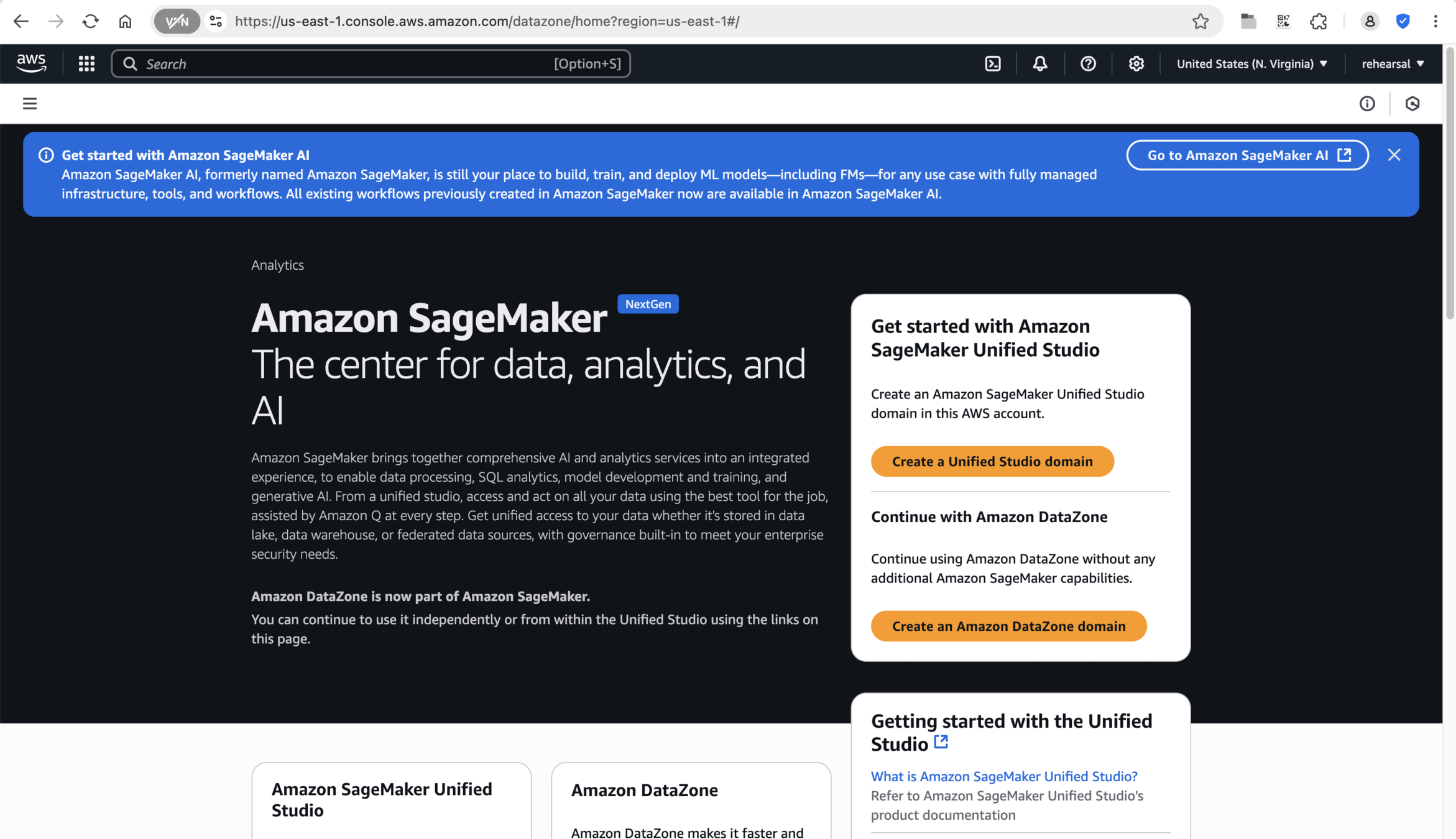

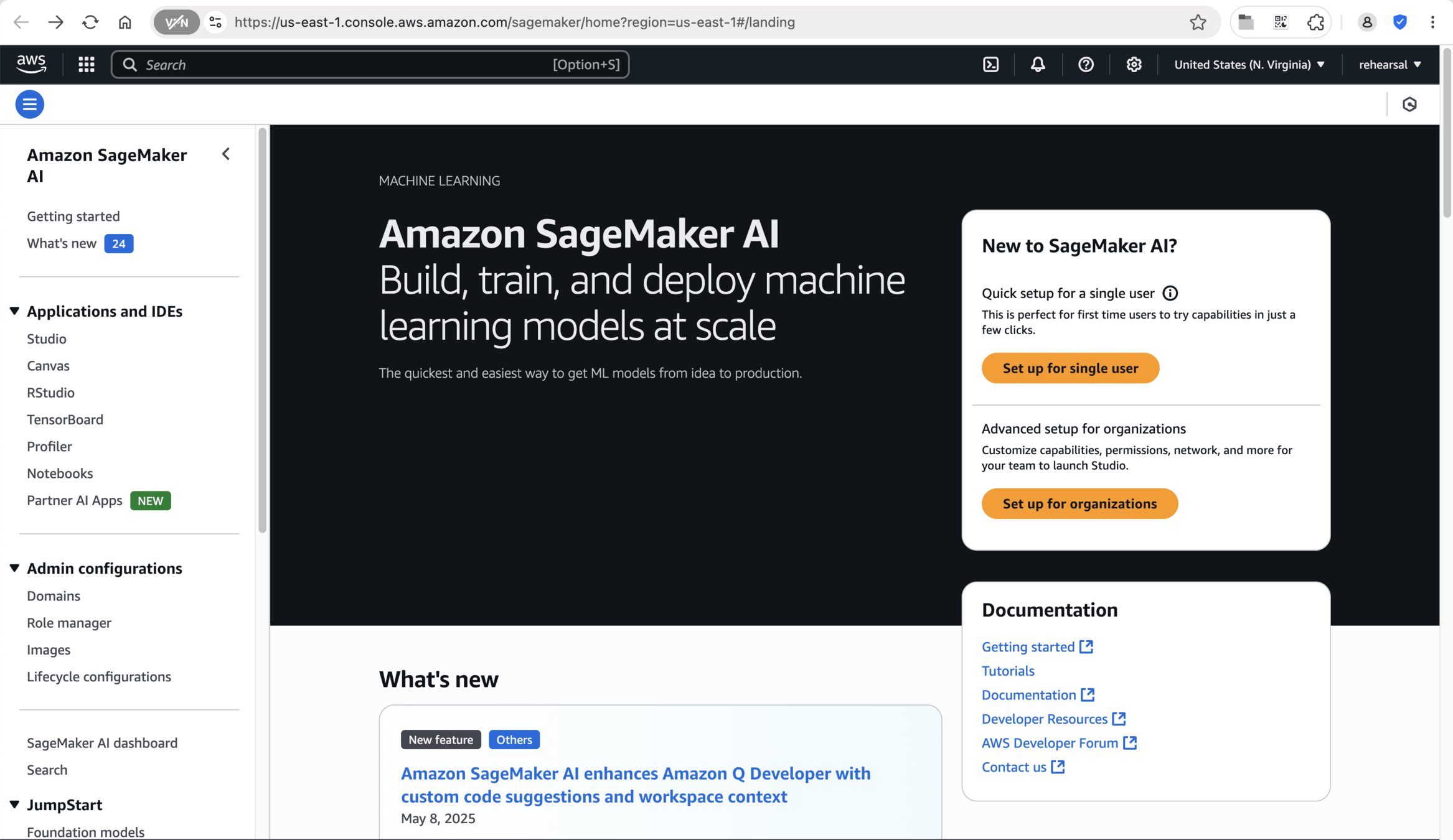

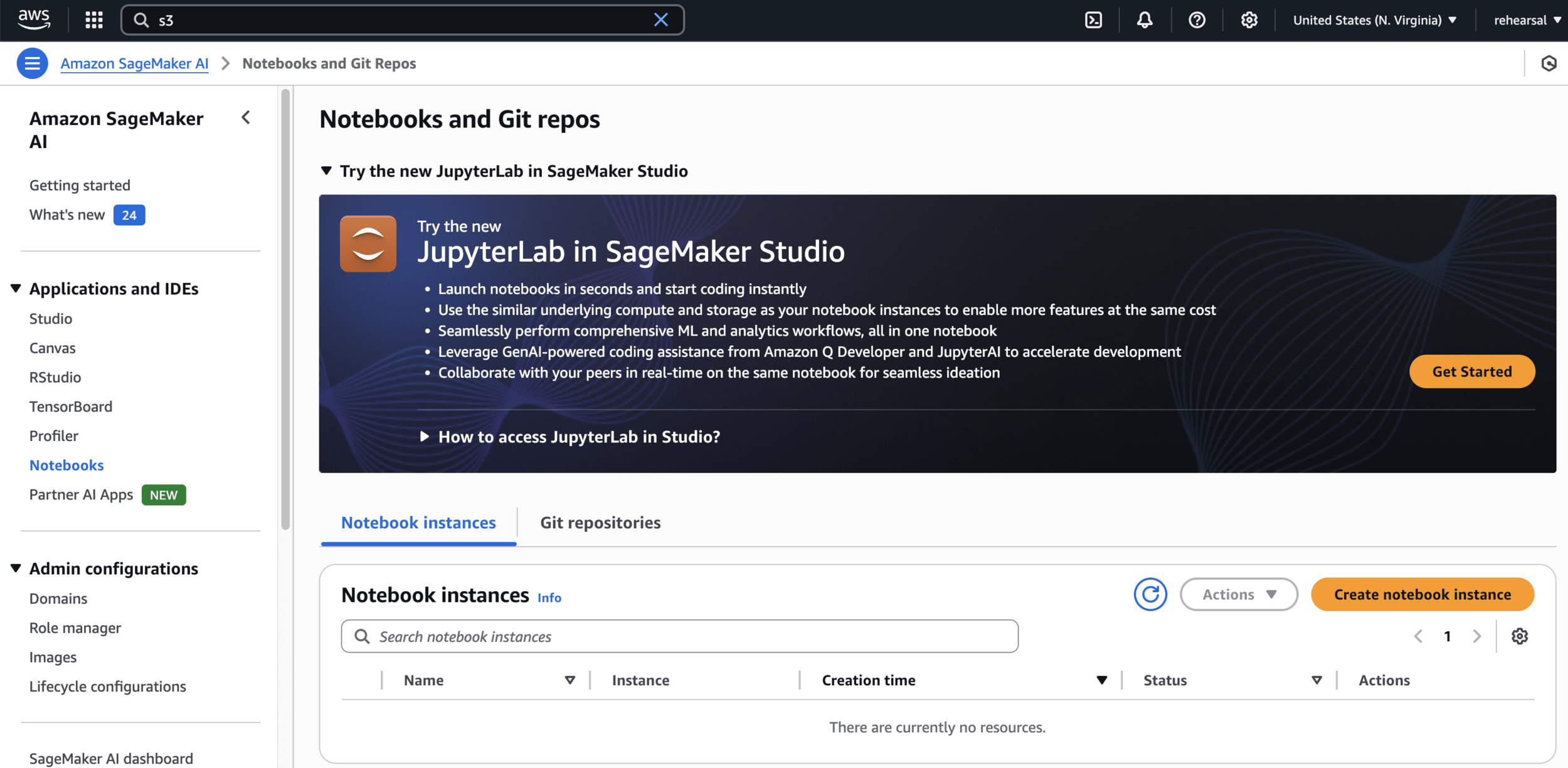

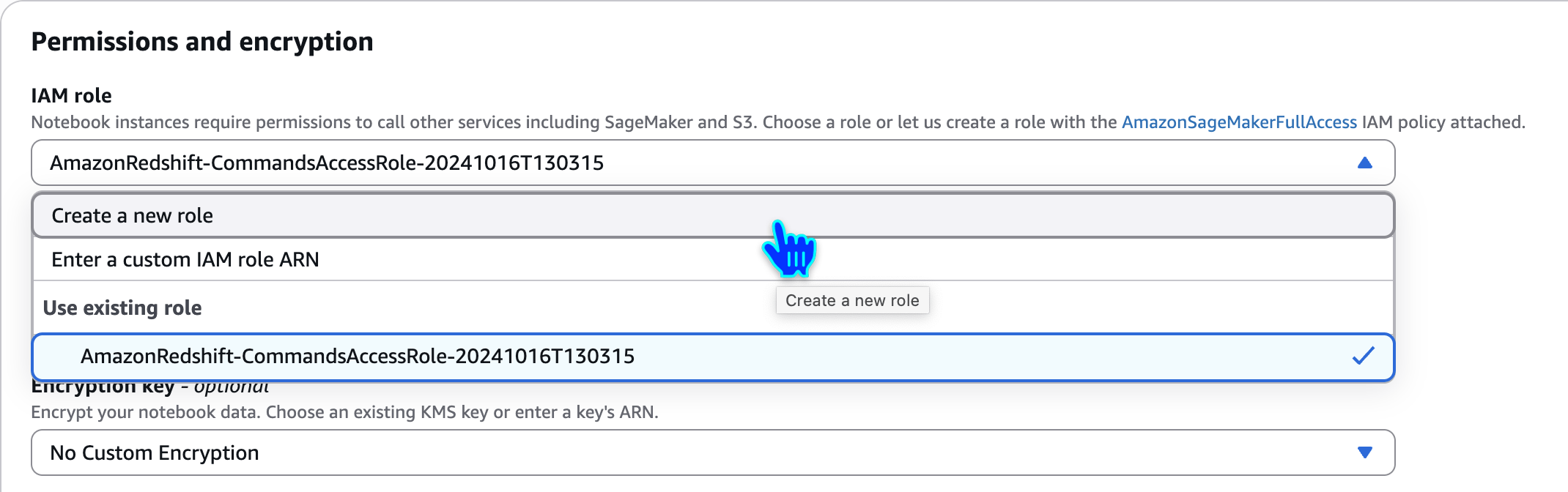

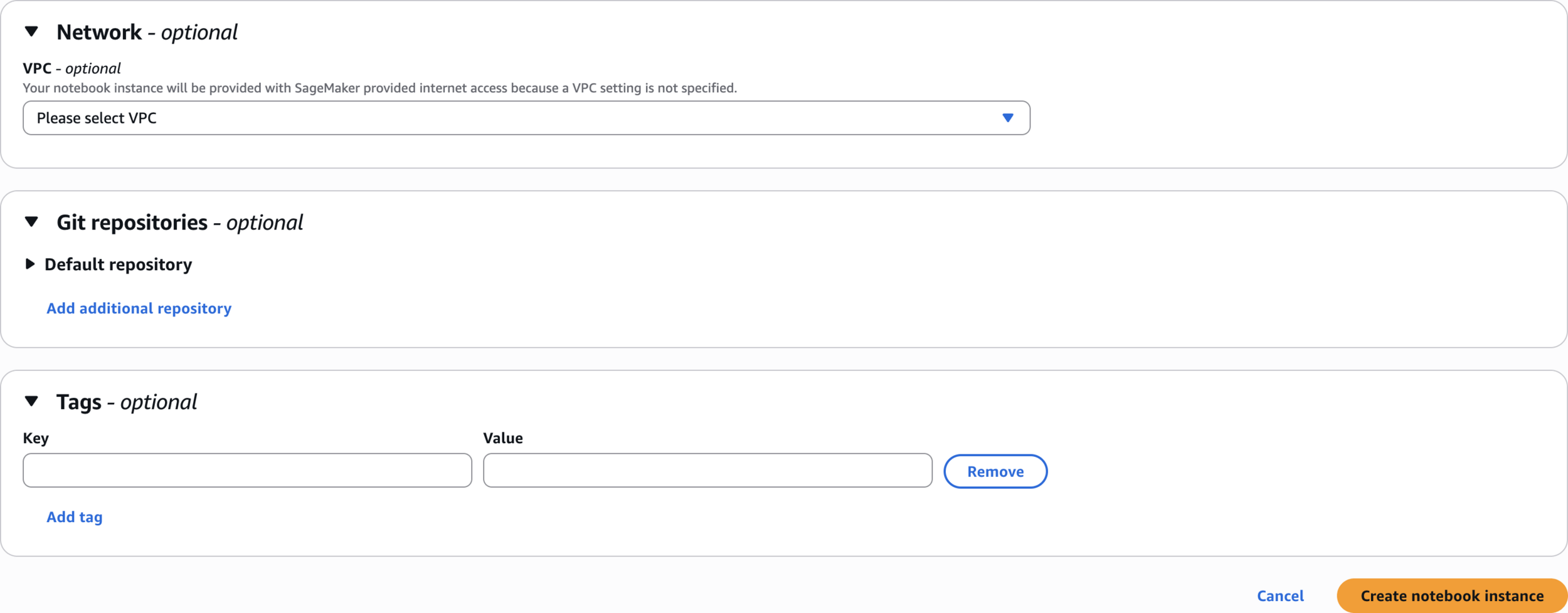

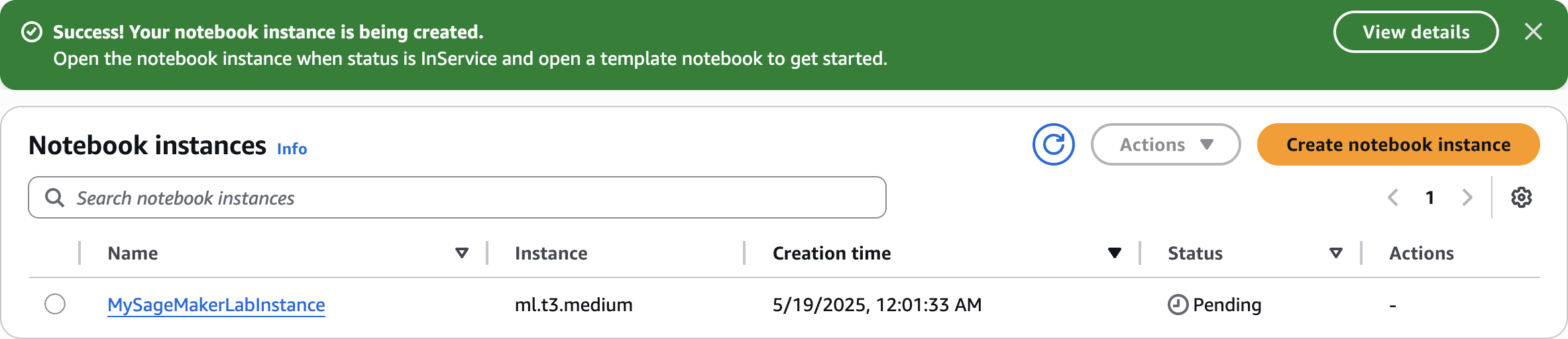

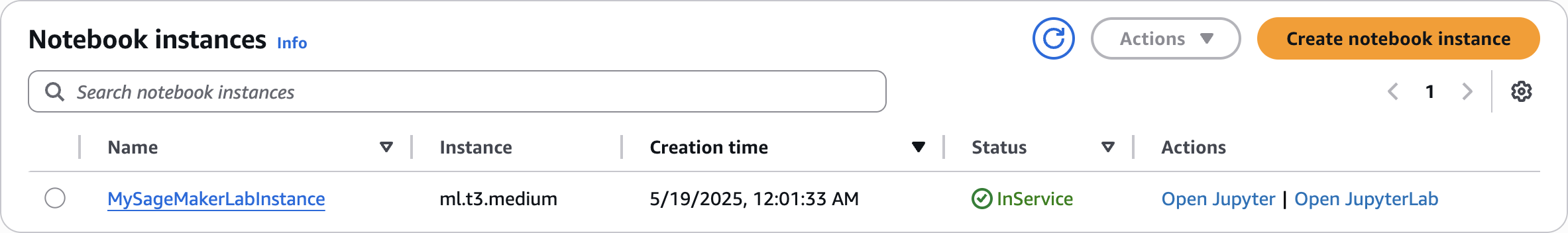
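Bucket names are globally unique and must follow the S3 naming rules, so a name copied from this demo may be rejected. Below is a minimal sketch of a pre-check, assuming only the basic rules for general-purpose buckets (3 to 63 characters; lowercase letters, digits, dots, and hyphens; starting and ending with a letter or digit). The helper name `is_plausible_bucket_name` is hypothetical, and the check is partial: it cannot tell whether the name is already taken.

```python
import re

# Basic shape: 3-63 characters, lowercase letters, digits, dots and hyphens,
# starting and ending with a letter or digit.
_BUCKET_NAME_RE = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")
_IPV4_LIKE_RE = re.compile(r"\d{1,3}(\.\d{1,3}){3}")


def is_plausible_bucket_name(name: str) -> bool:
    """Return True if `name` passes the basic S3 naming checks above."""
    if not _BUCKET_NAME_RE.fullmatch(name):
        return False
    if ".." in name:
        # Two adjacent dots are not allowed.
        return False
    if _IPV4_LIKE_RE.fullmatch(name):
        # Names formatted like an IP address are not allowed.
        return False
    return True


print(is_plausible_bucket_name("sagemaker-lab-demo-712567"))
print(is_plausible_bucket_name("SageMaker_Lab"))
```

Running the check before opening the console saves a round trip when the name contains uppercase letters or underscores.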

Create an Amazon SageMaker Notebook Instance

Notebooks

Create notebook instance

MySageMakerLabInstance

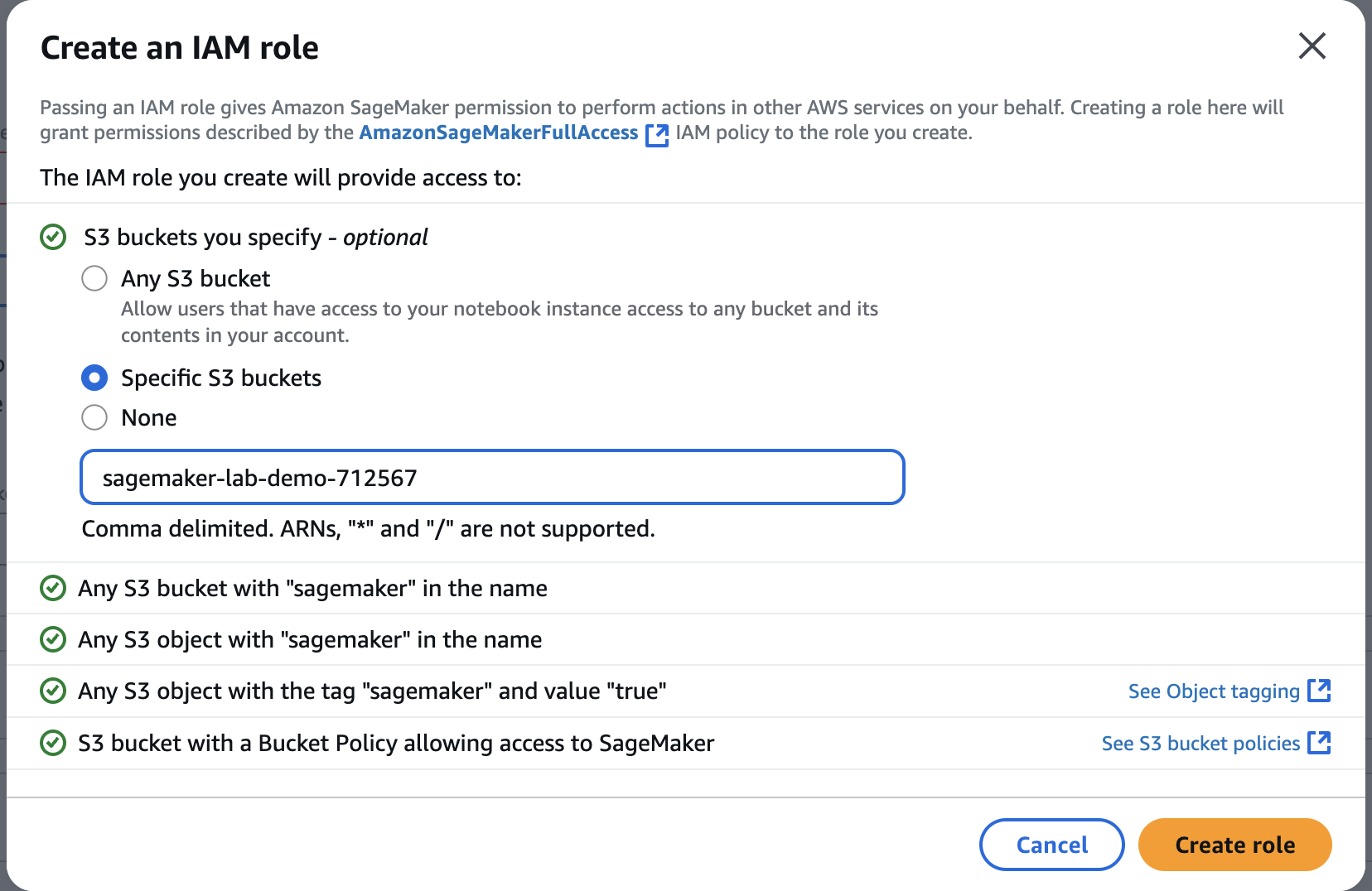

Create a new role

Specific S3 buckets

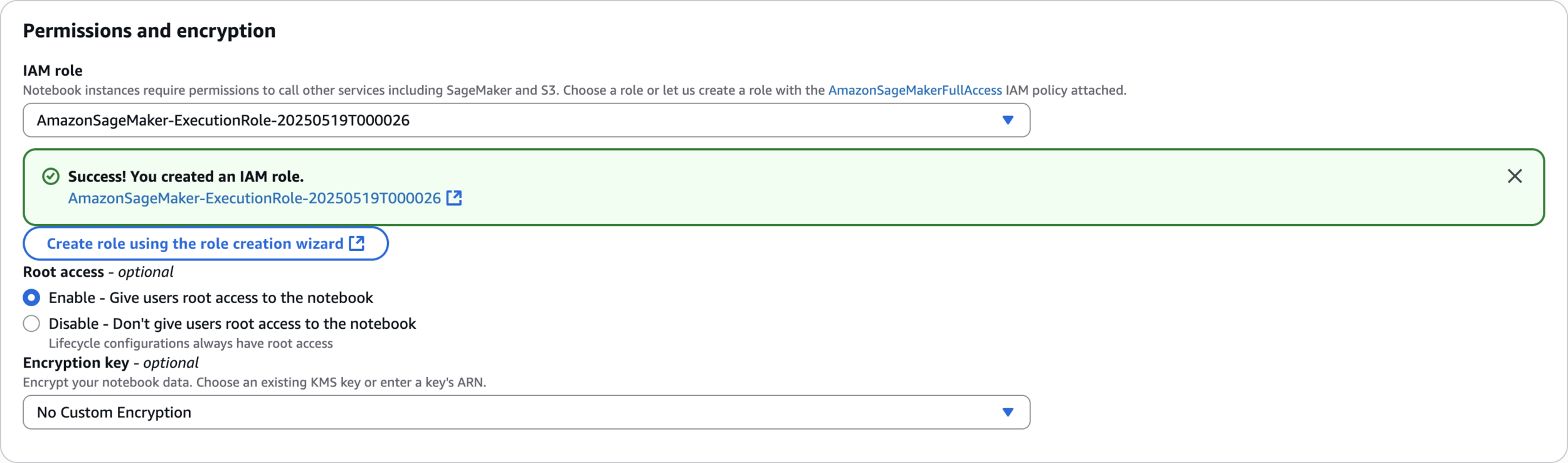

Role created successfully

Pending

InService

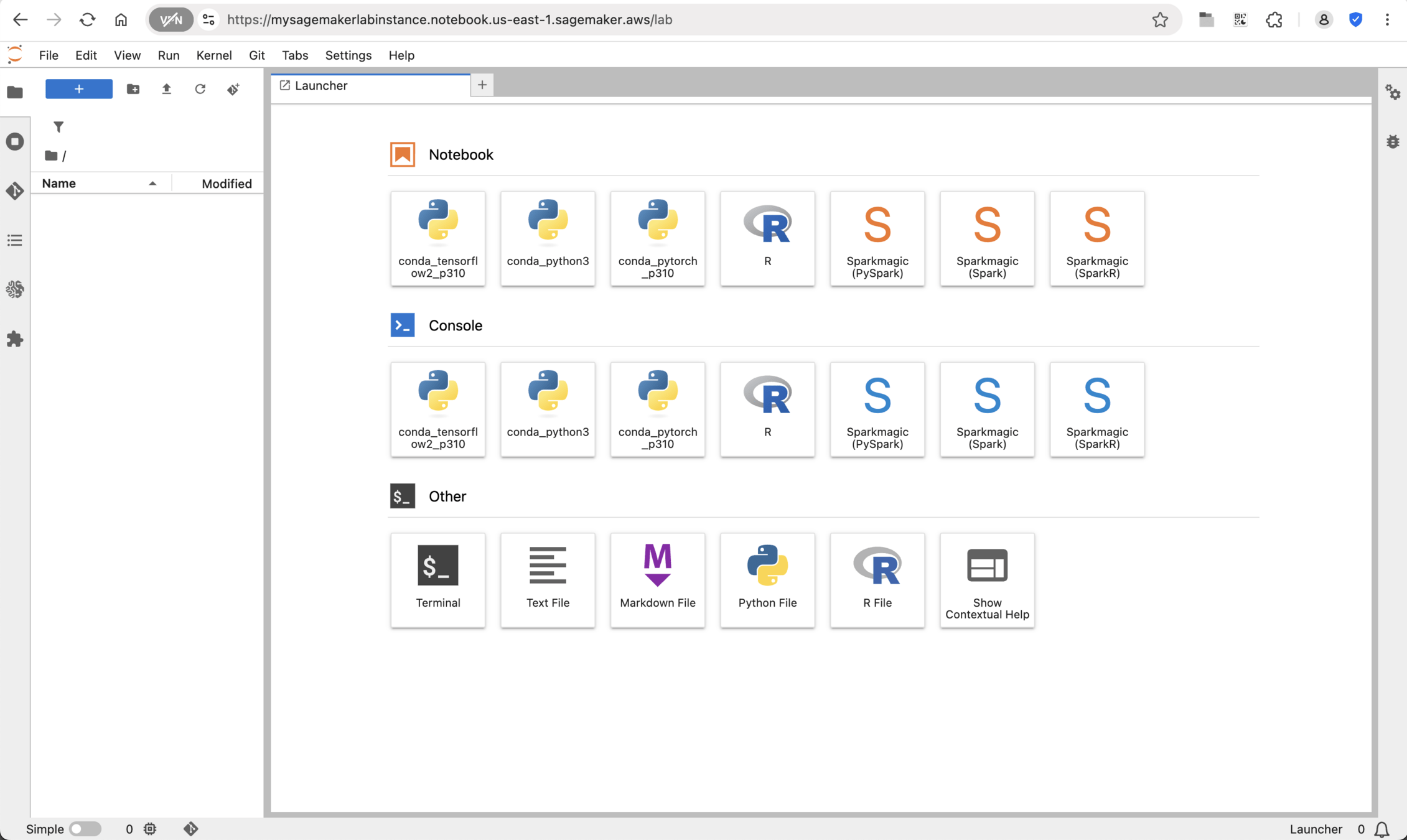
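The Pending to InService transition shown in the console can also be watched from code. The sketch below assumes a boto3 SageMaker client (for example `boto3.client("sagemaker")`) is passed in as `client`. The function name `wait_for_notebook` and its `delay` and `max_checks` parameters are hypothetical; `describe_notebook_instance` and the `NotebookInstanceStatus` field are part of the SageMaker API.

```python
import time


def wait_for_notebook(client, name, target="InService", delay=15.0, max_checks=40):
    """Poll a notebook instance until it reaches `target` or fails.

    `client` is assumed to be a boto3 SageMaker client. It is passed in
    so the function can also be exercised with a stand-in object.
    """
    for _ in range(max_checks):
        response = client.describe_notebook_instance(NotebookInstanceName=name)
        status = response["NotebookInstanceStatus"]
        if status == target:
            return status
        if status == "Failed":
            raise RuntimeError(f"Notebook instance {name} failed to start")
        time.sleep(delay)
    raise TimeoutError(f"{name} did not reach {target} after {max_checks} checks")
```

With real credentials, a call such as `wait_for_notebook(boto3.client("sagemaker"), "MySageMakerLabInstance")` would block until the instance is ready to open.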

Prepare data and upload it to S3 using the Notebook Instance

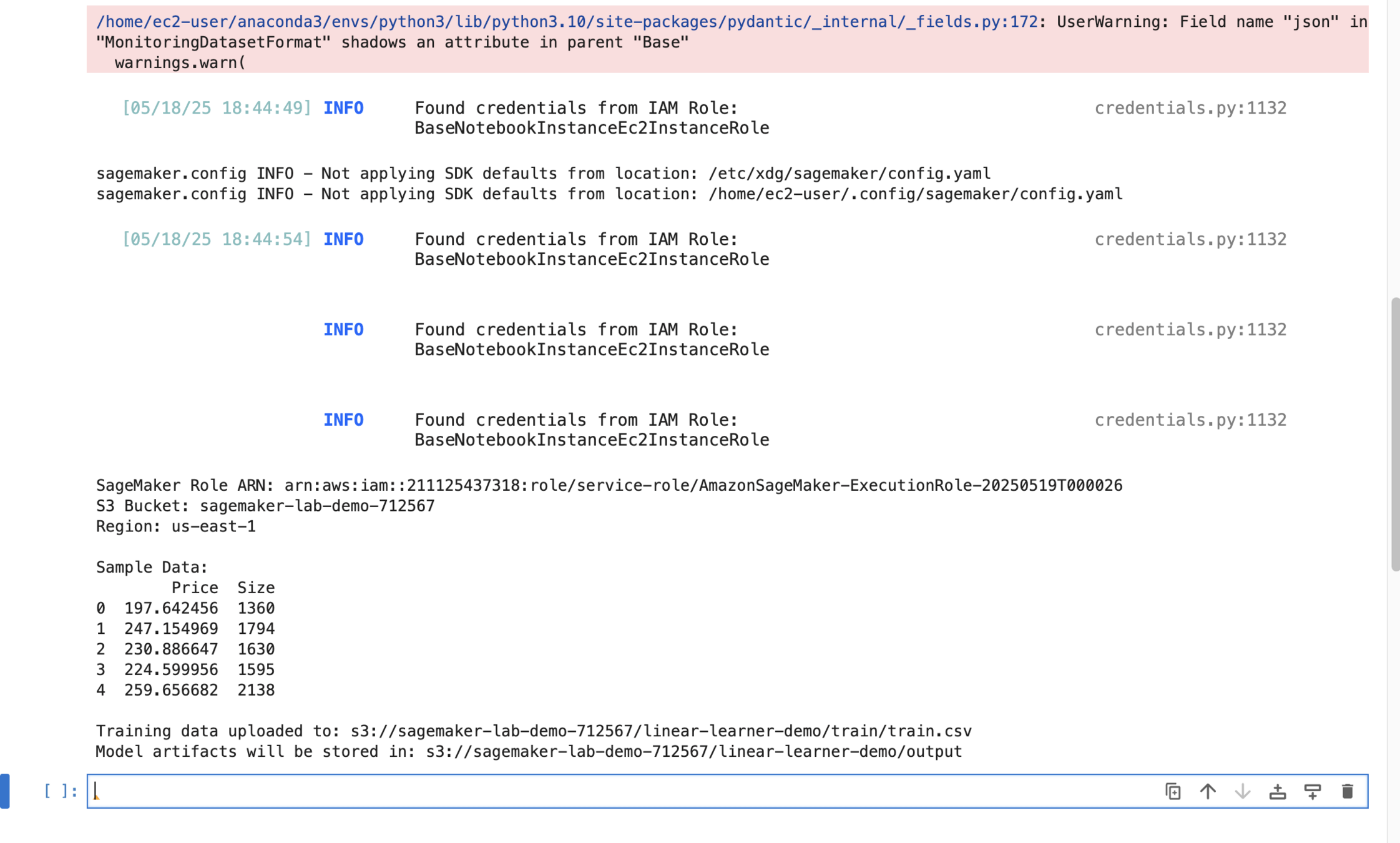

import pandas as pd
import numpy as np
import sagemaker
import boto3
import io

# Get the SageMaker session, execution role, and S3 bucket
sagemaker_session = sagemaker.Session()
role = sagemaker.get_execution_role()
bucket_name = ''  # Replace with your S3 bucket name from Step 1
region = sagemaker_session.boto_region_name
s3_client = boto3.client('s3', region_name=region)

print(f"SageMaker Role ARN: {role}")
print(f"S3 Bucket: {bucket_name}")
print(f"Region: {region}")

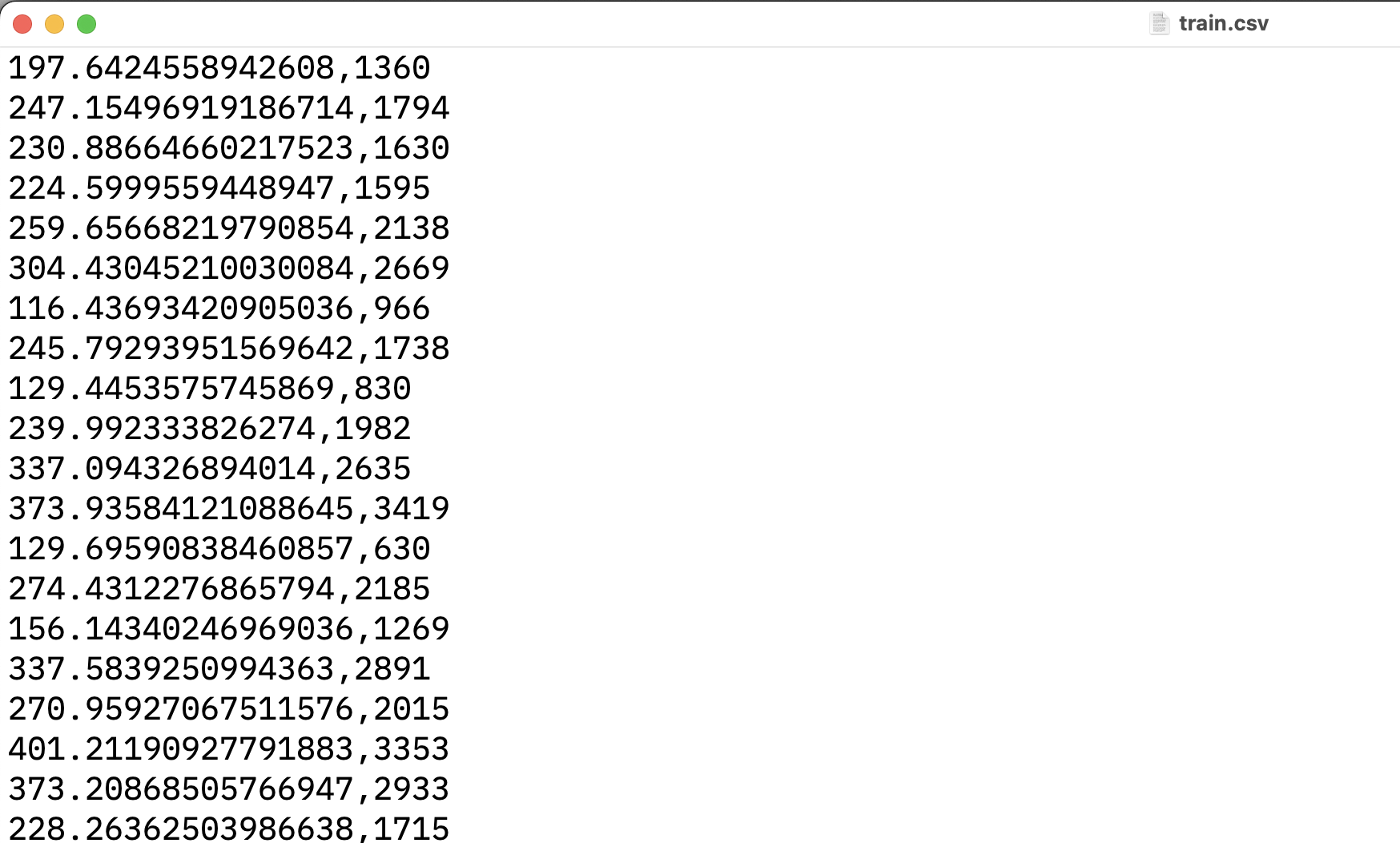

# Generate sample data: Predicting house price based on size
# Feature: Size (in square feet), Target: Price (in $1000s)
np.random.seed(42)  # for reproducibility
num_samples = 100
house_size = np.random.randint(500, 3500, num_samples)  # Size between 500 and 3500 sq ft

# Price = 50 + 0.1 * Size + some noise
# Convert to integer to have whole numbers for price
house_price = (50 + 0.1 * house_size + np.random.normal(0, 20, num_samples)).astype(int)

# Create a Pandas DataFrame
# Ensure 'Price' is the first column for SageMaker Linear Learner CSV input
df = pd.DataFrame({'Price': house_price, 'Size': house_size})

# Display the first few rows
print("\nSample Data (Price as whole numbers):")
print(df.head())

# Convert DataFrame to CSV string
csv_buffer = io.StringIO()
# No header, no index for SageMaker built-in algos like Linear Learner
df.to_csv(csv_buffer, header=False, index=False)
csv_content = csv_buffer.getvalue()

# Define S3 paths
prefix = 'linear-learner-demo'  # A prefix (folder) in your S3 bucket
train_data_s3_path = f's3://{bucket_name}/{prefix}/train/train.csv'

# Upload the CSV data to S3
s3_client.put_object(Bucket=bucket_name, Key=f'{prefix}/train/train.csv', Body=csv_content)
print(f"\nTraining data uploaded to: {train_data_s3_path}")

# Define output path for model artifacts
s3_output_location = f's3://{bucket_name}/{prefix}/output'
print(f"Model artifacts will be stored in: {s3_output_location}")

sagemaker-lab-demo-712567

train.csv

Sample Data

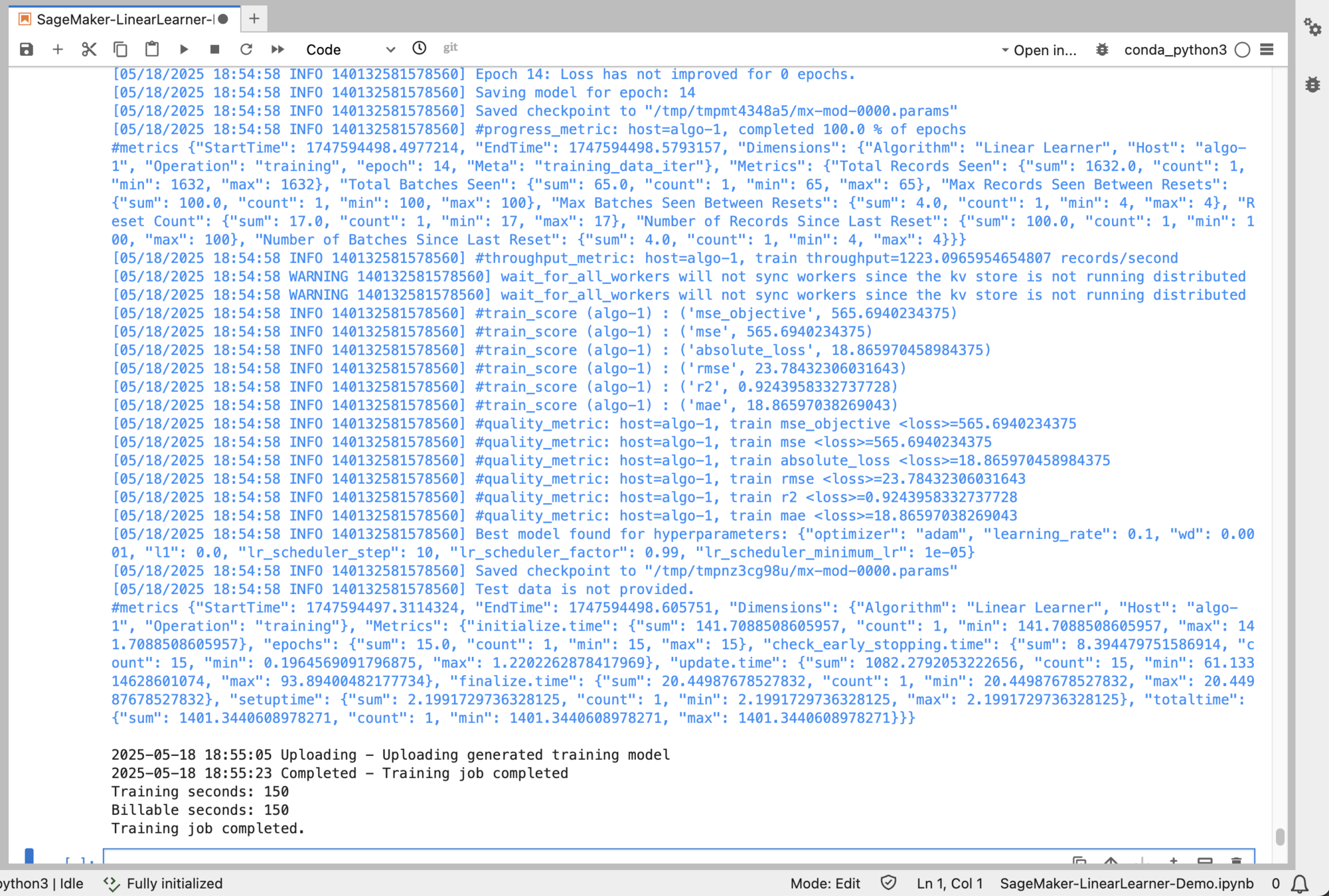
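Because the sample data follows Price = 50 + 0.1 * Size plus noise, a well-trained regressor should end up with a slope near 0.1 and an intercept near 50. The sketch below fits that line with closed-form least squares using only the standard library. It regenerates comparable data with `random` instead of NumPy, so its numbers will not match the notebook's exactly, and the helper `fit_line` is hypothetical.

```python
import random


def fit_line(xs, ys):
    """Closed-form ordinary least squares for y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Same recipe as the notebook: 100 houses, sizes in [500, 3500), noise sd of 20.
rng = random.Random(42)
sizes = [rng.randrange(500, 3500) for _ in range(100)]
prices = [int(50 + 0.1 * s + rng.gauss(0, 20)) for s in sizes]

slope, intercept = fit_line(sizes, prices)
print(f"slope={slope:.3f}, intercept={intercept:.1f}")
```

These values give a baseline to compare against the endpoint's predictions later in the demo.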

Train a Machine Learning Model

# Get the Docker image URI for SageMaker's Linear Learner algorithm
container = sagemaker.image_uris.retrieve(
    framework='linear-learner',
    region=region,
    version='1'  # Use 'latest' or a specific version
)
print(f"Linear Learner container URI: {container}")

# Configure the Linear Learner estimator
linear_estimator = sagemaker.estimator.Estimator(
    image_uri=container,
    role=role,
    instance_count=1,
    instance_type='ml.m5.large',  # Choose a suitable instance type for training
    output_path=s3_output_location,
    sagemaker_session=sagemaker_session,
    # Hyperparameters for Linear Learner
    hyperparameters={
        'feature_dim': 1,  # Number of features (just 'Size' in our case)
        'predictor_type': 'regressor',  # For regression problems
        'mini_batch_size': 32
    }
)

# Define the input data channels
# The content_type 'text/csv;label_size=1' indicates CSV format where the first column is the target label.
train_input = sagemaker.inputs.TrainingInput(
    s3_data=train_data_s3_path,
    distribution='FullyReplicated',
    content_type='text/csv;label_size=1',  # label_size=1 means the first column is the target
    s3_data_type='S3Prefix'
)
data_channels = {'train': train_input}

# Start the training job
print("\nStarting model training...")
linear_estimator.fit(inputs=data_channels, logs='All')  # Set logs to 'All' to see training progress
print("Training job completed.")

Output

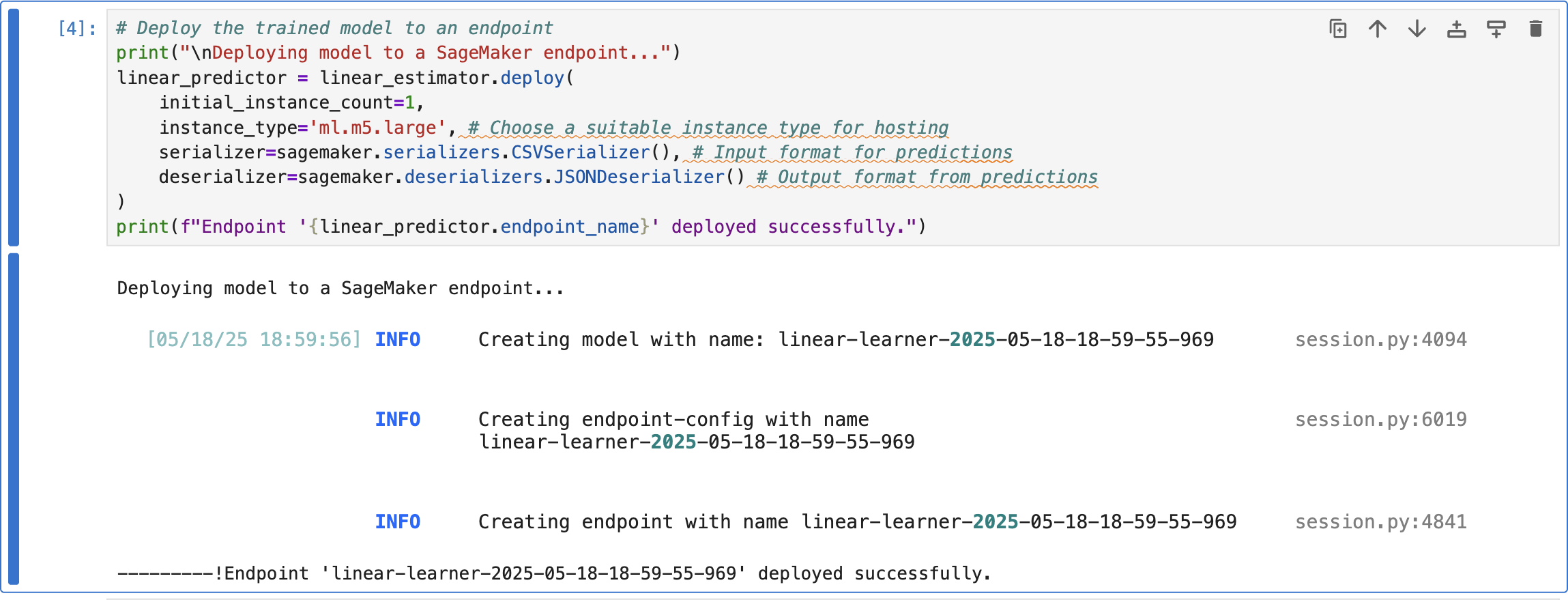
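After training, the model artifact lands under the output path defined earlier. The sketch below builds the location where it would usually be expected, assuming the common layout of output path, then training job name, then `output/model.tar.gz`. The helper name and the job name in the example are hypothetical; in the notebook, `linear_estimator.model_data` reports the actual location.

```python
def expected_model_artifact_uri(output_path: str, training_job_name: str) -> str:
    """Build the S3 URI where a training job's model.tar.gz usually lands.

    Assumes the layout <output_path>/<job name>/output/model.tar.gz.
    """
    return f"{output_path.rstrip('/')}/{training_job_name}/output/model.tar.gz"


# Hypothetical job name, for illustration only.
uri = expected_model_artifact_uri(
    "s3://sagemaker-lab-demo-712567/linear-learner-demo/output",
    "linear-learner-example-job",
)
print(uri)
```

Knowing this path helps when confirming later that the bucket has been emptied.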

Deploy the Trained Model to a SageMaker Endpoint

# Deploy the trained model to an endpoint
print("\nDeploying model to a SageMaker endpoint...")
linear_predictor = linear_estimator.deploy(
    initial_instance_count=1,
    instance_type='ml.m5.large',  # Choose a suitable instance type for hosting
    serializer=sagemaker.serializers.CSVSerializer(),  # Input format for predictions
    deserializer=sagemaker.deserializers.JSONDeserializer()  # Output format from predictions
)
print(f"Endpoint '{linear_predictor.endpoint_name}' deployed successfully.")

Output

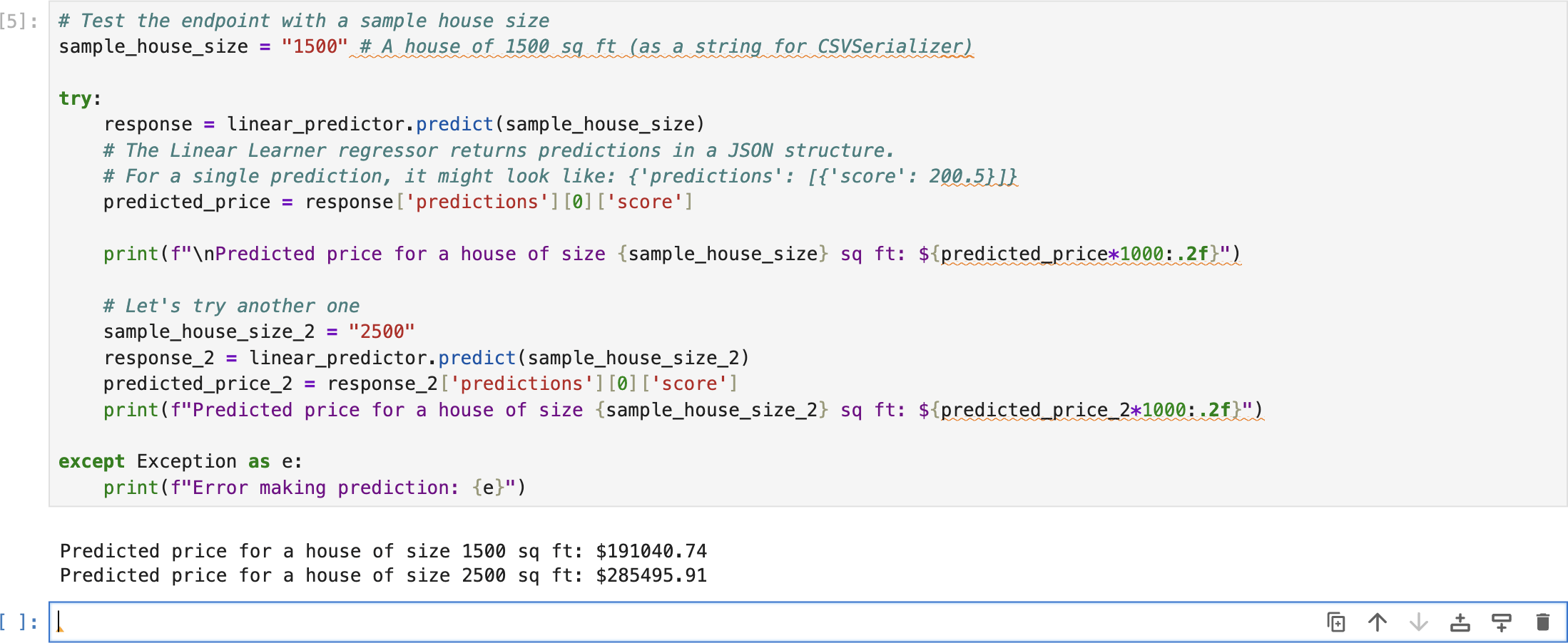

Test the Setup

# Test the endpoint with a sample house size
sample_house_size = "1500"  # A house of 1500 sq ft (as a string for CSVSerializer)

try:
    response = linear_predictor.predict(sample_house_size)
    # The Linear Learner regressor returns predictions in a JSON
    # structure. For a single prediction, it might look like:
    # {'predictions': [{'score': 200.5}]}
    predicted_price = response['predictions'][0]['score']
    print(f"\nPredicted price for a house of size "
          f"{sample_house_size} sq ft: ${predicted_price*1000:.2f}")

    # Let's try another one
    sample_house_size_2 = "2500"
    response_2 = linear_predictor.predict(sample_house_size_2)
    predicted_price_2 = response_2['predictions'][0]['score']
    print(f"Predicted price for a house of size "
          f"{sample_house_size_2} sq ft: ${predicted_price_2*1000:.2f}")
except Exception as e:
    print(f"Error making prediction: {e}")
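The two predictions can also be handled as one batch. The sketch below shows one way to build a multi-row CSV payload and read every score out of a response, assuming the response has the `{'predictions': [{'score': ...}]}` shape described in the comments above. The helper names and the sample response values are hypothetical and do not come from a real endpoint.

```python
def build_csv_payload(sizes):
    """Join feature values into a CSV body, one record per line."""
    return "\n".join(str(size) for size in sizes)


def extract_scores(response):
    """Pull the numeric scores out of a Linear Learner regressor response."""
    return [item["score"] for item in response["predictions"]]


# Hypothetical response, shaped like the structure described in the notebook.
sample_response = {"predictions": [{"score": 200.5}, {"score": 300.25}]}

house_sizes = [1500, 2500]
payload = build_csv_payload(house_sizes)

for size, score in zip(house_sizes, extract_scores(sample_response)):
    # Scores are in $1000s, matching the training target.
    print(f"{size} sq ft -> ${score * 1000:,.2f}")
```

In the notebook, `payload` would be passed to `linear_predictor.predict(...)` in place of a single size string.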

Output

Clean Up

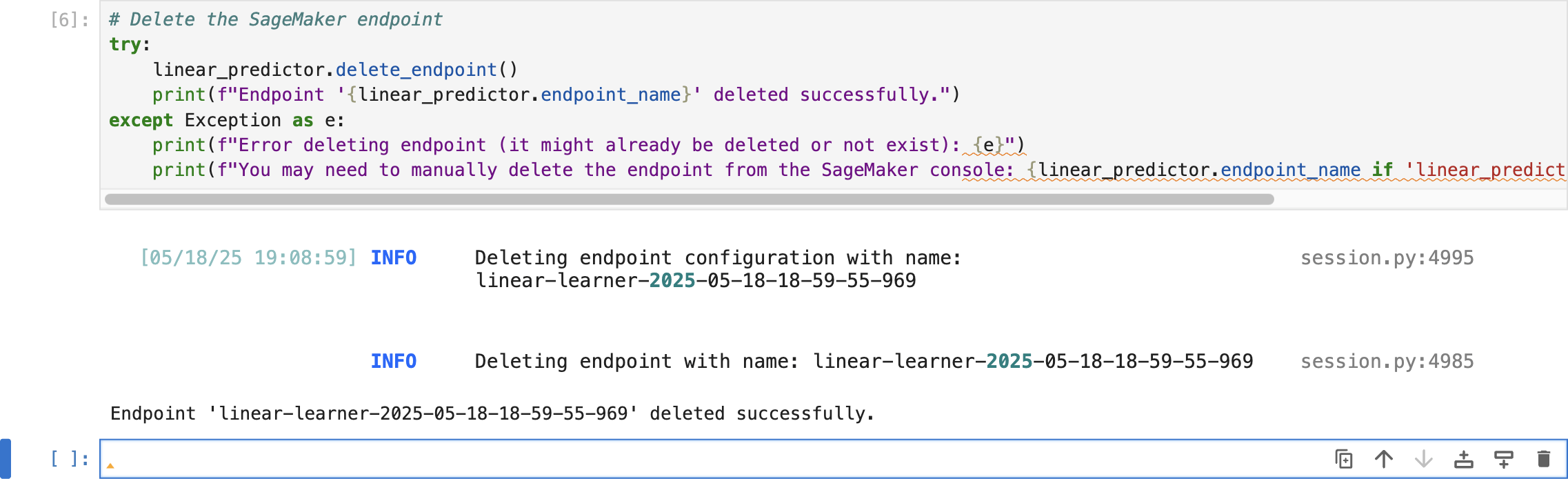

Delete the SageMaker Endpoint

# Delete the SageMaker endpoint
try:
    linear_predictor.delete_endpoint()
    print(f"Endpoint '{linear_predictor.endpoint_name}'"
          f" deleted successfully.")
except Exception as e:
    print(f"Error deleting endpoint (it might already be "
          f"deleted or not exist): {e}")
    endpoint_name_to_log = (
        linear_predictor.endpoint_name
        if 'linear_predictor' in locals()
        else 'Unknown'
    )
    print(f"You may need to manually delete the endpoint "
          f"from the SageMaker console: {endpoint_name_to_log}")

Output

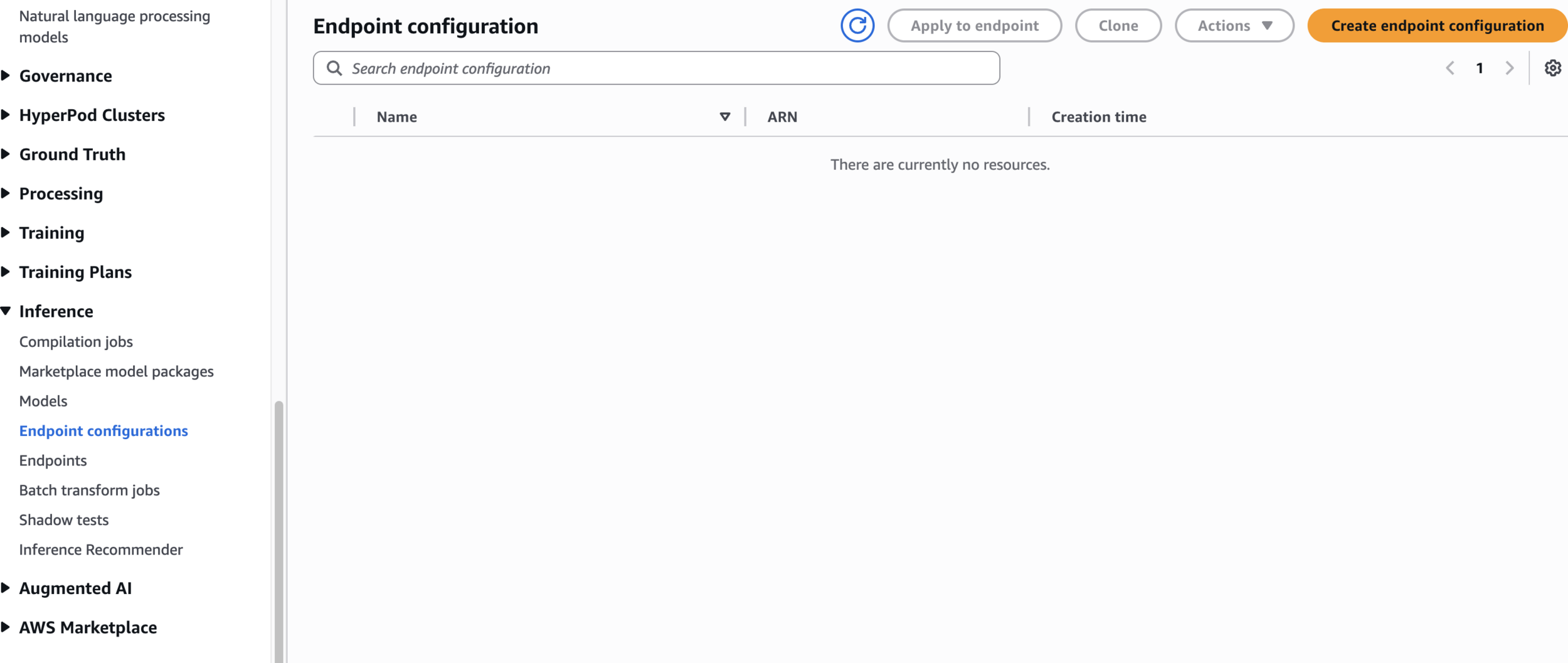

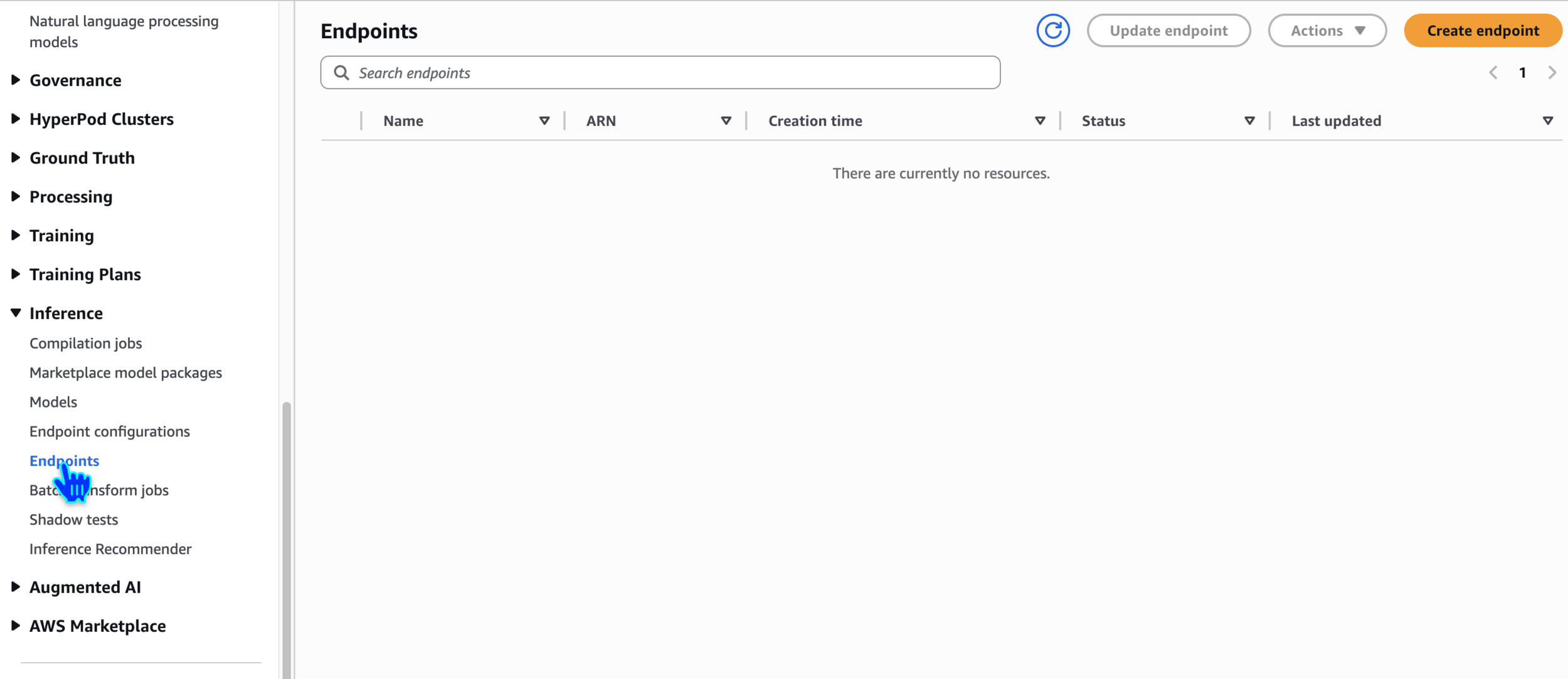

Verify


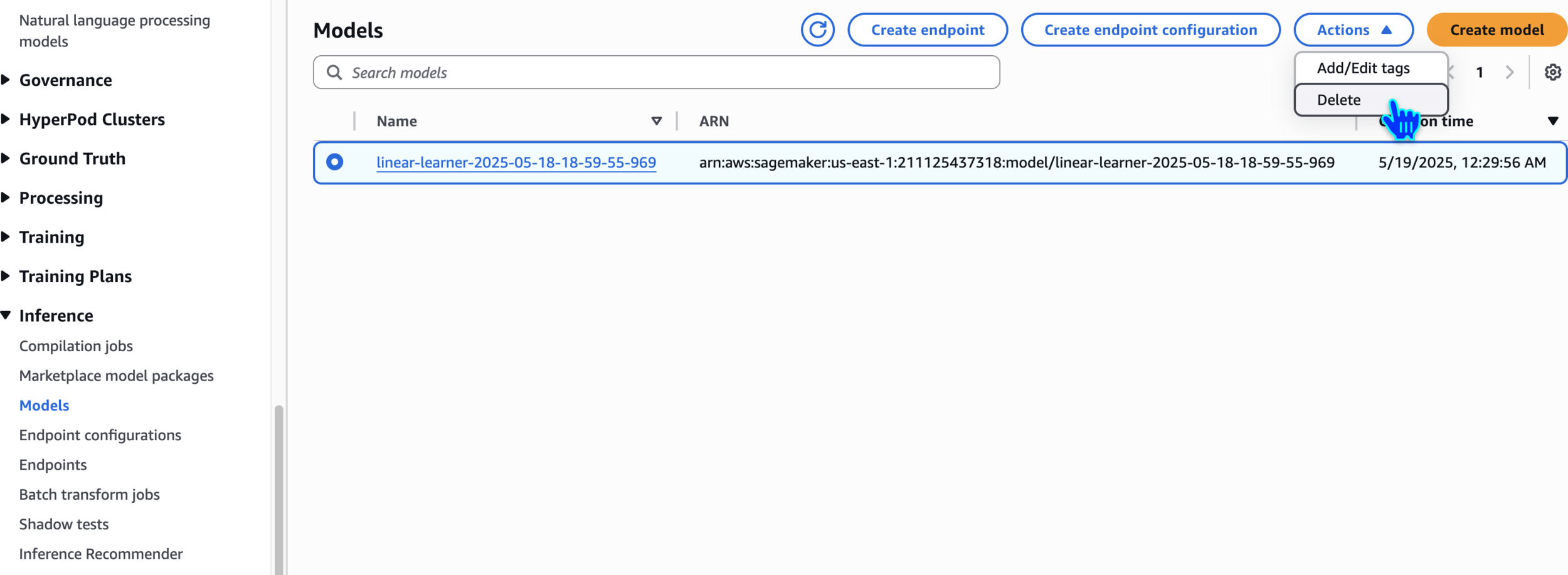

Delete the SageMaker Model

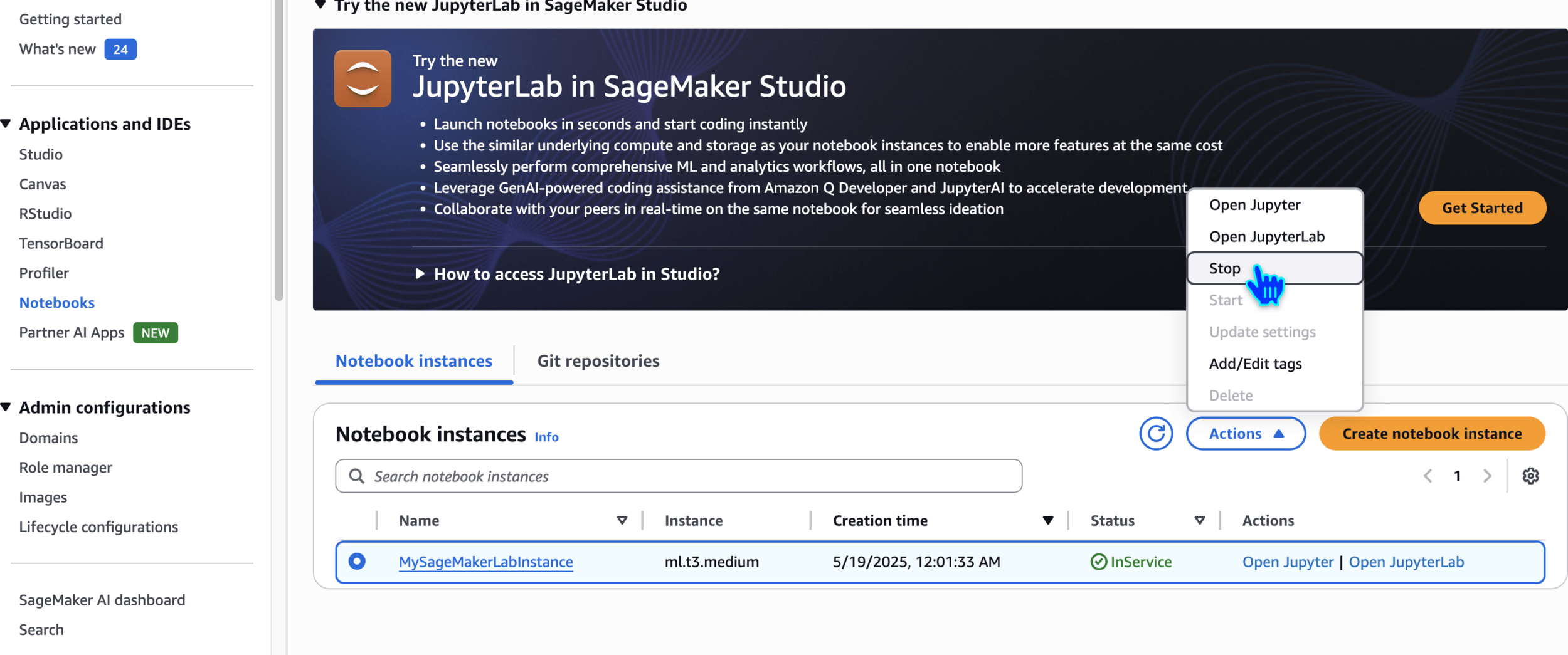

Stop the SageMaker Notebook Instance

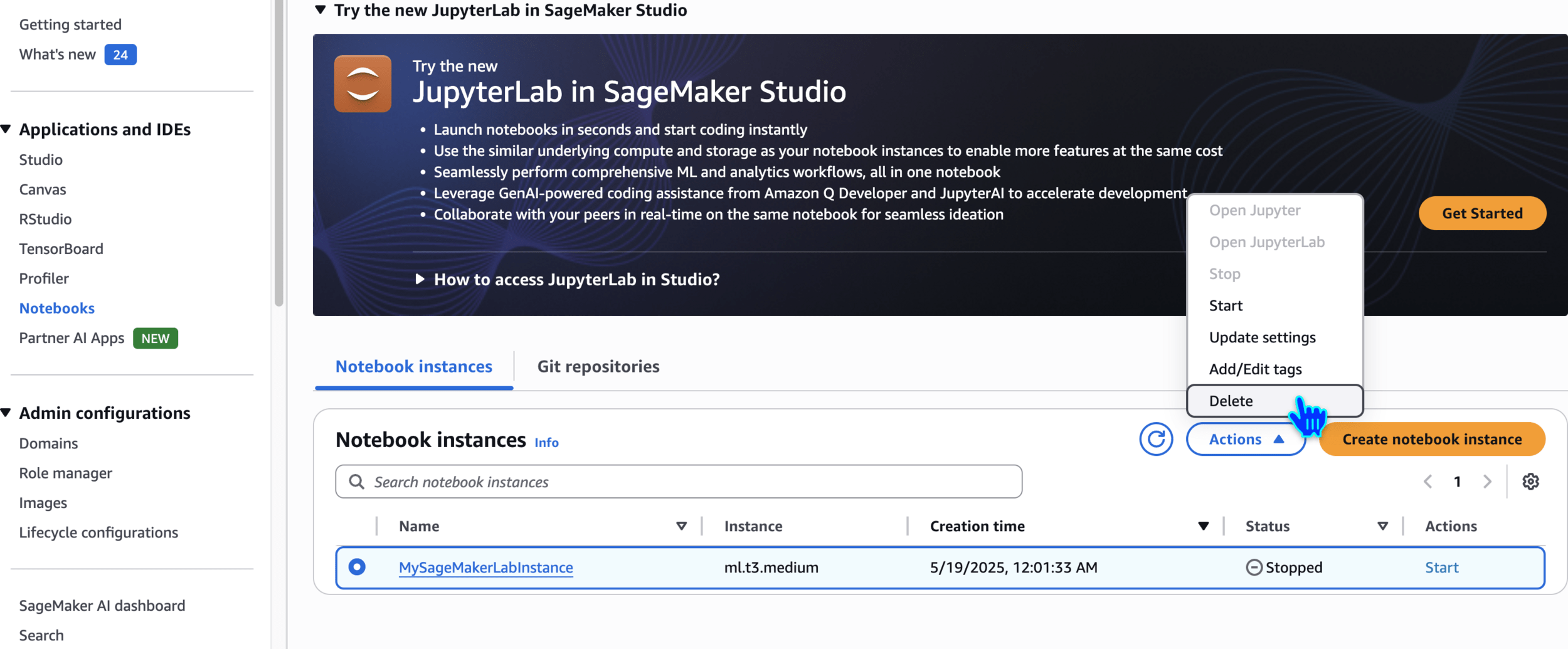

Delete the SageMaker Notebook Instance

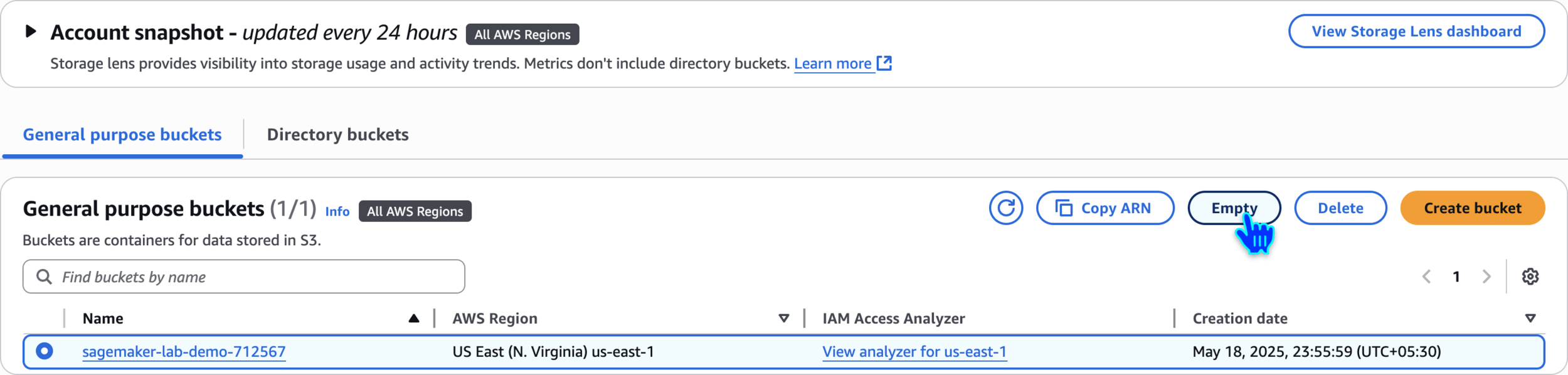

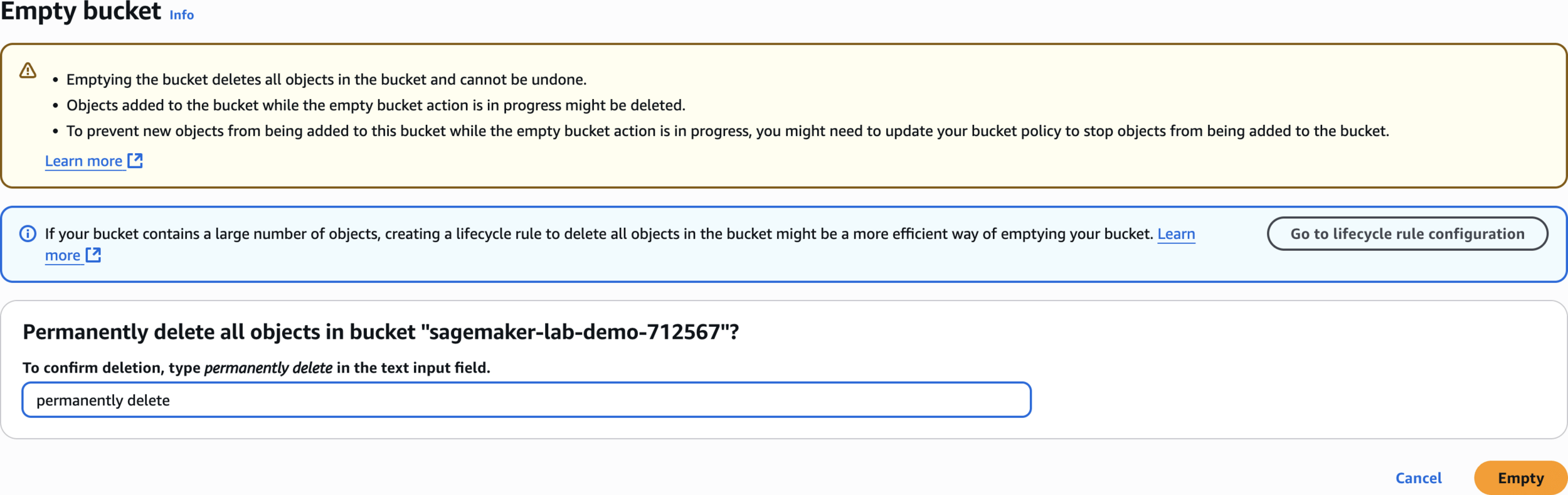

Empty the S3 bucket

permanently delete

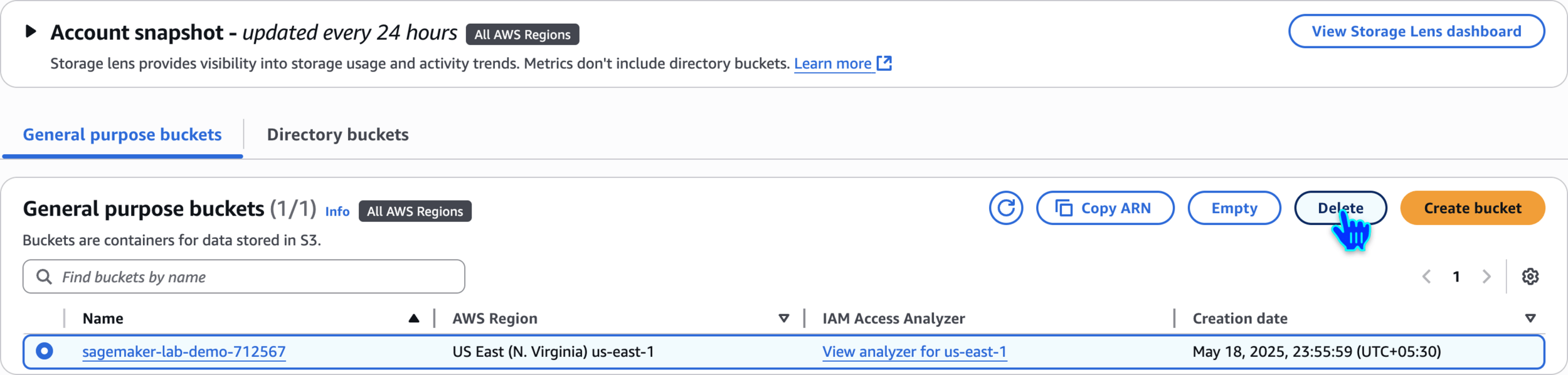

Delete bucket

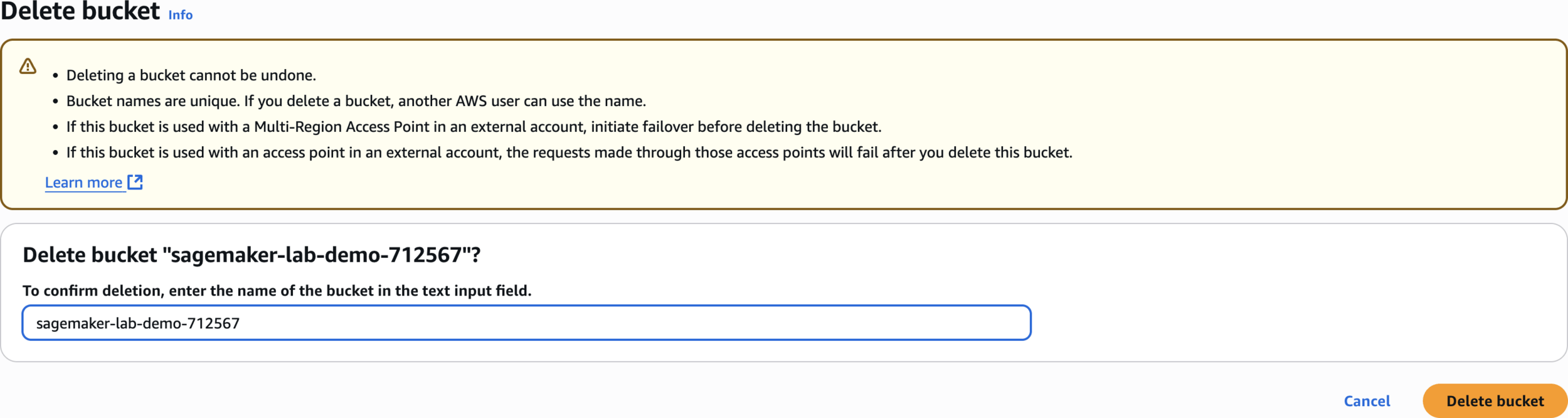

sagemaker-lab-demo-712567

Delete bucket
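The empty-then-delete steps above can also be scripted. The sketch below assumes a boto3 S3 client (for example `boto3.client("s3")`) is passed in as `s3_client`, and the function name `empty_and_delete_bucket` is hypothetical. It covers unversioned buckets only; a versioned bucket would also need its object versions and delete markers removed.

```python
def empty_and_delete_bucket(s3_client, bucket_name):
    """Delete every object in the bucket, then the bucket itself.

    `s3_client` is assumed to be a boto3 S3 client. Returns the number
    of objects that were deleted.
    """
    deleted = 0
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket_name):
        keys = [{"Key": obj["Key"]} for obj in page.get("Contents", [])]
        if keys:
            # A page holds at most 1000 keys, which is also the
            # per-request limit of delete_objects.
            s3_client.delete_objects(Bucket=bucket_name, Delete={"Objects": keys})
            deleted += len(keys)
    # S3 only allows deleting a bucket once it is empty.
    s3_client.delete_bucket(Bucket=bucket_name)
    return deleted
```

Both steps are irreversible, so double-check the bucket name before running anything like this.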

🙏 Thanks for Watching

Amazon SageMaker - Hands-On Demo

By Deepak Dubey
