Thinking Ethically

HMIA 2025

Outline

The Hook

5

Is it not just one giant classification algorithm?

What is ethics/ethical/moral?

The Readings

5

STOP+THINK

Name and briefly explain the four major branches of ethics.

Ethics often involves resolving conflicts between equally valid principles. What's an example of that?

The Wikipedia article mentions consequentialism and deontology. Explain how they differ.

STOP+THINK

Professional ethics often grant experts both special authority and special obligations. Explain with an example.

Professions often define themselves through codes of ethics. Why might the public be skeptical of that approach?

STOP+THINK

The article lists key concerns in AI ethics, such as bias, transparency, and accountability. Say briefly what each of these is.

Some AI ethicists argue for human-in-the-loop oversight, while others emphasize formal constraints or values built into systems. What do these terms mean? Where do you stand?

What did we read?

PRE-CLASS

Ethics

Normative Ethics

Descriptive Ethics

Meta-Ethics

Applied Ethics

Consequentialism

Deontology

Virtue Ethics

Categorical Imperative

Universalism

Relativism

Utilitarianism

Professional Ethics

How one ought to act...

Descriptive/Empirical vs. Normative

Is vs Ought

Look back at our codes of conduct for wizards: they are about how wizards SHOULD act or SHOULD be.

STOP+THINK (handout)

5

10

STOP+THINK

How do the three major branches of ethics—normative, meta-ethics, and applied ethics—map onto the problem of aligning AI systems with human values?

Ethics often involves resolving conflicts between equally valid principles (e.g., freedom vs. safety). How might such ethical dilemmas show up in the design of professional codes or AI systems?

The Wikipedia article mentions moral relativism and moral universalism. Why might this distinction matter for aligning diverse human communities—or machines trained on their data?

STOP+THINK

Professional ethics often grant experts both special authority and special obligations. Why might this dual structure be important for aligning expert intelligence with the public good?

Professions often define themselves through codes of ethics. What alignment problems might arise if these codes are vague, unenforced, or in conflict with institutional incentives?

STOP+THINK

The article lists key concerns in AI ethics, such as bias, transparency, and accountability. Which of these concern alignment failures, and which concern the limits of our ability to assess alignment?

Some AI ethicists argue for human-in-the-loop oversight, while others emphasize formal constraints or values built into systems. What are the trade-offs between these approaches as alignment strategies?

CLASS

Two Thespians?

5

You work in a nonprofit organization that advocates for environmental issues. A mid-level manager, Elena, has been promoting and giving high-visibility assignments to her personal friends within the team. The promotions don’t appear to be based on merit or performance. While this isn’t illegal, it’s creating resentment, weakening trust, and making other qualified staff feel sidelined.

5

Context

I got your email. But help me see what the problem is. What's going on here that has you worried?

What’s going on is that Elena seems to be favoring her friends for promotions and key projects, regardless of performance. The ethical issue is fairness: people who work hard and contribute meaningfully aren’t being recognized. It’s not illegal, but it undermines integrity, respect, and trust within the team. In the bigger context, we’re a nonprofit that asks for fairness and justice in society; if we don’t uphold those values internally, we risk damaging morale, losing talent, and even weakening our credibility with donors and the communities we serve.

Stakeholders

I’m worried that this favoritism isn’t just about morale; it’s about how it affects all the people tied to our work. Even staff who aren’t in Elena’s circle are starting to disengage.

Right. So internally, the staff are obvious stakeholders. But who else do you think is affected?

Well, first the organization itself. If talented people leave because they don’t see fair advancement, our ability to deliver on environmental campaigns is weakened. That’s a direct risk to our mission.

True. And external stakeholders?

Our donors and partners. They expect us to run with integrity and professionalism. If they notice high turnover or underperforming teams, they’ll lose confidence. And ultimately, the communities we serve, who rely on us to advocate effectively, suffer if our strongest people aren’t given a fair chance to lead.

So the stakes are bigger than just Elena’s team dynamic. We’re talking about fairness to individual employees, but also the reputation and effectiveness of the entire nonprofit, and the trust of donors and communities.

Exactly. That’s why I think it’s important to surface this carefully. It’s not just a personal conflict; it’s a systemic issue with ripple effects.

Actions and Consequences

I see a few paths forward. One option is to do nothing and hope things even out, but if we ignore it, I think morale will continue to erode and we risk losing strong performers. Another option is to raise the issue directly with Elena; that might prompt her to reconsider, but it could also damage my relationship with her and make me look like I’m challenging authority. A third path would be to bring the concern to leadership or HR, framing it around fairness and organizational effectiveness. That might push for a more transparent promotion process, but it could also create defensiveness or mistrust if people feel blindsided.

Good breakdown. Let’s think through stakeholders. Doing nothing probably benefits Elena and her favored team members in the short term, but it hurts everyone else, especially the overlooked staff. Raising it with Elena directly could give her a chance to adjust without escalation, but if she reacts badly, you could be seen as undermining her. Going to HR or leadership has the potential to protect fairness organization-wide and restore trust, but it risks escalating the conflict if it’s not handled delicately. The organization’s long-term reputation and talent retention are really at stake here.

What Was That?

5

What topics or issues did the two characters talk about?

agents/actors and their choices

context

stakeholders

ethical principles

courses of action, predictions, consequences

concrete course of action

5

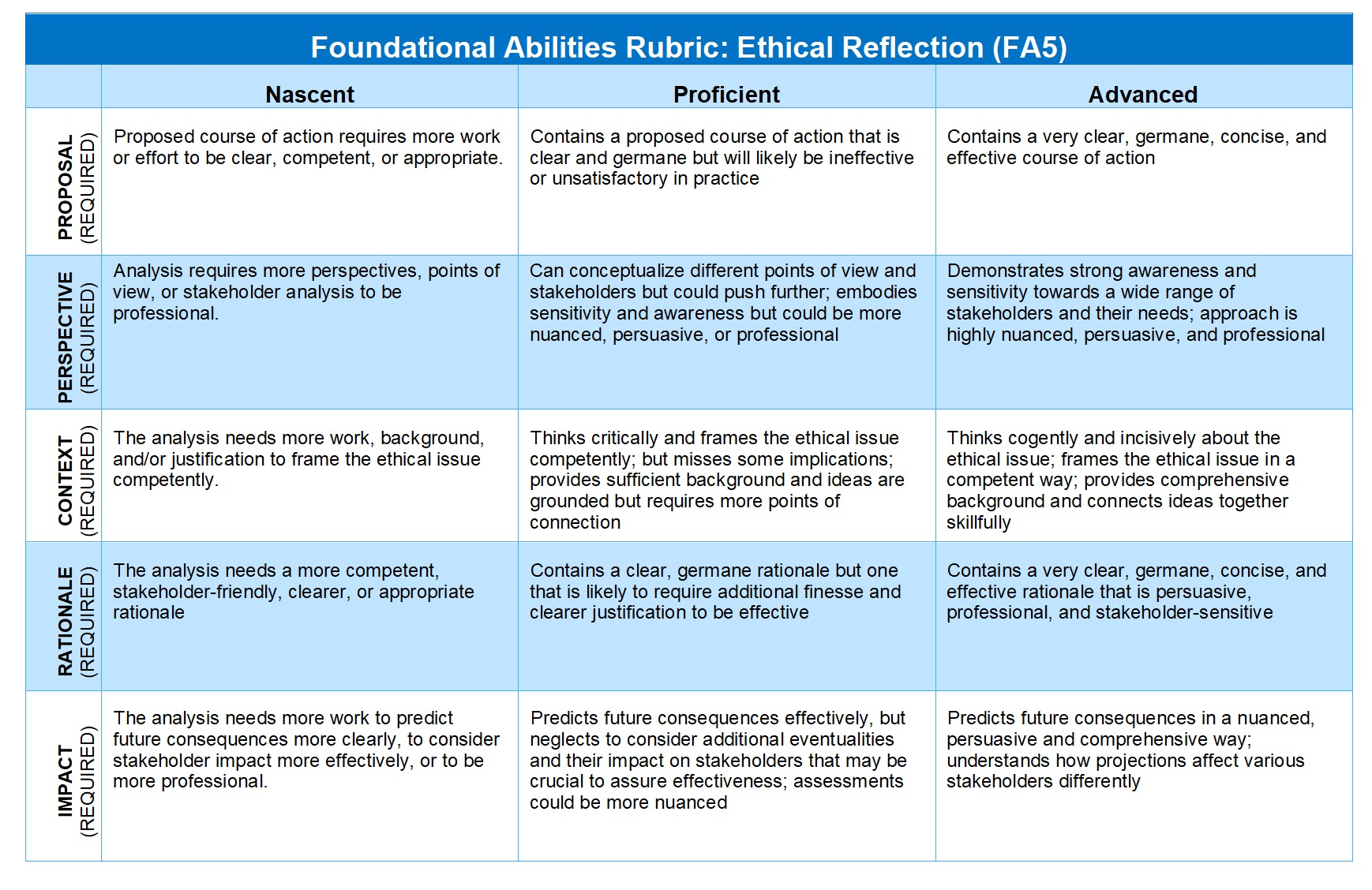

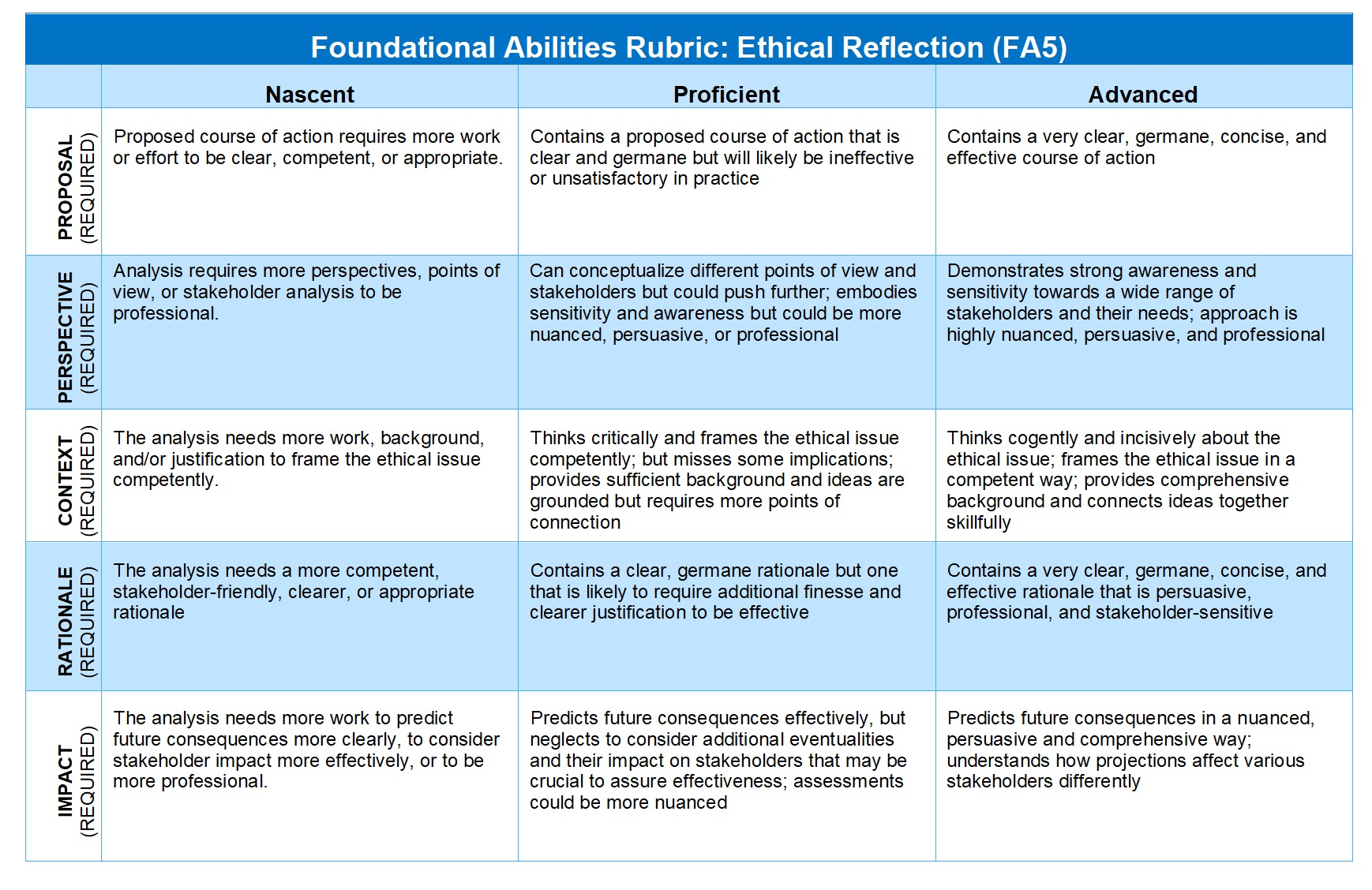

Our requirement is that you write a script for a conversation between you and a work superior or mentor about an ethically challenging situation that arises in your workplace.

The framework of the scenario will be supplied and you will be responsible for filling out the details. The conversation does not have to follow a particular sequence but it is expected to cover some specific issues and reach a conclusion in the form of a short memo from you about the action you recommend.

In the conversation we should hear you thinking clearly about the ethical issue in the situation. Tell us what is going on, what ethical issues are raised, how they fit into the context (what else is going on? what's the bigger picture here? what does one need to be aware of to think clearly about this?).

Who are the agents/actors in this situation? What behavioral choices do they face?

You should communicate an awareness of the full range of stakeholders this situation implicates and what their needs and interests are.

You should describe possible courses of action and make informed predictions about the consequences for different stakeholders.

You should lay out the ethical principles that could guide our recommendations.

Finally, you should write a short memo for your supervisor that recommends a concrete course of action and offers a concise, persuasive, professional, and stakeholder-sensitive rationale for the recommendation.

CONTEXT: Tell us what is going on, what ethical issues are raised, how they fit into the context (what else is going on? what's the bigger picture here? what does one need to be aware of to think clearly about this?).

AGENTS + CHOICES: Who are the agents/actors in this situation? What behavioral choices do they face?

STAKEHOLDERS: You should communicate an awareness of the full range of stakeholders this situation implicates and what their needs and interests are.

ACTIONS + CONSEQUENCES: You should describe possible courses of action and make informed predictions about the consequences for different stakeholders.

ETHICAL PRINCIPLES: You should lay out the ethical principles that could guide our recommendations.

RECOMMENDATIONS: Recommend a concrete course of action and offer a concise, persuasive, professional, and stakeholder-sensitive rationale for the recommendation.

You may ask yourself

What is the context? What's going on here?

Who are the agents/actors in this situation? What behavioral choices do they face?

Who are all the stakeholders? What are their needs and interests?

What are our possible courses of action? What consequences do we predict for different stakeholders?

What ethical principles could guide our recommendations?

What do we put in the memo for our supervisor to recommend a concrete course of action and offer a concise, persuasive, professional, and stakeholder-sensitive rationale for the recommendation?

TWO MORE VOLUNTEERS

5

So, you wanted to talk about something?

As you know, our team is preparing to launch a new productivity app. During testing, I've discovered that the app collects users' clipboard data—even when the app is running in the background—and uploads it to the company server.

This data could include sensitive information, such as passwords or personal messages.

Why does this happen?

The feature was added to enable a cross-device sync function, but users were not explicitly informed.

What's your take on this?

We’re in a competitive market where privacy concerns are increasingly important. Our company has previously marketed itself as a “privacy-conscious” alternative to larger tech firms. Failing to meet that standard now could feel like a betrayal to users. The engineering team may not have been aware of the ethical implications when they implemented this feature. It solved a problem we had at the time.

So, let's think through this. Who are the stakeholders here?

Well, users, for one. But then there's the product team - they're under pressure. And legal and leadership have a reason to be concerned too.

Walk me through what they care about, as you see it.

Users expect transparency and control over their personal data.

The product team might go bananas if we talk about delays and diminished features.

Legal/compliance staff may worry about GDPR or other privacy violations.

The company leadership likely wants to maintain a good reputation and avoid liability.

That sounds right. What are our options here?

We could (1) disable the clipboard sync until we’ve added clear consent and disclosure, (2) redesign the feature so it only works with explicit user activation, or (3) keep it as is, but that risks violating privacy expectations and regulations.

So, how are you thinking about this? I mean, what are the ethics, not the practical implications.

Well, the principles at stake include respect for user autonomy (through informed consent), privacy, and professional responsibility. The feature, as currently implemented, violates user trust and potentially breaches data protection laws or standards.

OK, now the pragmatics. What are the impacts and consequences of our options here?

In the short term, disabling the feature may delay the app launch or reduce a popular function. However, continuing with the current design risks user backlash, reputational damage, and legal exposure. Long-term trust with users is more valuable than the short-term benefit of seamless sync.

And you recommend what?

I recommend disabling the clipboard data collection feature until we implement proper user consent and disclosure.

YOUR TURN

CONTEXT

AGENTS + CHOICES

STAKEHOLDERS

ETHICAL PRINCIPLES

ACTIONS + CONSEQUENCES

RECOMMENDATIONS

15

REVIEW

15

Context: What's going on here? What makes this an ethics case?

Resources

Downes, S. 2017. "An Ethics Primer" (blog post)

Wikipedia editors. "Ethics"

Wikipedia editors. "Professional Ethics"

Wikipedia editors. "Ethics of artificial intelligence"