Transparency and Record Keeping

HMIA 2025

Describe a time when things in your life (or recollection) went badly because there were no written records or when you were saved (or a story you can remember about someone else) by the fact that there was a written record.

Utah and California have passed laws requiring entities to disclose when they use AI. More states are considering similar legislation. Proponents say labels make it easier for people who don't like AI to opt out of using it.

"They just want to be able to know," says Utah Department of Commerce executive director Margaret Woolley Busse, who is implementing new state laws requiring state-regulated businesses to disclose when they use AI with their customers.

"If that person wants to know if it's human or not, they can ask. And the chatbot has to say."

California passed a similar law regarding chatbots back in 2019. This year it expanded disclosure rules, requiring police departments to specify when they use AI products to help write incident reports.

Transparency and Record Keeping

One of the central obstacles to alignment among intelligent agents—human or artificial—is the inscrutability of inner states and the opacity of the world: we cannot know what others are thinking, nor can we see everything that happens. Because intentions, reasoning, and perceptions are hidden—and even our own memories are fallible—alignment depends on creating external traces of cognition and action that others can inspect, verify, and learn from.

Transparency and recordkeeping counter the misalignment potential of secrecy, obscurity, and opacity. They make behavior visible, accountable, and interpretable across time and context. Records, disclosures, reporting requirements, and audits allow agents to see what is happening and what has happened; checklists proactively ensure that what will happen remains visible and verifiable. Together, these mechanisms transform private judgment into shared evidence, supporting trust, coordination, and corrigibility within complex systems.

.

Records

A xxx is a mechanism that

LOREM

Human Interaction

lorem ipsum

Organizational

Lorem ipsum

Legal/Political

lorem ipsum

Professional/Expert

Lorem ipsum

Machines

Lorem Ipsum

Disclosures

A xxx is a mechanism that

LOREM

Human Interaction

lorem ipsum

Organizational

Lorem ipsum

Legal/Political

lorem ipsum

Professional/Expert

Lorem ipsum

Machines

Lorem Ipsum

Disclosures

A xxx is a mechanism that

LOREM

Human Interaction

lorem ipsum

Organizational

Lorem ipsum

Legal/Political

lorem ipsum

Professional/Expert

Lorem ipsum

Machines

Lorem Ipsum

Reporting requirements

A xxx is a mechanism that

LOREM

Human Interaction

lorem ipsum

Organizational

Lorem ipsum

Legal/Political

lorem ipsum

Professional/Expert

Lorem ipsum

Machines

Lorem Ipsum

Audits

A xxx is a mechanism that

LOREM

Human Interaction

lorem ipsum

Organizational

Lorem ipsum

Legal/Political

lorem ipsum

Professional/Expert

Lorem ipsum

Machines

Lorem Ipsum

Checklists

A xxx is a mechanism that

LOREM

Human Interaction

lorem ipsum

Organizational

Lorem ipsum

Legal/Political

lorem ipsum

Professional/Expert

Lorem ipsum

Machines

Lorem Ipsum

THE READINGS

The piece explains how modern intensive care has pushed the boundaries of human survival—keeping patients alive through ventilators, dialysis, ECMO, feeding lines, and more—while creating a new problem: extreme complexity that routinely overwhelms even elite clinicians. Two vivid cases frame the point. A three-year-old Austrian girl is pulled pulseless from an icy pond after roughly two hours without a heartbeat; a tightly choreographed sequence—bypass, ECMO, sterile lines, pressure control, ventilation, neuro-monitoring—brings her all the way back. By contrast, a 48-year-old man with liver injury survives multi-organ failure but nearly dies from infections introduced via his life-sustaining lines—an emblem of how easily complex care harms as it heals.

Scale and fragility define the ICU. On any given day in the U.S., ~90,000 people are in intensive care; across a lifetime, most of us will see it from the inside. The average ICU stay is four days and survival is ~86%, but each patient requires ~178 distinct actions per day and teams still make about a 1% error rate—roughly two errors daily per patient. The risks compound over time: after 10 days, ~4% of central lines become infected (80,000 cases/year, with 5–28% mortality), ~4% of urinary catheters cause bladder infection, and ~6% of ventilated patients develop pneumonias with 40–55% mortality. ICU medicine works only when the odds of harm are kept consistently below the odds of benefit—no small feat amid alarms, crises next door, and fatigue.

Medicine’s reflexive answer to complexity has been ever deeper specialization—“intensivists” for the ICU, fellowships on fellowships. But the article argues that expertise alone cannot tame this level of complexity. Drawing an analogy from aviation’s “B-17 moment,” it recounts how Boeing’s revolutionary bomber first crashed due to “pilot error” born of complexity. The fix was not more heroics but a humble tool: the pilot’s checklist. With stepwise checks for takeoff, flight, landing, and taxiing, crews subsequently flew 1.8 million miles accident-free; the B-17 became a World War II workhorse.

Peter Pronovost’s work at Johns Hopkins brings that aviation lesson to the ICU. He started with one narrow, deadly problem: central-line infections. His five-step insertion checklist—wash hands; chlorhexidine skin prep; full sterile drape; sterile mask/hat/gown/gloves; sterile dressing—codified well-known basics. Yet when nurses observed physicians, at least one step was skipped in over a third of insertions. Empowering nurses to halt non-compliant procedures and to prompt daily line removal reviews drove the line-infection rate from 11% to ~0% at Hopkins, preventing dozens of infections and deaths and saving millions.

Could that work “in the wild”? In Michigan’s Keystone Initiative, hospitals paired checklists with executive support to fix supply gaps (chlorhexidine soap, full drapes) and vendor kits, tracked infection data, and sustained practice. Within three months, ICU line infections fell 66%; many units hit zero and stayed there. Over 18 months, the program saved an estimated 1,500 lives and $175 million. Parallel ventilator and pain-management checklists cut pneumonias, untreated pain, and ICU length of stay.

Why the resistance? Checklists can feel like an affront to professional audacity—the “right stuff” culture that valorizes improvisation under fire. But the article emphasizes that checklists don’t replace judgment; they reserve scarce cognitive bandwidth for the parts that truly require it, standardizing the mundane yet critical steps most vulnerable to omission. The larger indictment is that the “science of delivery”—how to ensure therapies are reliably executed—has been neglected relative to discovery and treatment science. For a few million dollars and national coordination, Pronovost estimates, line-infection checklists could be rolled out across U.S. ICUs; Spain, he notes, is moving faster.

The takeaway: in domains where lives hinge on intricate sequences, checklists are a deceptively powerful technology. They convert individual expertise into team reliability, reduce avoidable harm, and create the conditions in which skill and courage actually matter most. The question is less whether they work than whether medicine will accept the cultural shift from heroic memory to disciplined execution.

Which statement best captures the article’s central takeaway?

A. ICU outcomes improve primarily by adding more sub-specialists with longer training.

B. Checklists standardize critical but easily overlooked steps, enabling teams to manage ICU complexity more safely without replacing clinical judgment.

C. Most ICU harm comes from rare, unforeseeable equipment failures, so technology upgrades are the main solution.

D. Checklists are useful only in aviation because patients are too variable for medicine to benefit.

AI SUMMARY

A. To provide detailed technical specifications for model

replication and performance optimization.

B. To document and communicate a model’s intended uses,

performance across relevant groups, and ethical

considerations.

C. To ensure that models comply with open-source licensing

requirements.

D. To certify that a machine learning model has been

approved for deployment in sensitive domains.

According to Mitchell et al. (2019), “Model Cards for Model Reporting,” what is the primary purpose of a model card?

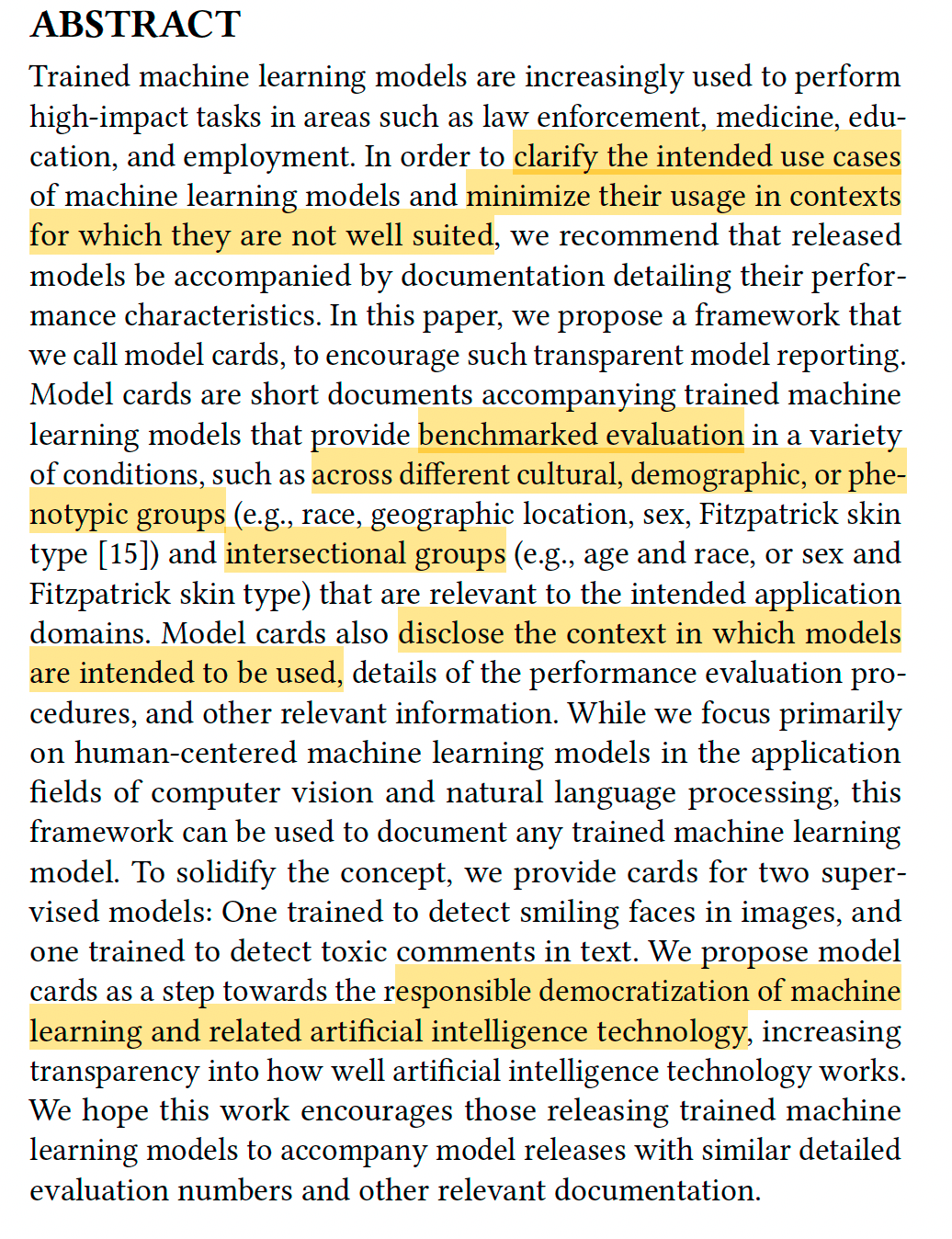

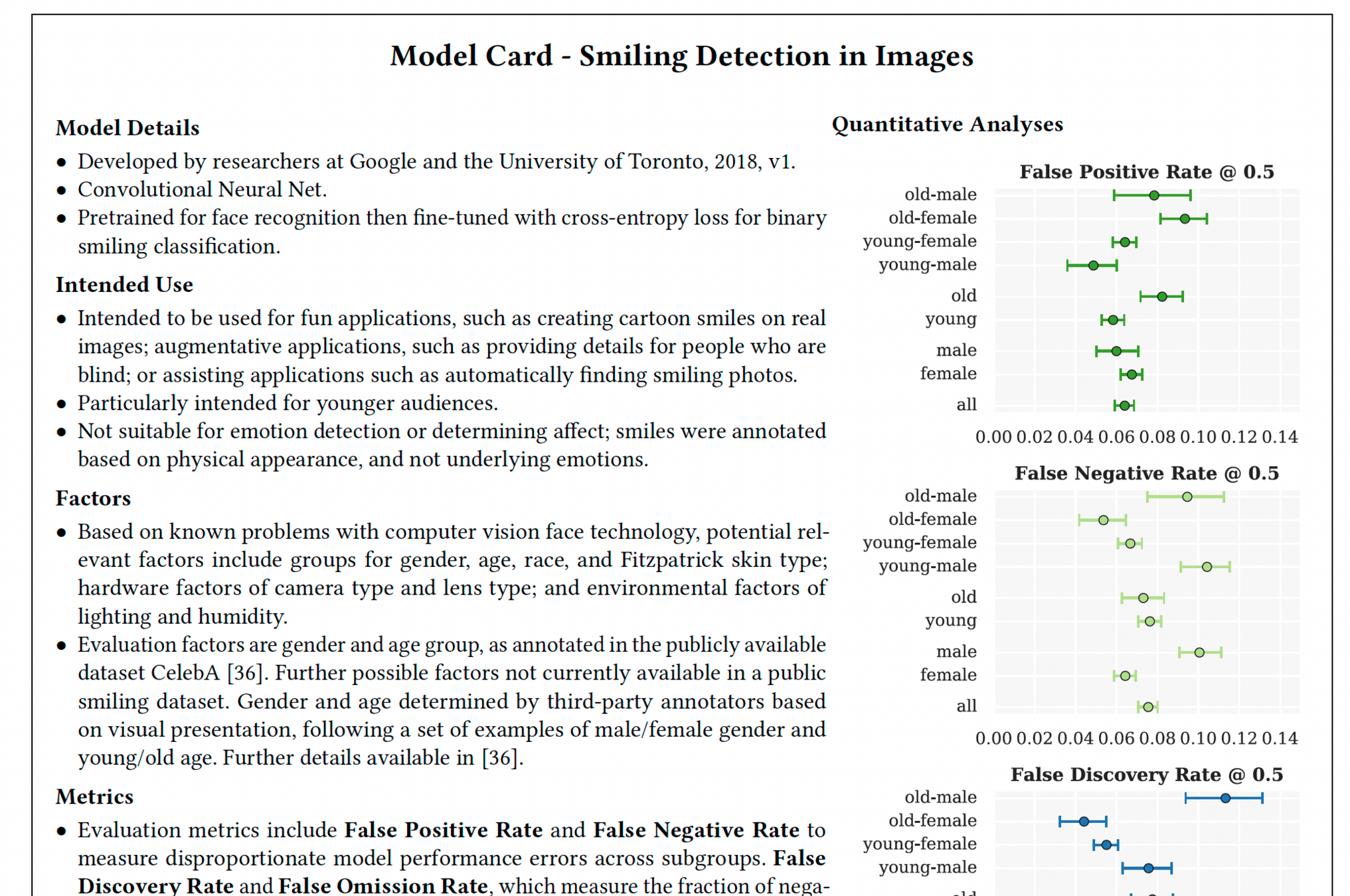

In “Model Cards for Model Reporting” (2019), Margaret Mitchell and colleagues propose a standardized framework for documenting machine learning models to improve transparency and accountability. Model cards are short documents that accompany trained models, summarizing their intended uses, limitations, and performance across different demographic, cultural, or phenotypic groups. By making these details explicit, model cards help developers, policymakers, and users understand how models work, where they might fail, and how biases or fairness issues could affect outcomes. The authors present model cards as an essential tool for promoting responsible AI development and for aligning technical practice with ethical and social values.

According to Mitchell et al. (2019), “Model Cards for Model Reporting,” what is the primary purpose of a model card?

CONCEPTS AND TERMS

Model Details. Basic information about the model.

– Person or organization developing model

– Model date

– Model version

– Model type

– Information about training algorithms, parameters,

fairness constraints or other applied approaches,

and features

– Paper or other resource for more information

– Citation details

– License

– Where to send questions or comments about the model

Intended Use. Use cases that were envisioned during development.

– Primary intended uses

– Primary intended users

– Out-of-scope use cases.

Factors.

Factors could include demographic or phenotypic

groups, environmental conditions, technical attributes, or

others listed in Section 4.3.

– Relevant factors

– Evaluation factors

Metrics. Metrics should be chosen to reflect potential realworld

impacts of the model.

– Model performance measures

– Decision thresholds

– Variation approaches

Evaluation Data. Details on the dataset(s) used for the

quantitative analyses in the card.

– Datasets

– Motivation

– Preprocessing

Training Data. May not be possible to provide in practice.

When possible, this section should mirror Evaluation Data.

If such detail is not possible, minimal allowable information

should be provided here, such as details of the distribution

over various factors in the training datasets.

Quantitative Analyses

– Unitary results

– Intersectional results

Ethical Considerations

Caveats and Recommendations

HMIA 2025

PRE-CLASS

Zazen Codes Model Cards for Model Reporting [15m]

According to the video transcript, what is the main reason model cards are considered essential in machine learning?

A. They improve model training speed and reduce

coding errors.

B. They make it easier to convert models into

production-ready APIs.

C. They promote transparency, accountability, and

ethical awareness by documenting how models

work, their intended uses, and limitations.

D. They automatically correct data bias during the

training process.

To read a model card, first understand its purpose is to provide transparency and guidance on an AI model, similar to a nutrition label. Then, review the sections, which typically cover the model's basic details (name, version), intended use and limitations, evaluation metrics and data, training data information, and ethical considerations, including potential biases and fairness evaluations.

1 Introduction

2 Safeguard Results

3 Agentic Safety

4 Alignment Assessment

5 Welfare Assessment

6 Reward Hacking

7 Responsible Scaling

What is CBRN?

Get Your Dialog Working

Walk your neighbor through it

Scan the chapter your section came from and identify 5 concepts/terms that we should know.

What is sandbagging?

What is on Anthropic's alignment fails list?

window.dialogData = [

{

"speaker": "Assistant",

"text": "<p>Section 2.6 of the <em>Claude 4 System Card</em> is titled “Bias evaluations.” If that's the section you meant, why don't you start by telling me what issues you think this section is addressing? Or if I've got the wrong one, let's get that straightened out first.</p>"

},

{

"speaker": "Human",

"text": "<p>It seems they want to measure/detect the degree to which the substance of Claude's responses are biased in certain ways. They talk about political and discriminatory bias. My first thought is whether those are the only two kinds of bias we should be worried about.</p>"

}

]systemCardTalkn_n.js.js

chapter

section

HMIA 2025

PRE-CLASS

Get a Receipt

HMIA 2025

CLASS

HMIA 2025

Resources

"The Checklist" by Atul Gawande The New Yorker December 2, 2007

Book The Checklist Manifesto on Internet Archive

Chapter 2 from The Checklist Manifesto "The Checklist" pp. 32-47.

Chapter 4 from The Checklist Manifesto “The Idea” pp 72-85

Review pages from Perrow on Weber's bureaucracy and Weber himself on recordkeeping(in FILES).

The Shuttle Inquiry (NYT pdf in FILES)

Sections on auditing in Data and Society Algorithmic Accountability 2018

Metaxa et al. 2021 "Auditing Algorithms", Foundations and Trends® in Human-Computer Interaction: Vol. 14, No. 4, pp 272–344. DOI: 10.1561/1100000083. Sections 1.1 What is an audit? 1.2 Differentiating Algorithm Audits from Other Testing 3.1 What is an Algorithm Audit? 3.2 Algorithm Auditing Domains

Ryan Notification Norms