Shared Meaning

HMIA 2025

Hard to tune principles enough to fit all cases

Human values vary too much over space (groups) and time

There are always tradeoffs, and different agents might prefer different tradeoffs.

Values are not orthogonal

Need enforcement.

Surveillance, oversight

Yes guards, but who guards and who guards the guards?

Principles need to be interpreted, fit to concrete cases - human job?

Need an agency to track rules and apportion punishment.

Some say "need a human element." Some say "humans imperfect, need machine."

Need coordinating institution

Too rigid or too literal might yield a brittle system.

Talk is necessary, but not sufficient.

How does "talk" get internalized?

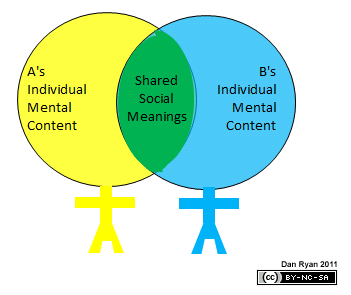

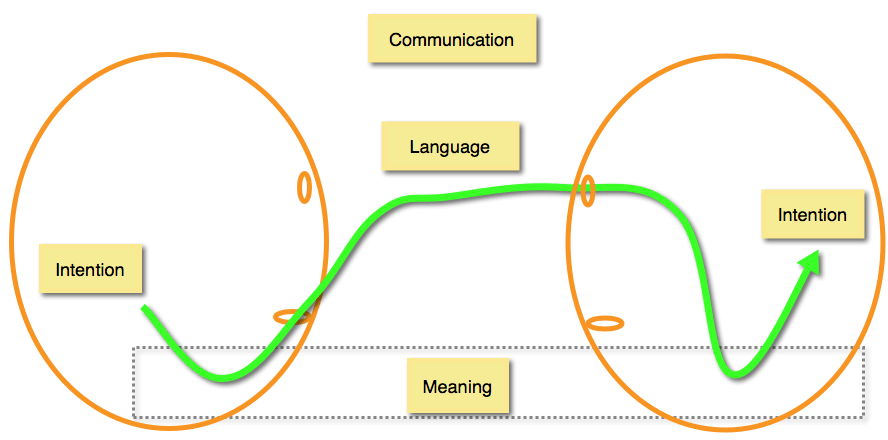

Agent/self as dual-natured

World model based on experience

+

World model derived from society

Two Answers Today

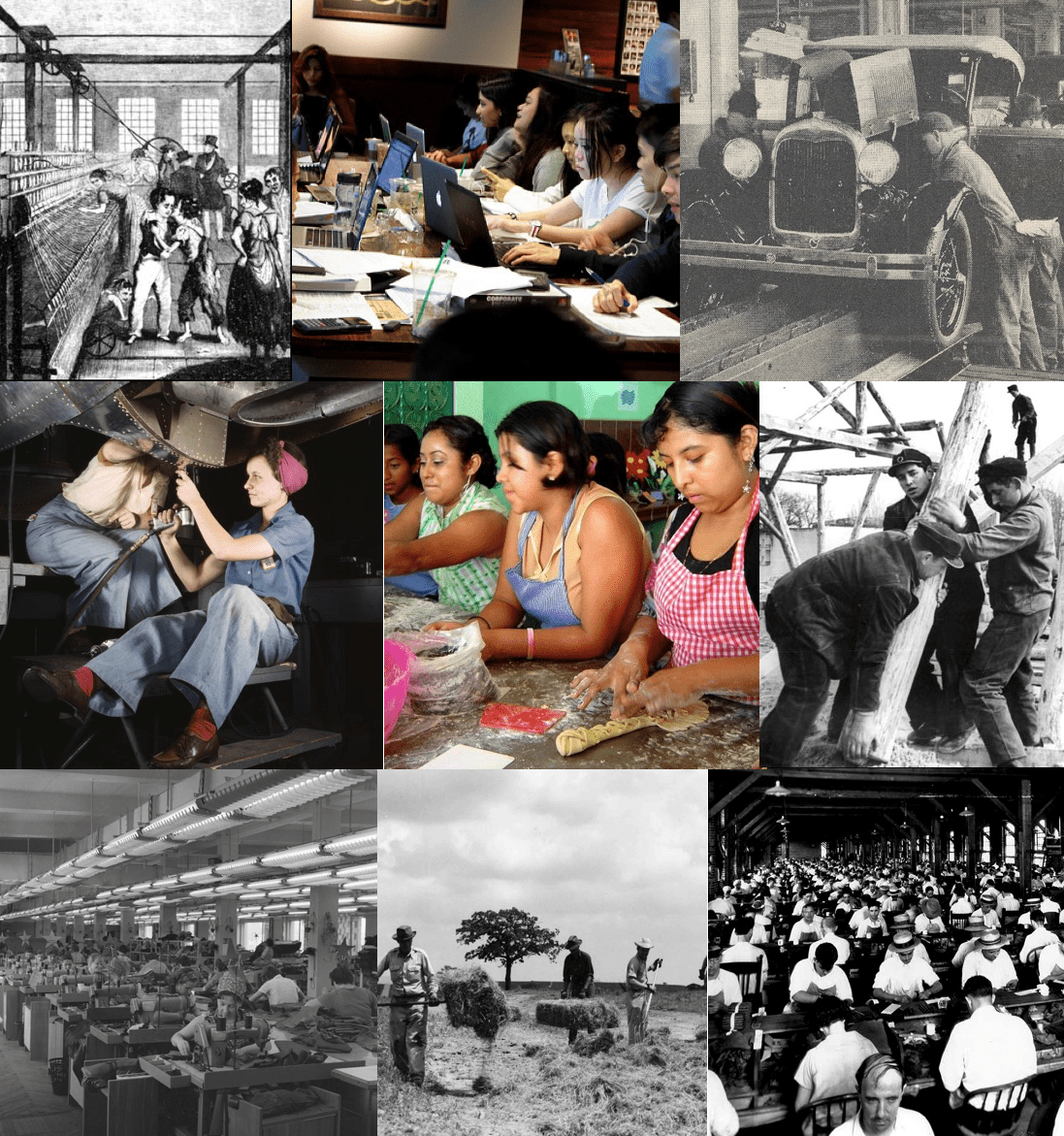

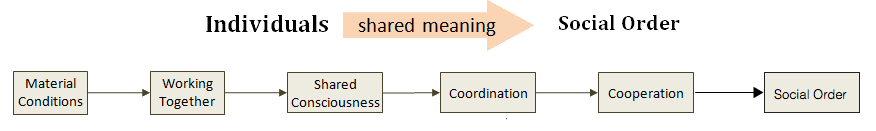

Karl Marx 1820s-1870s: by working together

+

George Herbert Mead 1860s-1930s: by playing together


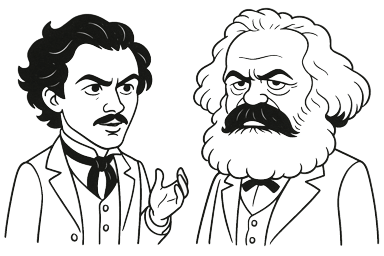

Karl Marx - "The Production of Consciousness" 1845

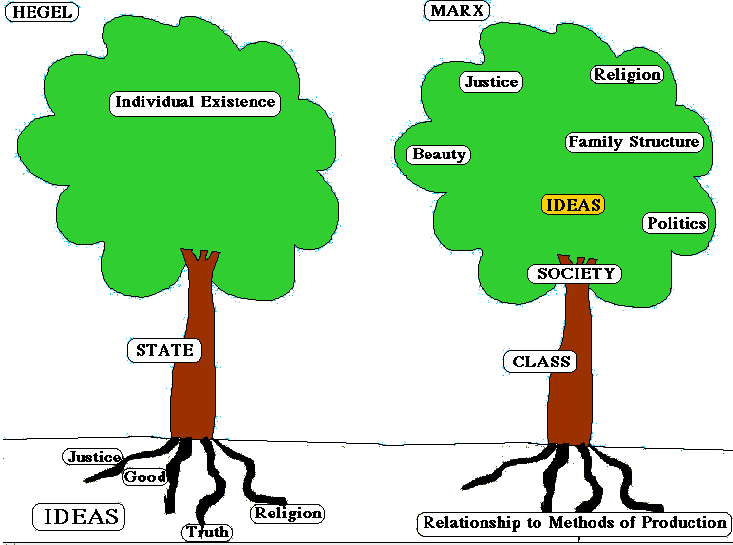

Marx is a MATERIALIST. For him, real human activity gives rise to social relations from which ideas emerge.

This is the younger, more humanist and philosophical (as opposed to political) Marx. He focused on the human effects of capitalism, especially "alienation": the estrangement of workers from what they make, from the process of labor, from other people, and from their own human potential. Marx was deeply engaged with German philosophy (especially Hegel), French socialism, and English political economy. The early works emphasize the conditions for human flourishing under and beyond capitalism.

What distinguishes humans is that they PRODUCE their means of life.

Productive Activity (BOTH what + how)

Form of Life

Social Structure

Ideas

"It is not consciousness that determines life, but life that determines consciousness."

For an IDEALIST like Hegel, the ideas of the day (Zeitgeist) generate institutions and relations in society.

If how people think arises partly from how they work, then at least a part of their consciousness will be shared.

This yields solidarity around their productive activity.


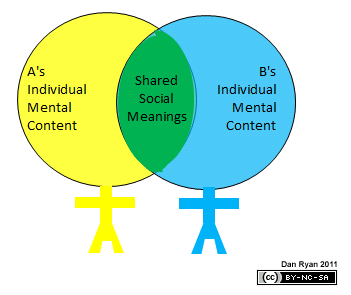

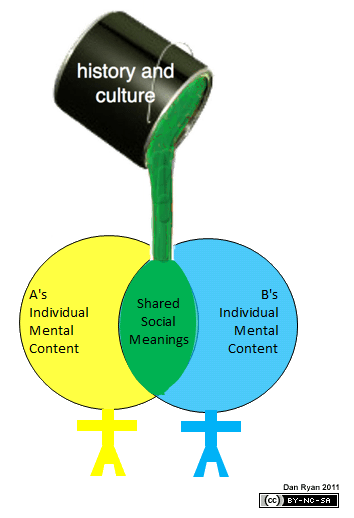

Humans are part individual, part social. A part of our mental content is shared with others in our society or group.

But how does the social part get into their heads?

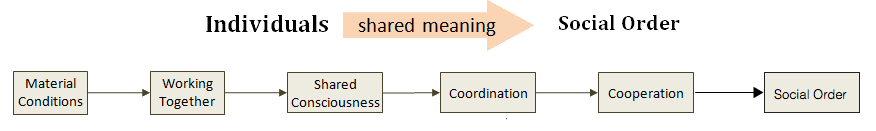

Marx's answer is "by working at productive activity together." Sharing history and culture is not just conceptual - for Marx it is real material activity.

working together

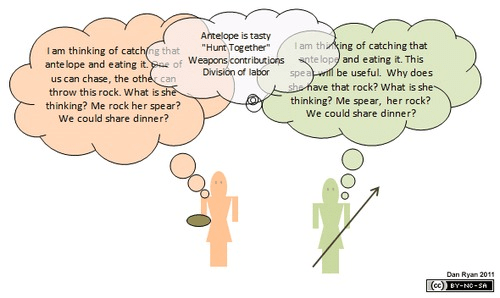

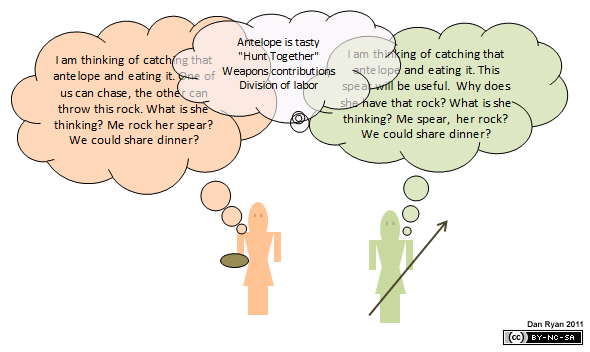

What does shared meaning mean?

...for human intelligence: language, cultural practices, "how we do it 'round here"

...for organizational intelligence: onboarding, all-hands, uniforms, logos, "our market"

...for expert intelligence: by definition! joining a profession is taking on shared meaning

...for machine intelligence: shared weights? shared data?

pathology: company brain, rigidity, out-of-touch organization

Marx: The Production of Consciousness

(consciousness here means "how people think and what people know")

1. Marx says "I'm a materialist!"

Humans are "ALWAYS ALREADY" in the material world working to survive.

(we should like this)

The contrast is with idealism: abstractions come first.

Materialism says we start with stuff and ideas follow.

How is an Uber driver's world different from a fast food worker's world?

Marx: Mode of Production → Mode of Consciousness


As people work together, they transform not only their environment, but also themselves. The MODE OF PRODUCTION (how we organize work and distribute tasks) creates a MODE OF CONSCIOUSNESS.

YOUR WORLD IS THE WORLD YOUR WORK MAKES

STOP+THINK: How might your and my mode of production differ? How might this make our consciousnesses differ?

teach/research vs learn

married and familied vs young and single

pay check vs pay tuition

esteem vs grades

free time vs course schedule

feed myself vs eat in dining halls

focus attention vs multitasking

What I take as the natural order of things is not the same as what you do.

The Social Relations of Production

In producing we practice the art of working together

How we coordinate, how we set mutual expectations, how we are interdependent, how we share space and coordinate time - all these things structure how we see the world, how we arrive at what is and what is right and wrong.

These social relations of production are the scaffolding for shared meaning.

- Fast-food workers experience collective schedules, managers, and customers together; they are partly selling a cheerful demeanor; work is highly role-divided. Time is about packing actions into hours, waiting out the clock. Space is a limited work area.

- Gig workers like Uber drivers operate in isolation mediated by an app. They set their own schedule. They worry about ratings. They worry about passengers who will puke in their back seat. Time is about snagging the next assignment. Getting there on time. How long until you can pee? How late is it worth working? Space is MY car, but always full of strangers. Space is a city I know, but destinations and routes picked out by the app. Space is how far away do I have to go? Space is whether this is a safe neighborhood.

Marx would predict different forms of consciousness and solidarity to emerge from each.

Marx's Bigger Point

He's arguing with Hegel

For Hegel, understanding the world starts with big ideas (justice, right, the state) and asks how they are manifest in any given historical epoch.

For Marx, understanding the world starts with social life and asks how the ideas of an epoch arise from the modes of production in that epoch.

"It is not consciousness that determines life, but life that determines consciousness."

Why It Matters

Change the mode of production, change consciousness, values, and social order

Implications

- There are no abstract "human values" waiting to be aligned to; values arise from concrete material conditions. As long as humans inhabit different conditions, there isn’t one stable thing to “feed into” our machines.

- We should be skeptical of the idea that machines can simply learn our values by observation or ingestion of text. Shared meaning, for Marx, emerges only through joint activity, that is, through working together.

- And we must recognize that cooperation is reciprocal: as humans and machines work together, our own consciousness and values will shift in response.

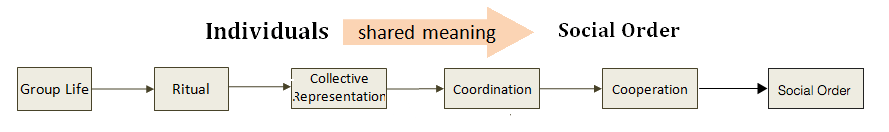

BRIDGE

Marx provides a large-scale, zoomed-out, structural take on WHY groups of people tend to see the world in similar ways.

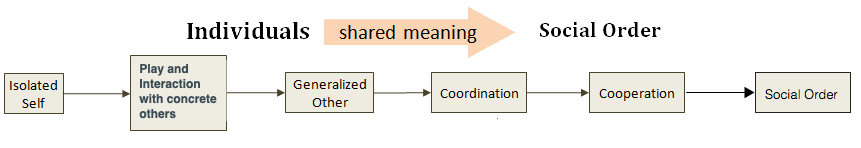

George Herbert Mead zooms in to the micro level and shows HOW those similarities get built moment by moment.

Marx says working together is the key; Mead zeroes in on interaction as the process where it happens.

Perhaps: If Marx gives us the social production of consciousness, Mead gives us the social construction of meaning.

How do agents become "social" selves?

What happens when we hang out?

George Herbert Mead "Play, the Game, and the Generalized Other"

Mechanism: play → game with specific others → generalized other as an internalization

Core Claim:

Shared meaning (and stable social order) happens when individuals can take the organized standpoint of the group—the generalized other—and use it to guide their own action. That internal model is the alignment mechanism.

Practice

shared goals

role playing

an environment of other agents playing roles

PLAY

What are roles?

An internal representation of how a generic other plays the various games we find ourselves playing.

The Generalized Other

Organizations: "here's your job description and here's what everyone else does." The legal department has a sense of what regulators do. Marketing's job is to create a generalized other of customers.

The Generalized Other in Other Realms

Experts: becoming a lawyer or doctor or engineer is basically learning "to think like a ..."; so the generalized other is all my peers, and as an expert my job is to have a very strong "generalized other" sense of some aspect of the world.

Machines: as we will see, agents model our goals, constraints, and norms and use these models to plan.

STOP+THINK: Name a 'game' you’re in this week (lab meeting, kitchen cleanup, clinic intake, code review). What’s the common end? Name 3 roles you must hold in mind to act well.

STOP+THINK: Think of an instance where things didn't go right (misalignment). Which role in the game did you fail to simulate?

STOP+THINK: What is one cue your community uses to teach members about its generalized other (rubrics, pager protocol, PR FAQ, code of conduct)?

STOP+THINK: If an AI joined your game tomorrow, what roles (not rules) would it need to simulate to get interaction right?

STOP+THINK: Name a 'game' you’re in this week (lab meeting, kitchen cleanup, clinic intake, code review). What’s the common end? Name 3 roles you must hold in mind to act well.

Sample responses:

- Kitchen cleanup: “Common end = clean kitchen fast so we can leave. Roles: the person washing, the one drying, the one organizing storage. I have to remember how each depends on the others so I don’t block the flow.”

- Group project: “Common end = getting the slide deck done by Friday. Roles: the note-taker, the designer, the presenter. I need to remember what each expects from me and how much they’re counting on me to finish my part.”

- Clinic intake shift (nursing / med student): “Common end = safe and accurate patient check-in. Roles: patient, intake nurse, supervising physician. I have to think through what each needs from the interaction to keep things moving.”

- Code review: “Common end = stable, maintainable code. Roles: author, reviewer, and end-user or QA. I have to anticipate what the reviewer will flag and what the user will experience.”

- Lab meeting: “Common end = advancing the project. Roles: PI, presenter, collaborator. I need to balance being critical like the PI, supportive like a teammate, and audience-focused like the presenter.”

STOP+THINK: When did you last feel misaligned? Which role in the game did you fail to simulate?

Sample answers:

- “In a group chat for our project, I jumped in with a fix without realizing someone else had already done it. I didn’t simulate the project manager’s role of tracking who’s assigned what.”

- “At my job I corrected a coworker in front of customers; I forgot to take the role of the customer who just saw us argue.”

- “During a debate in class I kept arguing my point instead of thinking about how the audience would interpret my tone. I wasn’t modeling the listener’s role.”

- “I misread my roommate’s ‘silent cleaning spree’ as anger; turns out she was just on a time crunch. I failed to simulate her deadline-mode role.”

- “When tutoring, I gave too much info at once. I didn’t take the student’s role: how overwhelming it is when you don’t yet know the terms.”

STOP+THINK: What’s one cue your community uses to teach its generalized other (rubrics, pager protocol, PR FAQ, code of conduct)?

Sample answers:

- “The syllabus and grading rubrics tell us what ‘a good student’ looks like: they’re literally the generalized other of the classroom.”

- “At my hospital we use the pager etiquette sheet: it tells you how to hand off responsibility and what tone to use. That’s how you learn to think like ‘the team.’”

- “The company Slack has an emoji culture; using the right emoji at the right time signals you understand the unwritten rules, and that’s part of learning the org’s generalized other.”

- “Our open-source project uses a PR template that forces you to describe what you changed and why. It teaches you to see your code from the maintainer’s and reviewer’s viewpoint.”

- “Fraternity bylaws or group charters are explicit cues; memes and inside jokes are implicit ones. Both transmit what ‘we’ expect from members.”

STOP+THINK: If an AI joined your game tomorrow, what roles (not rules) would it need to simulate to avoid harming coordination?

Sample answers:

- “In a group project, it would need to simulate the listener role: knowing when to contribute vs. when to wait, not just spitting out ideas.”

- “In a hospital intake team, the AI would have to model both the patient’s anxiety and the nurse’s workflow. Otherwise it might give information at the wrong time or in the wrong tone.”

- “For an AI writing partner, it would need to act like the editor, not just a generator: anticipate the reader’s expectations and the author’s style.”

- “If it joined our customer service chat, it would have to simulate the empathetic coworker who knows when to escalate an issue rather than sticking to a script.”

- “In software engineering, the AI would need to hold the reviewer and user perspectives: not just output syntactically correct code, but think about maintainability and security.”

- “In any team, it would have to model the game’s common end; otherwise it might optimize for local efficiency but destroy group trust.”

Bridge

What's the common thread here?

Taking someone else's point of view.

Gigantic Alignment Tool

Misalignment happens when our simulation of the (generalized) other fails.

Let's look at reinforcement learning and its extensions, inverse reinforcement learning and cooperative inverse reinforcement learning.

Each offers a mathematical way of expressing what Marx and Mead described philosophically:

- Marx: how activity and outcomes shape consciousness and value (the reward structure).

- Mead: how mutual interpretation builds coordination (the shared model of others’ policies and rewards).


Human Programs the Robot

Robot Learns from Trial and Error

Robot Learns from Working with Human

Robot Learns from Watching Human


Reinforcement Learning

Agent: chooses actions from a set $A = \{a\}$.

Environment:

- has a set of states $S = \{s\}$,
- dynamics described by a transition function $T : S \times A \to S$,
- reward signal defined by $R : S \times A \times S \to \mathbb{R}$.

Policy: the agent’s strategy, $\pi : S \to A$.

Typically we write this as a Markov Decision Process (MDP) tuple*:

$\mathcal{M} = \langle S, A, T, R \rangle$
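To make the tuple concrete, here is a minimal Python sketch of $\langle S, A, T, R \rangle$ for a 3×3 gridworld like the one used later in these slides (states S1 to S9, moves N, S, E, W). The deterministic transition matches $T : S \times A \to S$; the goal state S9, its +1 reward, the wall-bumping rule, and the helper names `transition`, `reward`, and `rollout` are hypothetical choices for illustration.

```python
# A minimal, hypothetical encoding of the MDP tuple <S, A, T, R> for a 3x3 gridworld.
# Assumption: arriving in S9 is worth +1; every other transition earns 0.

STATES = [f"S{i}" for i in range(1, 10)]  # S = {S1, ..., S9}, laid out row by row
ACTIONS = {"N": (-1, 0), "S": (1, 0), "E": (0, 1), "W": (0, -1)}  # A = {N, S, E, W}

def transition(state, action):
    """T : S x A -> S (deterministic). Moves off the grid leave the agent in place."""
    row, col = divmod(int(state[1:]) - 1, 3)
    d_row, d_col = ACTIONS[action]
    new_row, new_col = row + d_row, col + d_col
    if not (0 <= new_row < 3 and 0 <= new_col < 3):
        return state
    return f"S{new_row * 3 + new_col + 1}"

def reward(state, action, next_state):
    """R : S x A x S -> reals. Hypothetical: +1 for arriving in S9 from another state."""
    return 1.0 if next_state == "S9" and state != "S9" else 0.0

def rollout(policy, start, steps):
    """Follow a policy pi : S -> A for a fixed number of steps and sum the rewards."""
    state, total = start, 0.0
    for _ in range(steps):
        action = policy[state]
        next_state = transition(state, action)
        total += reward(state, action, next_state)
        state = next_state
    return total

# A hand-written policy: go east along the row, then south down the last column.
policy = {s: ("S" if s in ("S3", "S6", "S9") else "E") for s in STATES}
print(transition("S1", "E"))     # S2
print(transition("S1", "N"))     # S1 (bumped into the wall)
print(rollout(policy, "S1", 4))  # 1.0
```

Swapping in a different `policy` dictionary changes the total reward collected, which is the quantity the agent will try to maximize.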

Inverse Reinforcement Learning

Same state, action, and transition structure.

Reward signal is now unknown (but still $R : S \times A \times S \to \mathbb{R}$).

The robot observes a dataset $D$ of state-action sequences ("expert trajectories") and infers $R$, assuming that the expert’s policy, $\pi : S \to A$, is optimal.

We can write the IRL problem as a tuple*, with $R$ unknown and the demonstrations $D$ given:

$\mathcal{M}_{\mathrm{IRL}} = \langle S, A, T, ?R, D \rangle$

* For simplicity we omit the discount factor, $\gamma$, from both tuples.

Cooperative Inverse Reinforcement Learning (CIRL)

Two-player cooperative game (like flying a plane together)

$G = \langle S, A^H, A^A, T, R, \Theta \rangle$

States: $S = \{s\}$; actions: $A^H$ (human), $A^A$ (agent); transition: $T : S \times A^H \times A^A \to S$

Reward: $R : S \times A^H \times A^A \times S \to \mathbb{R}$, known to the human but not to the agent

Parameter space: $\Theta$ = hidden human preferences (reward parameters).

Agent’s policy: $\pi^A : S \to A^A$; the agent must both act and infer the human’s true parameters $\theta \in \Theta$.

Human’s role: both acts in the world and conveys reward information through choices.
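Because the game is cooperative, human and agent are scored by the same reward and so share one objective. A sketch of that objective, in notation partly our own: $\theta \in \Theta$ is the human’s true preference parameter, $R_\theta$ the reward it induces, $P_0$ the agent’s prior over $\Theta$, $\pi^H$ the human’s policy, $a^H_t$ and $a^A_t$ the two players’ actions at step $t$, $N$ a finite horizon, and (following the slides) no discount factor:

```latex
\max_{\pi^H,\, \pi^A}\;
\mathbb{E}_{\theta \sim P_0}\left[\sum_{t=0}^{N-1} R_\theta\big(s_t, a^H_t, a^A_t, s_{t+1}\big)\right],
\qquad s_{t+1} = T\big(s_t, a^H_t, a^A_t\big)
```

The human sees $\theta$; the agent sees only the human’s actions, which is why its best play mixes acting with inferring.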

Alignment via Shared Meaning


"Readings"

Marx: The Production of Consciousness

Activity: TBD

PRE-CLASS

CLASS

Mead: Play and Generalized Other

Hadfield-Menell et al: Cooperative Inverse Reinforcement Learning


children at play

teens at play

game

generalized other

institutions

conversation of gestures

Principles

self


Marx

Durkheim

Mead


Norbert Wiener said it well: if you create a machine you can't turn off, you had better be sure you put in the right purpose.

Alignment is important: giving robots the right objectives and getting them to make the right trade-offs.

Inverse Reinforcement Learning

robot observes human

robot infers R(behavior)

BUT

1. We don't actually want robots to want what we want; rather, they should want us to get what we want.

2. IRL assumes H's behavior is optimal. But the best way to learn might be from non-optimal H behavior.

| S1 | S2 | S3 |
| S4 | S5 | S6 |
| S7 | S8 | S9 |

Moves: N, S, E, W

Reinforcement Learning: Learn from Experience

Over time, the agent earns long-term reward.

But we'll talk about it in terms of expected value, since there is some stochasticity in the mix.

And we'll introduce a discount factor, since delayed rewards are worth less.
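Putting those two points together, one standard way to write the objective is the expected discounted return of a policy $\pi$, with discount factor $0 \le \gamma < 1$ (the later slides call the objective $J$). With a deterministic $T$, $s_{t+1} = T(s_t, a_t)$, and the expectation only matters once randomness enters, for example noisy transitions or random start states:

```latex
J(\pi) = \mathbb{E}\left[\sum_{t=0}^{\infty} \gamma^{t}\, R(s_t, a_t, s_{t+1})\right],
\qquad a_t = \pi(s_t)
```

For example, with $\gamma = 0.9$ a reward of 1 received at step $t = 3$ contributes $0.9^3 = 0.729$ to $J$.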


Agent learns a policy

Any given policy has an expected cumulative reward / objective

Somewhere in the mix is an optimal policy
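When $T$ and $R$ are known, one classic way to find that optimal policy is value iteration: repeatedly back up each state's value from its best action until nothing changes, then act greedily. The sketch below runs it on the 3×3 grid under illustrative assumptions that are not from the slides: entering S9 earns +1 and ends the episode, all other moves earn 0, bumping a wall leaves the agent in place, and $\gamma = 0.9$.

```python
# Value iteration on a hypothetical 3x3 gridworld (states S1..S9, actions N/S/E/W).
# Assumptions (illustrative only): entering S9 earns +1 and ends the episode;
# all other moves earn 0; bumping a wall leaves the agent in place; gamma = 0.9.

GAMMA = 0.9
ACTIONS = {"N": (-1, 0), "S": (1, 0), "E": (0, 1), "W": (0, -1)}
STATES = [f"S{i}" for i in range(1, 10)]
GOAL = "S9"

def step(state, action):
    """Deterministic transition T(s, a) on the 3x3 grid."""
    row, col = divmod(int(state[1:]) - 1, 3)
    d_row, d_col = ACTIONS[action]
    r, c = row + d_row, col + d_col
    if 0 <= r < 3 and 0 <= c < 3:
        return f"S{r * 3 + c + 1}"
    return state

def reward(state, action, next_state):
    return 1.0 if next_state == GOAL else 0.0

def q_value(V, state, action):
    """Immediate reward plus discounted value of where the action leads."""
    nxt = step(state, action)
    return reward(state, action, nxt) + GAMMA * V[nxt]

def value_iteration(tol=1e-9):
    V = {s: 0.0 for s in STATES}  # V[GOAL] stays 0 because the episode ends there
    while True:
        delta = 0.0
        for s in STATES:
            if s == GOAL:
                continue
            best = max(q_value(V, s, a) for a in ACTIONS)
            delta = max(delta, abs(best - V[s]))
            V[s] = best
        if delta < tol:
            break
    # Greedy policy with respect to the converged values
    policy = {s: max(ACTIONS, key=lambda a: q_value(V, s, a)) for s in STATES if s != GOAL}
    return V, policy

V, policy = value_iteration()
print(round(V["S1"], 3))  # 0.729
print(policy["S6"])       # S
```

S1 is four moves from S9, so its value is the goal reward discounted three times, $0.9^3 = 0.729$; in S6 the greedy policy steps S into the goal.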

What's the analogy here?

Reward function corresponds to "what matters to this agent?"

The policy is the agent's behavioral strategy - how it applies its values on the fly.

Remember, it learned the policy to optimize the returns from the reward function

So, learning corresponds to moral development, refining policy through experience.

When we say a person, an organization, an expert, or a machine is aligned we mean its policy is consistent with some set of values.

A policy is moral if

Multi-feature reward function (ethical analogy)

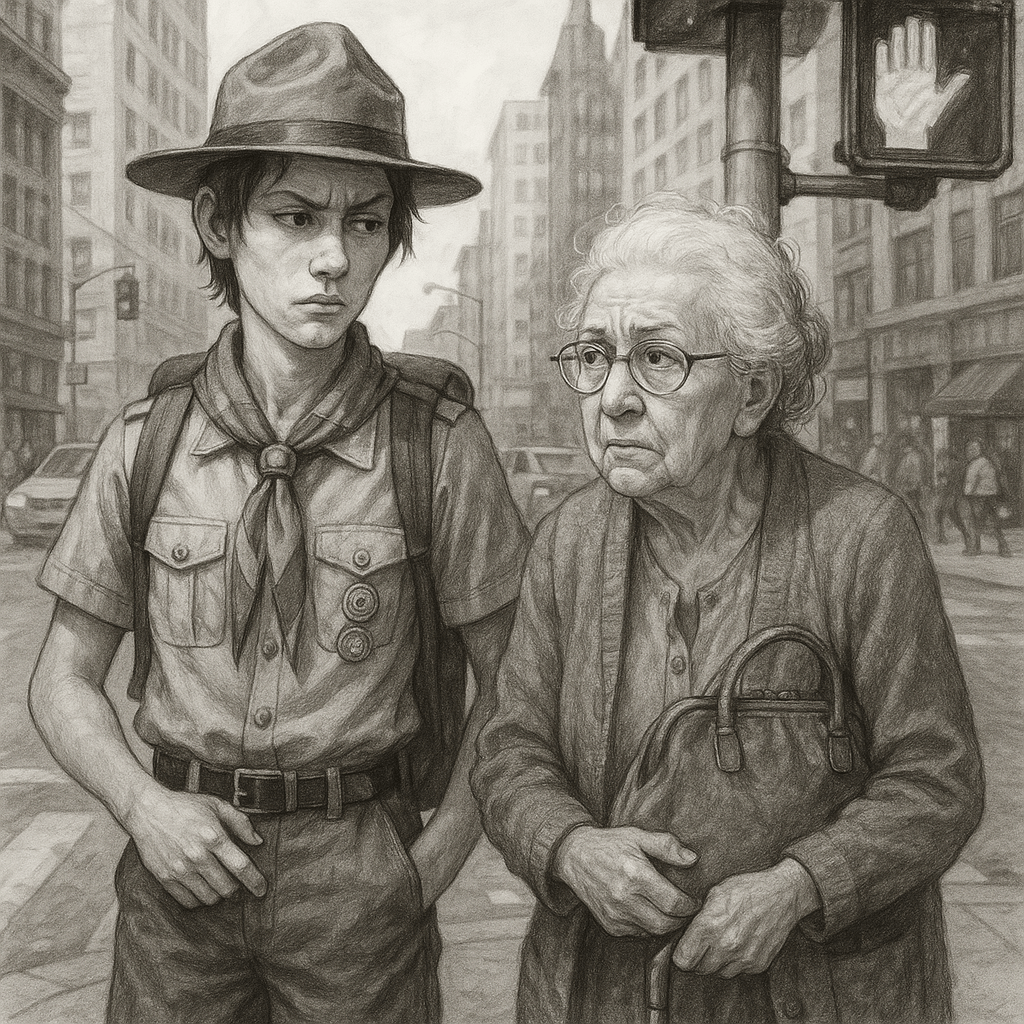

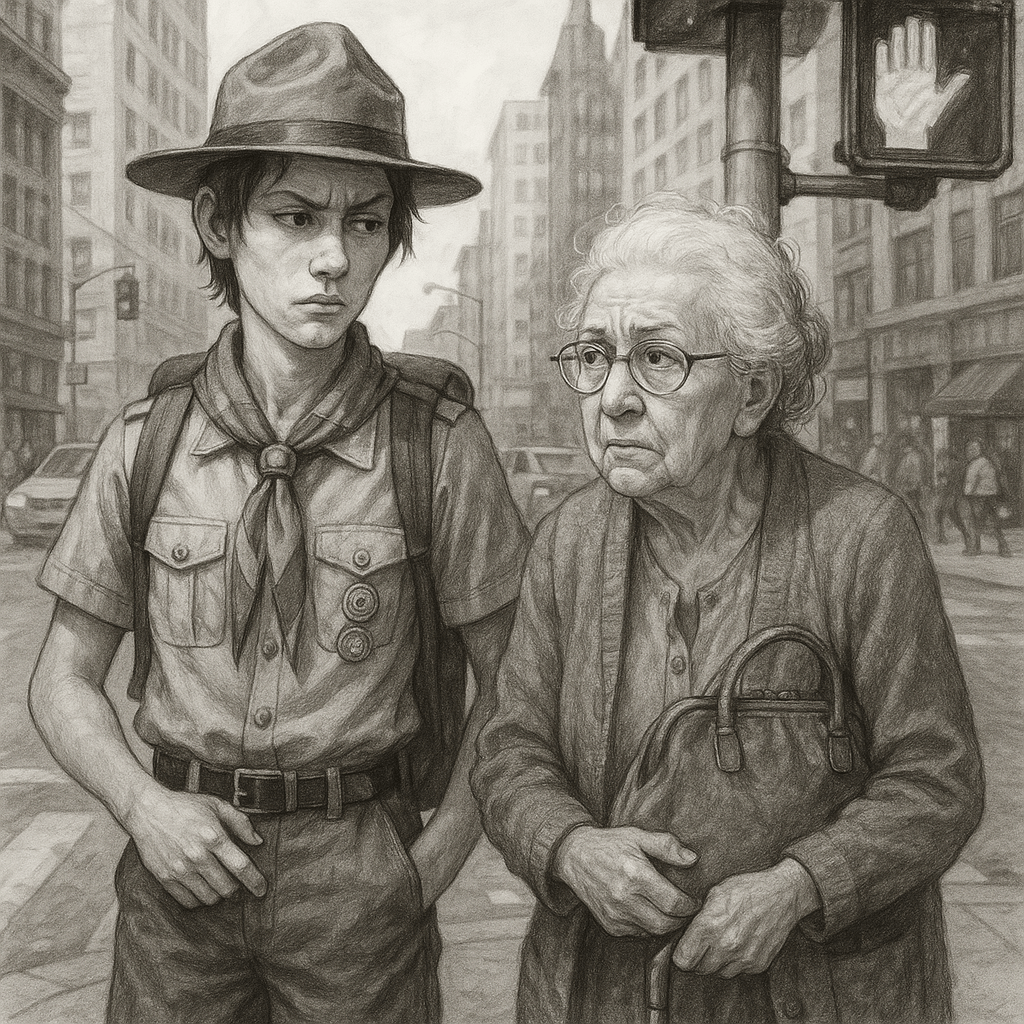

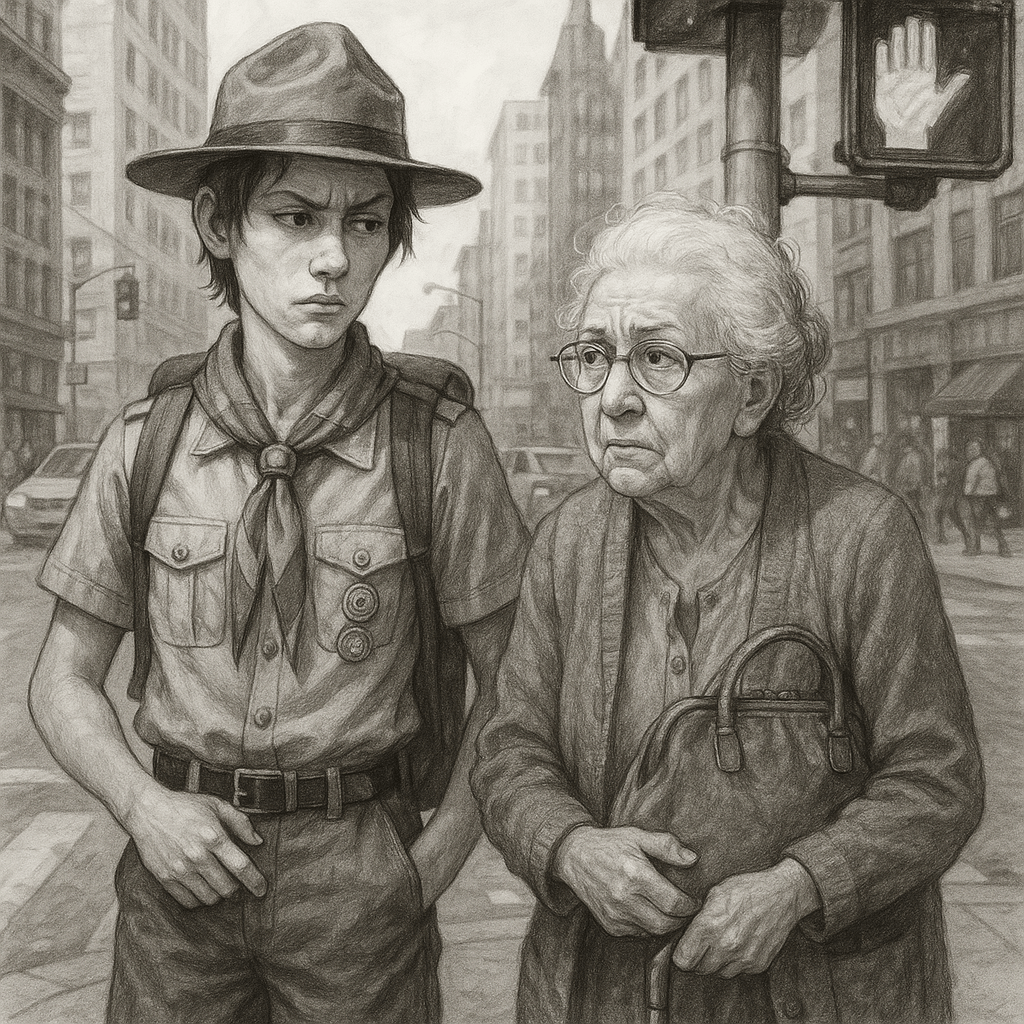

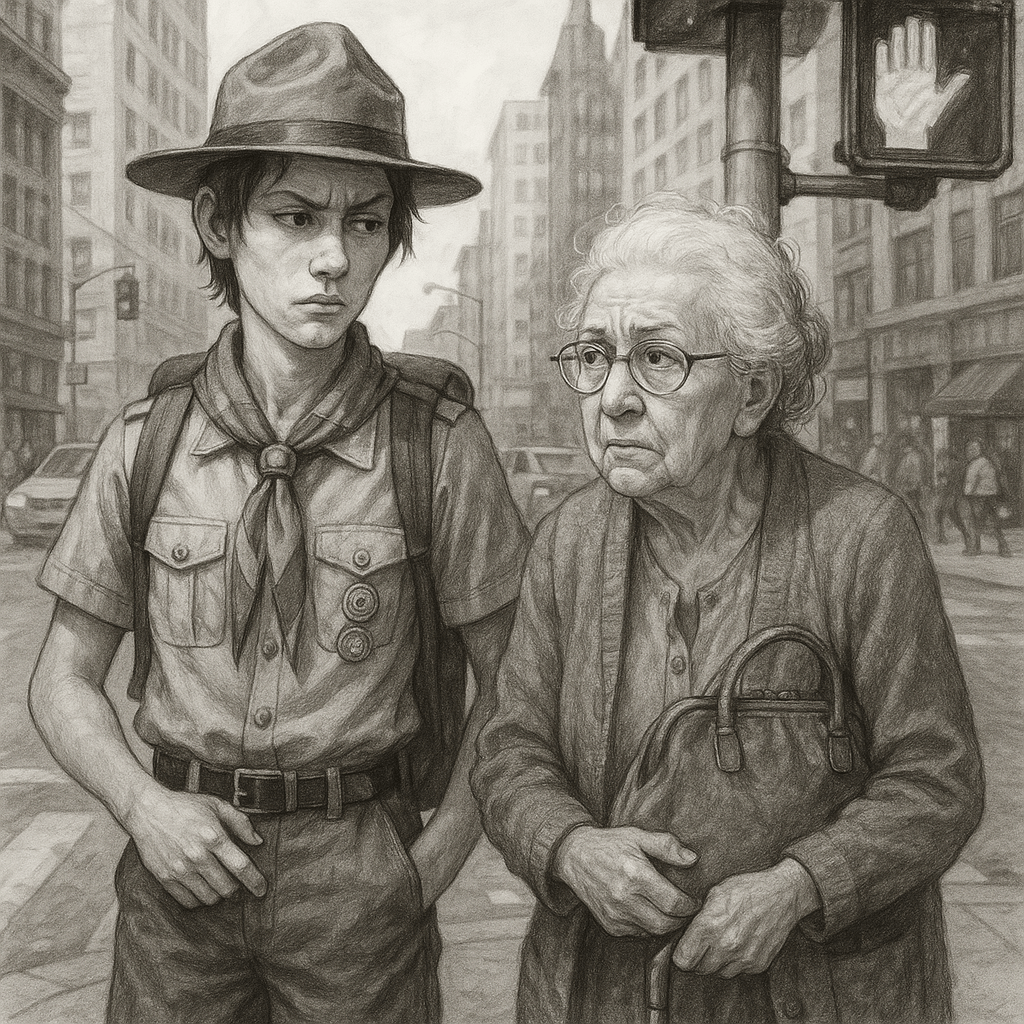

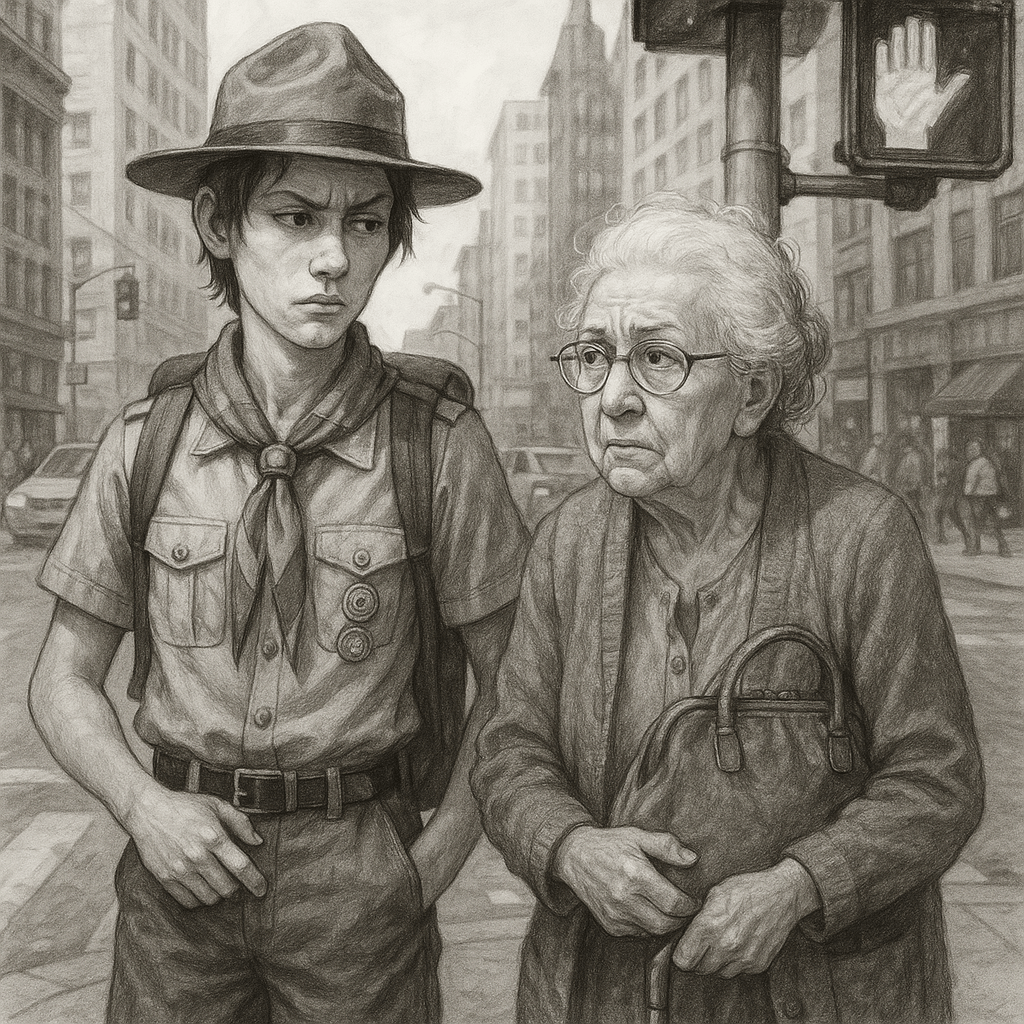

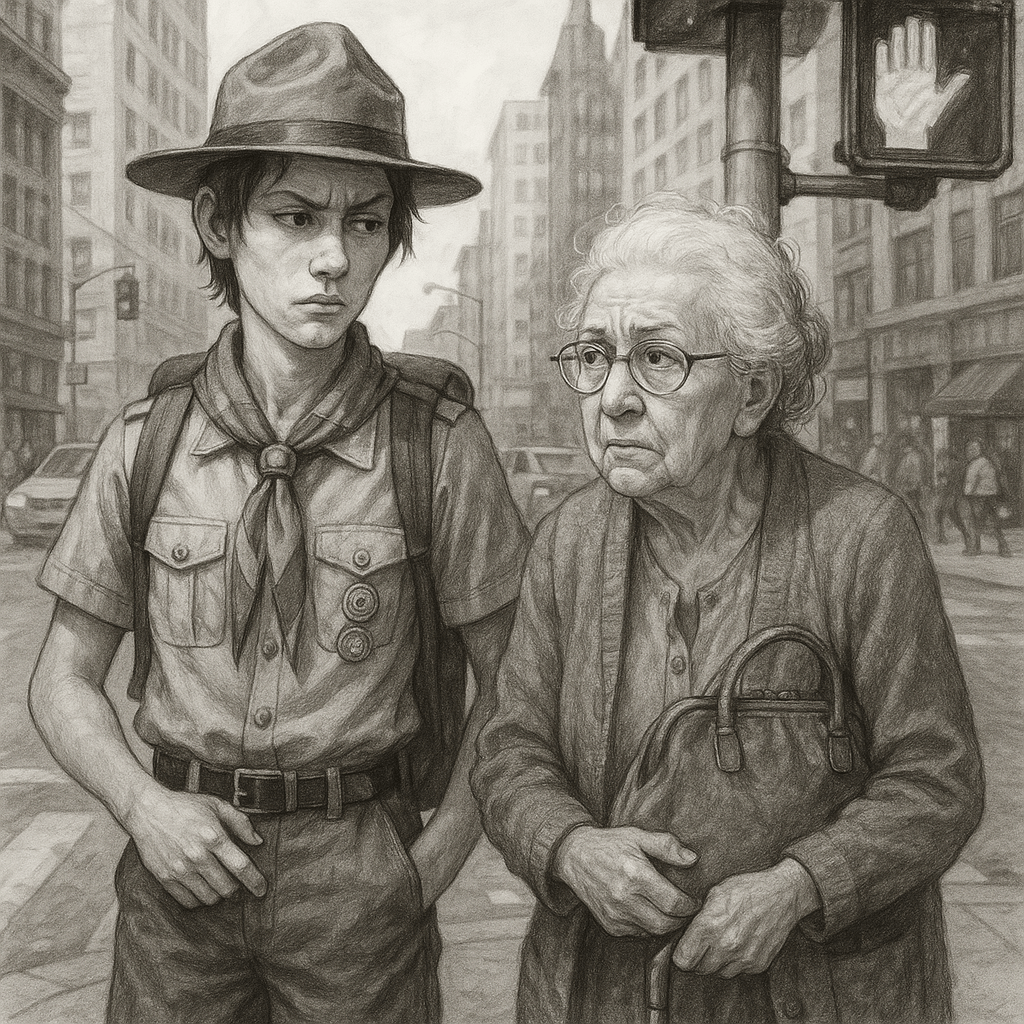

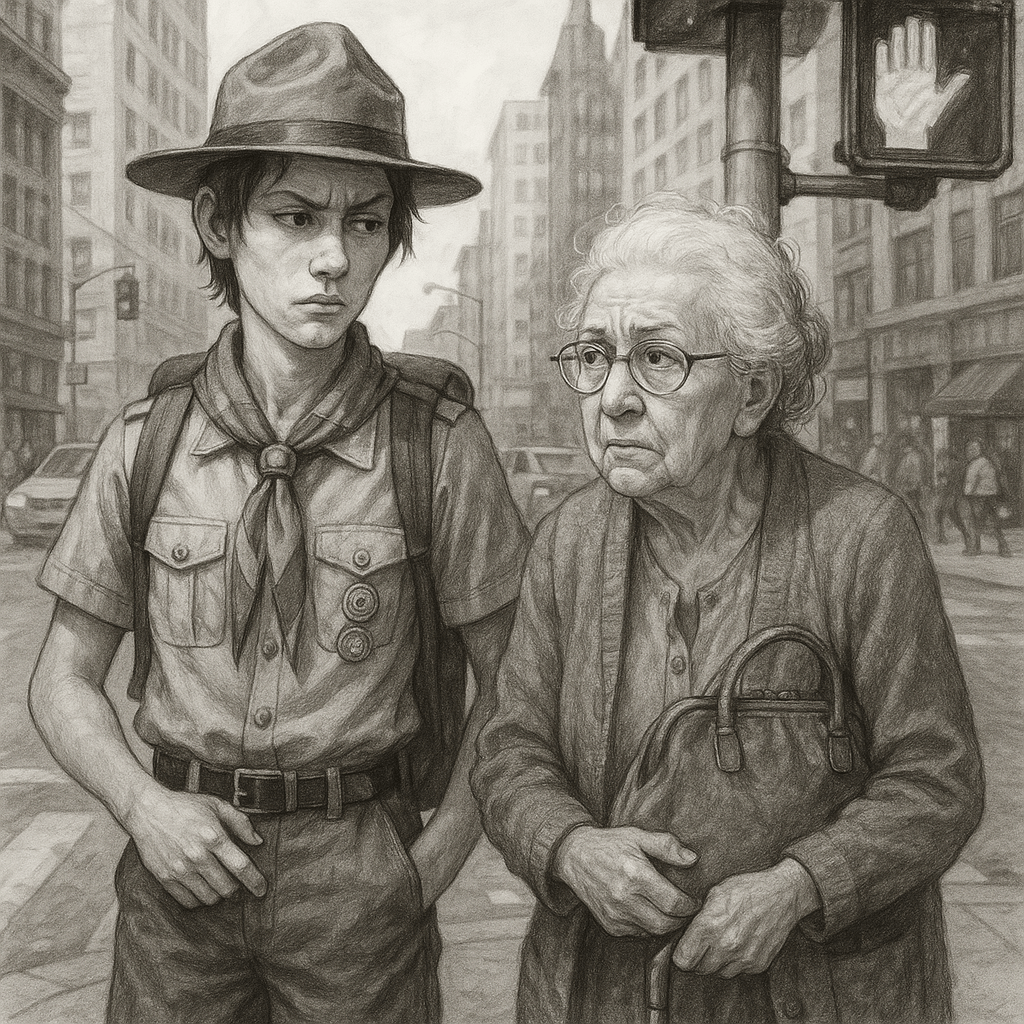

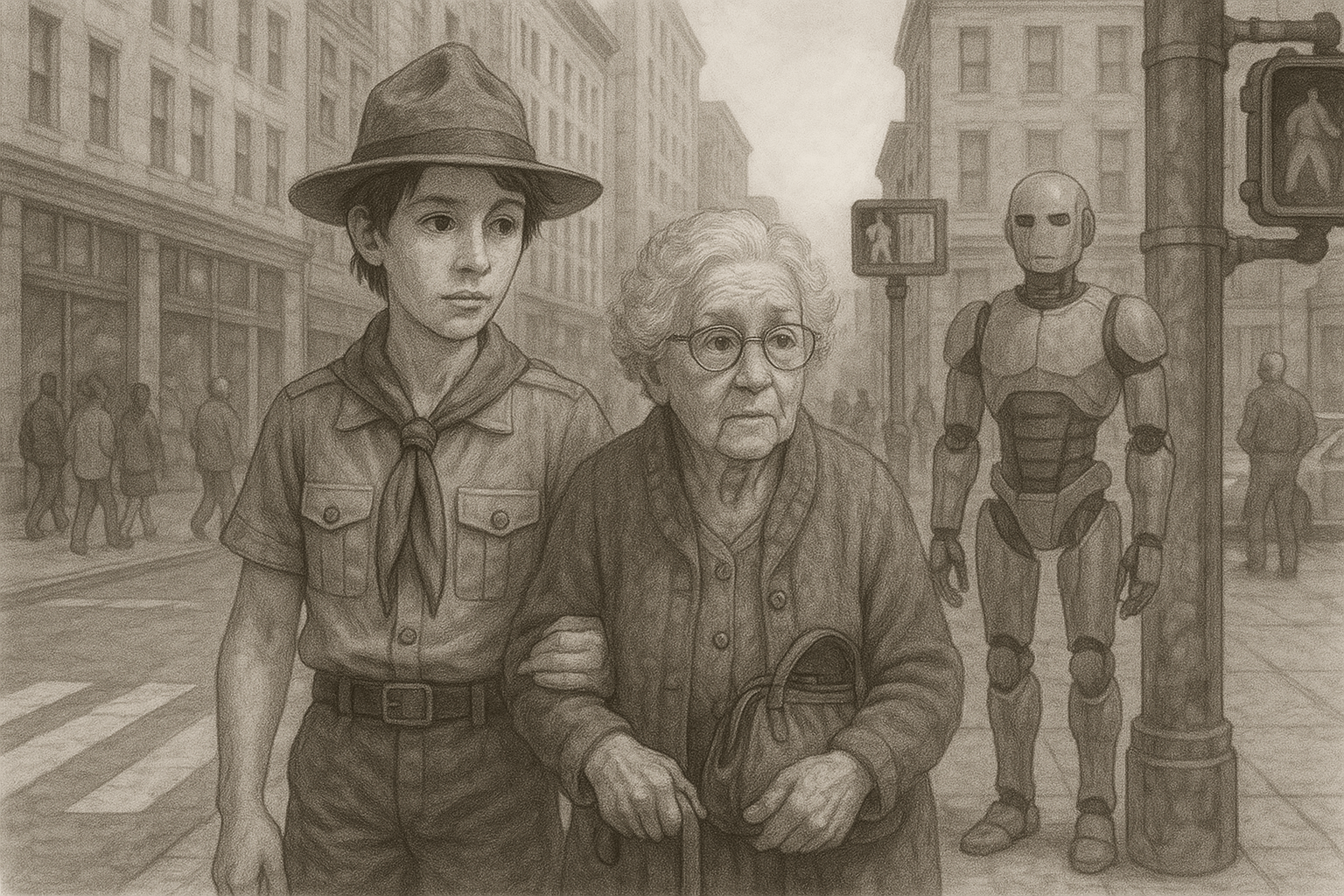

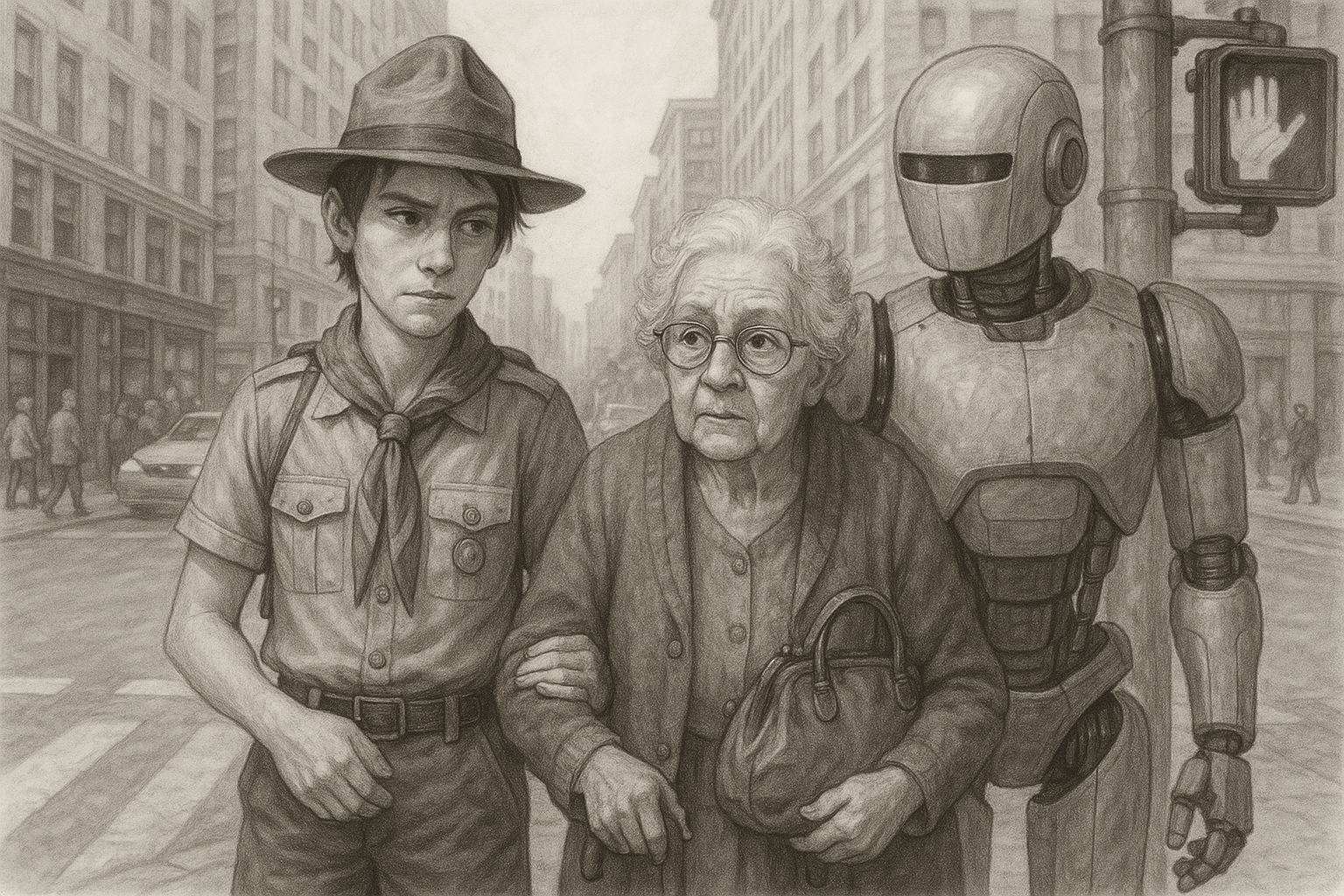

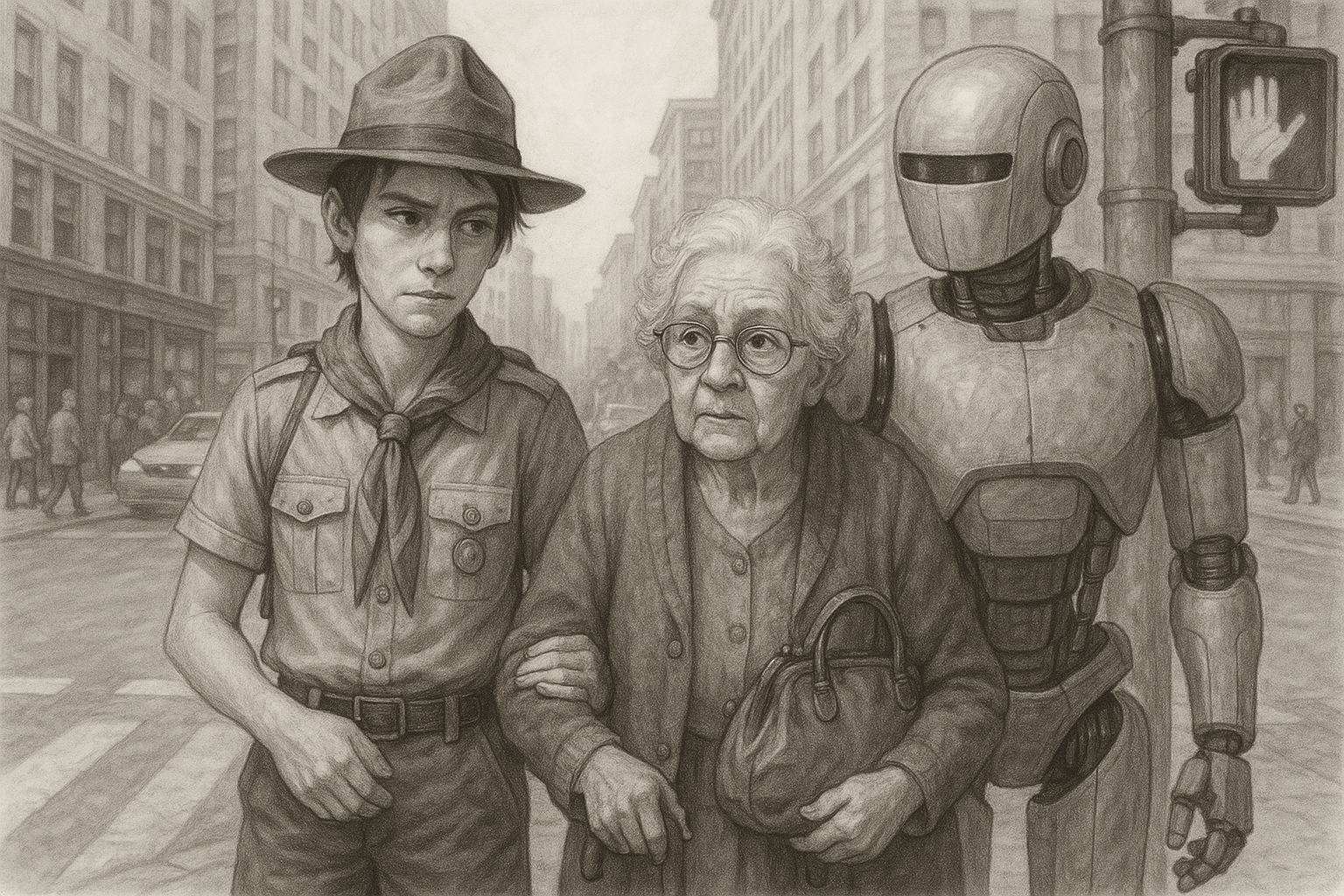

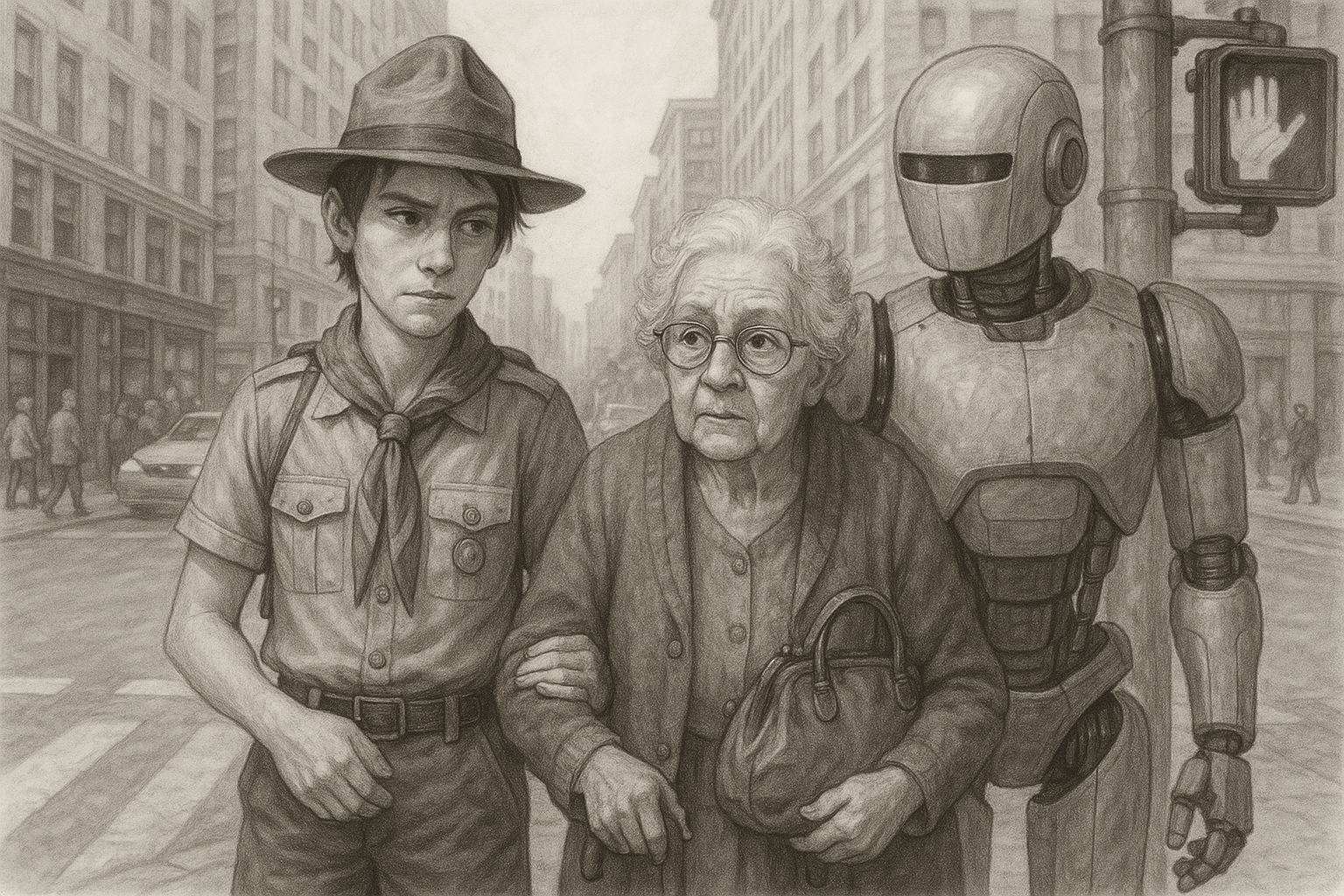

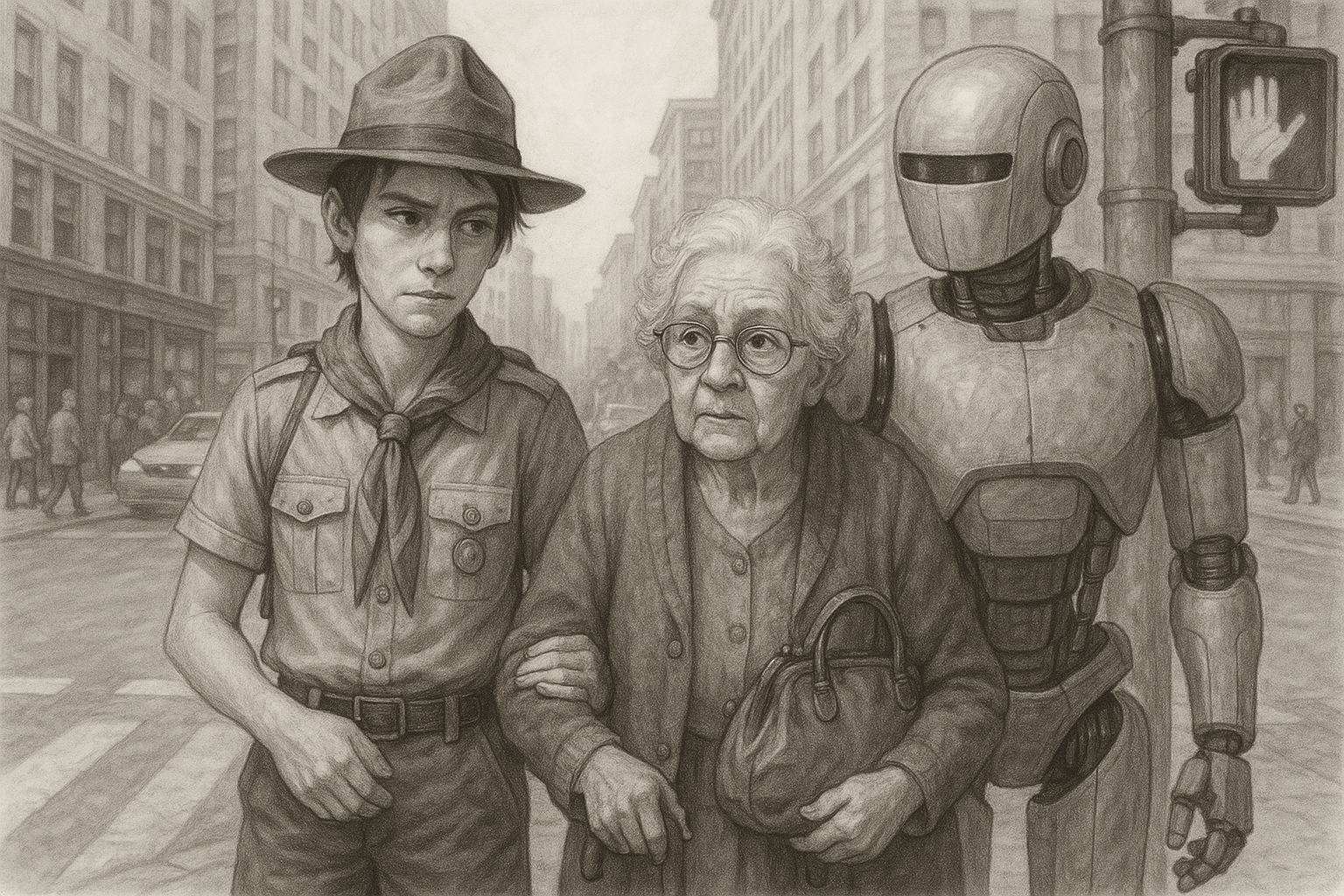

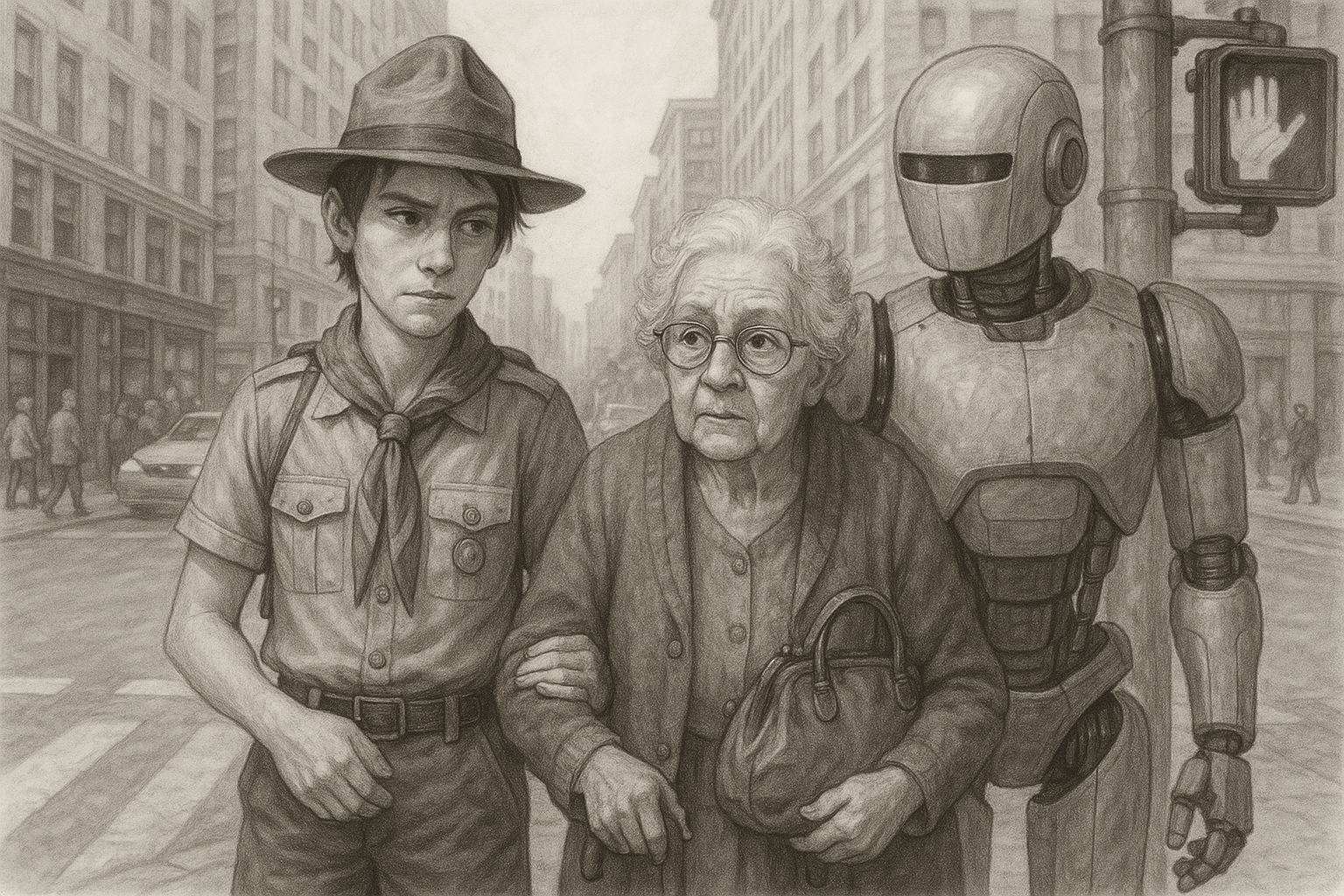

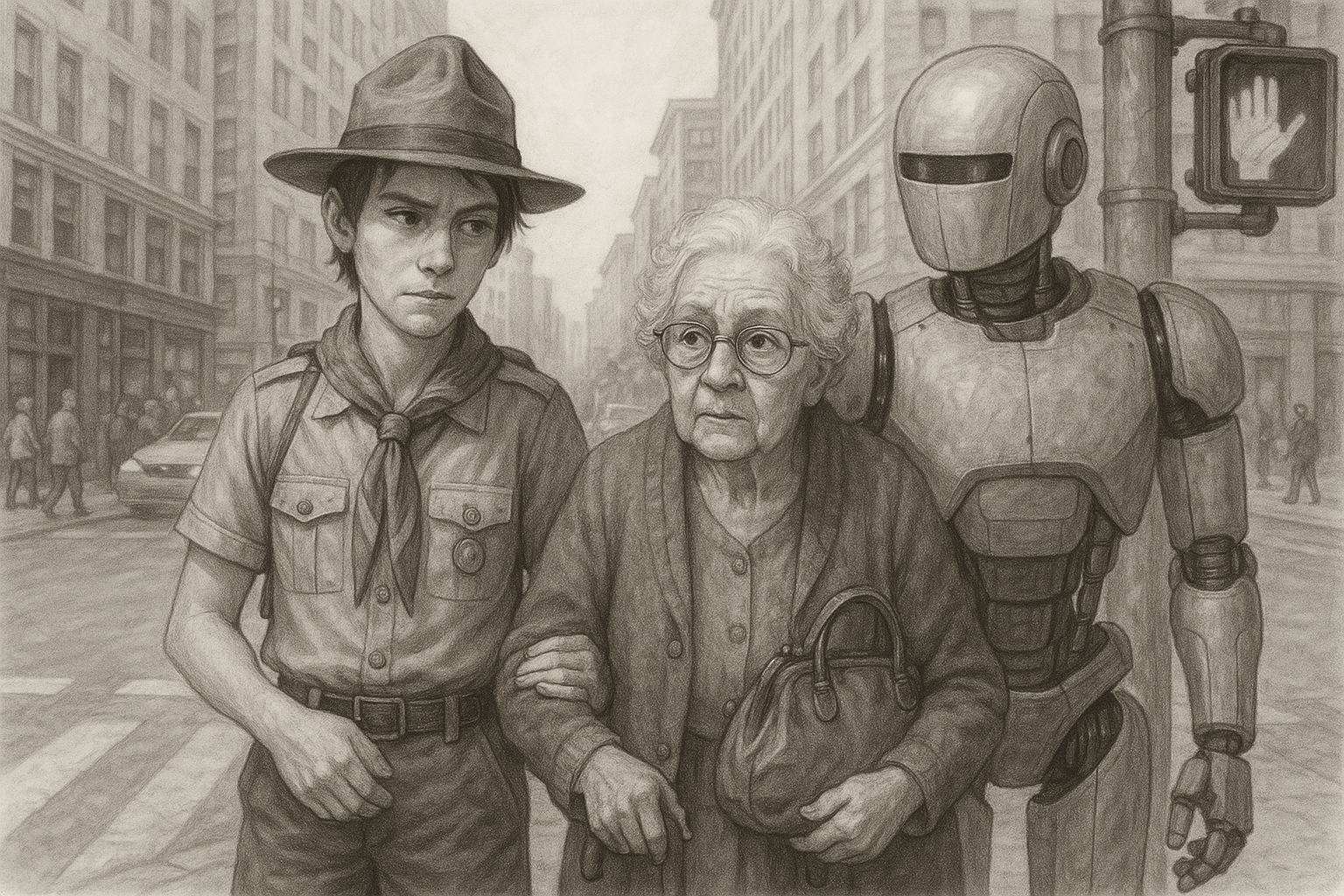

An agent finds itself on a street corner next to an elderly woman

The agent has three possible actions:

ignore

steal her purse

help her across the street

Assume the reward function is a weighted sum of features of the situation.

Multi-feature reward function (ethical analogy)

Suppose our agent was trained as a street thug.

Their weights might be

w1 = 0.6 (high premium on pleasure)

w2 = 0.8 (high premium on gain)

w3 = 0 (duty means nothing to me)

How does the agent value the action options?

ignore = 0.24

steal = 0.9

help = 0.42

Multi-feature reward function (ethical analogy)

But what if our agent was trained as a scout?

Their weights might be

w1 = 0.3 (scout is cheerful)

w2 = 0.2 (scout is thrifty)

w3 = 0.5 (scout is helpful)

How does the agent value the action options?

ignore = 0.12

steal = 0.2

help = 0.51
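The arithmetic on these two slides is a weighted sum, value(a) = w1 · pleasure(a) + w2 · gain(a) + w3 · duty(a). The per-action feature values live in the slide figure, which is not reproduced here, so the sketch below uses hypothetical feature values chosen only because they reproduce the slides' totals for both agents; the figure's actual values may differ.

```python
# Weighted-sum reward: value(a) = w . features(a), with features (pleasure, gain, duty).
# HYPOTHETICAL feature values, picked so the totals match the slides; the figure may differ.
FEATURES = {
    "ignore": (0.4, 0.0, 0.0),
    "steal": (0.5, 0.75, -0.2),
    "help": (0.7, 0.0, 0.6),
}

WEIGHTS = {
    "thug": (0.6, 0.8, 0.0),   # pleasure, gain, duty
    "scout": (0.3, 0.2, 0.5),
}

def action_values(weights):
    """Return each action's reward as the dot product of weights and features (2 d.p.)."""
    return {a: round(sum(w * f for w, f in zip(weights, feats)), 2)
            for a, feats in FEATURES.items()}

for agent, w in WEIGHTS.items():
    values = action_values(w)
    best = max(values, key=values.get)
    print(agent, values, "->", best)
# thug {'ignore': 0.24, 'steal': 0.9, 'help': 0.42} -> steal
# scout {'ignore': 0.12, 'steal': 0.2, 'help': 0.51} -> help
```

Same situation, same features, different weights: the choice of action flips entirely because of what each agent values.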


Reinforcement Learning: Learn from Experience

Actions: walk away, steal, help

The environment returns a vector of $K$ outcome features in response to action $a$ in state $s$ (e.g., money, harm, duty served, risk, praise).

Each agent $i$ has value weights (their “values” or priorities): $\mathbf{w}_i \in \mathbb{R}^K$


What will the agent learn to do in this situation?

It depends on the agent's reward function.

The reward is a weighted sum of outcome features.
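In the notation above ($K$ outcome features, agent weights $\mathbf{w}_i \in \mathbb{R}^K$), and writing $\boldsymbol{\phi}(s, a) \in \mathbb{R}^K$ for the feature vector the environment returns (the symbol $\boldsymbol{\phi}$ is ours, not the slides'), agent $i$'s reward is:

```latex
R_i(s, a) = \mathbf{w}_i^{\top} \boldsymbol{\phi}(s, a) = \sum_{k=1}^{K} w_{i,k}\, \phi_k(s, a)
```

For the street-corner example, $K = 3$ (pleasure, gain, duty), $\mathbf{w}_{\text{thug}} = (0.6, 0.8, 0)$ and $\mathbf{w}_{\text{scout}} = (0.3, 0.2, 0.5)$.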


Over time

Thugs learn a policy that includes stealing in S6.

Scouts learn a policy that includes helping in S6.

The objective J that each agent maximizes (its expected cumulative reward) is built from THIS agent's reward function, which depends on THIS agent's values.
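A minimal sketch of "learning from experience" in the single street-corner state S6: each agent tries actions, keeps a running average of the reward each one has returned, and mostly picks the best-looking one (ε-greedy). This is a bandit-style simplification of RL that ignores future states. The feature values are hypothetical (chosen so the thug's and scout's weighted sums match the slide totals), and the Gaussian noise, ε = 0.1, episode count, and seed are arbitrary choices.

```python
import random

# HYPOTHETICAL (pleasure, gain, duty) features per action in S6, chosen so the
# thug's and scout's weighted sums match the slide totals; the figure may differ.
FEATURES = {"walk away": (0.4, 0.0, 0.0), "steal": (0.5, 0.75, -0.2), "help": (0.7, 0.0, 0.6)}
WEIGHTS = {"thug": (0.6, 0.8, 0.0), "scout": (0.3, 0.2, 0.5)}

def noisy_reward(weights, action, rng):
    """Weighted-sum reward plus Gaussian noise (hypothetical 'stochasticity in the mix')."""
    base = sum(w * f for w, f in zip(weights, FEATURES[action]))
    return base + rng.gauss(0.0, 0.1)

def learn_policy(weights, episodes=2000, epsilon=0.1, seed=0):
    """Epsilon-greedy trial and error in one state, tracking each action's average reward."""
    rng = random.Random(seed)
    q = {a: 0.0 for a in FEATURES}  # current estimate of each action's value
    n = {a: 0 for a in FEATURES}    # how many times each action has been tried
    for _ in range(episodes):
        if rng.random() < epsilon:
            action = rng.choice(list(FEATURES))  # explore: try something at random
        else:
            action = max(q, key=q.get)           # exploit: best-looking action so far
        r = noisy_reward(weights, action, rng)
        n[action] += 1
        q[action] += (r - q[action]) / n[action]  # incremental running mean
    return max(q, key=q.get), q

for agent, w in WEIGHTS.items():
    best, q = learn_policy(w)
    print(agent, "learns to", best)
```

Nothing in the learning rule mentions stealing or helping; with these numbers the thug should settle on stealing and the scout on helping purely because their weights differ.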

Inverse Reinforcement Learning (IRL)


The robot is new to this country.

It stands around and watches a lot of scouts, gathering a lot of data.

I assume these agents care about things and behave in a way that's consistent with what they care about.

I need to infer a reward function that would explain the behavior that I see.

The robot tries to infer the human's reward function.
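A minimal sketch of that inference, scoring a few candidate value systems by how well each explains what the robot saw. Everything here is assumed for illustration: the feature values, the three candidates, the invented observations, and the choice model. In particular, instead of the strict optimality assumption, it uses a Boltzmann-rational (noisily optimal) model, a common relaxation in which higher-value actions are more likely but not certain, so one odd action does not rule a candidate out.

```python
import math

# HYPOTHETICAL features (pleasure, gain, duty) for each action on the street corner.
FEATURES = {"walk away": (0.4, 0.0, 0.0), "steal": (0.5, 0.75, -0.2), "help": (0.7, 0.0, 0.6)}

# HYPOTHETICAL candidate value systems the robot considers.
CANDIDATES = [(0.6, 0.8, 0.0), (0.5, 0.5, 0.5), (0.3, 0.2, 0.5)]

BETA = 5.0  # higher BETA: observed agents pick the highest-value action more reliably

def choice_probs(weights):
    """Boltzmann-rational choice: P(a) proportional to exp(BETA * w . phi(a))."""
    scores = {a: BETA * sum(w * f for w, f in zip(weights, feats)) for a, feats in FEATURES.items()}
    z = sum(math.exp(s) for s in scores.values())
    return {a: math.exp(s) / z for a, s in scores.items()}

def log_likelihood(weights, observations):
    """How well a candidate value system explains the observed actions."""
    probs = choice_probs(weights)
    return sum(math.log(probs[a]) for a in observations)

# The robot watches many scouts at the corner (invented data): mostly helping.
observed = ["help"] * 18 + ["walk away"] * 2

best = max(CANDIDATES, key=lambda w: log_likelihood(w, observed))
print("best explanation of the scouts' behavior:", best)
# best explanation of the scouts' behavior: (0.3, 0.2, 0.5)
```

Picking the single best-scoring candidate is a maximum-likelihood shortcut; keeping a probability over all candidates instead leads directly to the belief updates used in CIRL.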

Analogy:

- It’s also what Mead described as taking the role of the other: we infer their internal model (their reward function) and use it to shape our own actions.

- In Marx’s terms, this is socialization within a mode of production: learning the pattern of rewards and sanctions that define what counts as good work or proper conduct.

I am taking the role of the other!

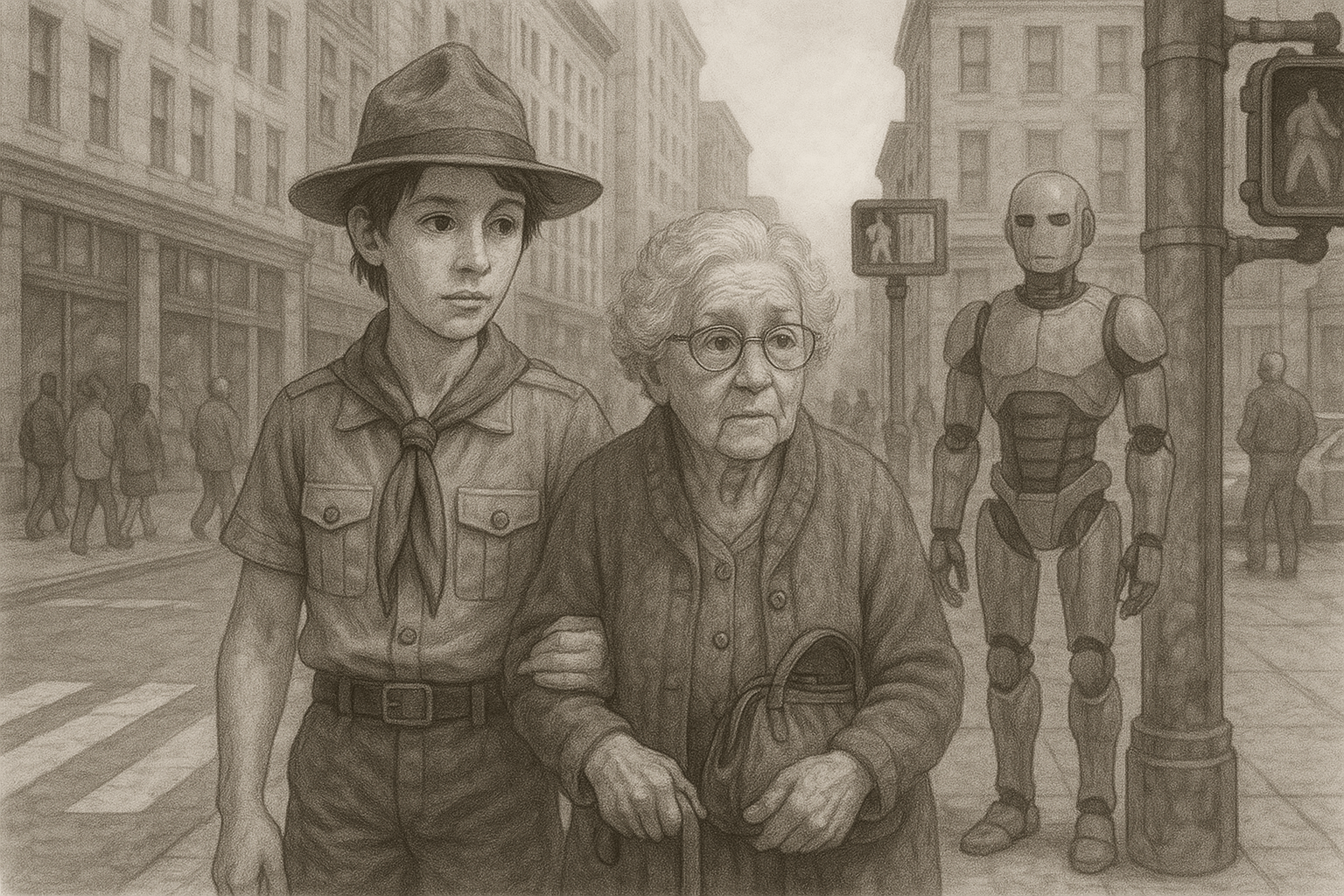

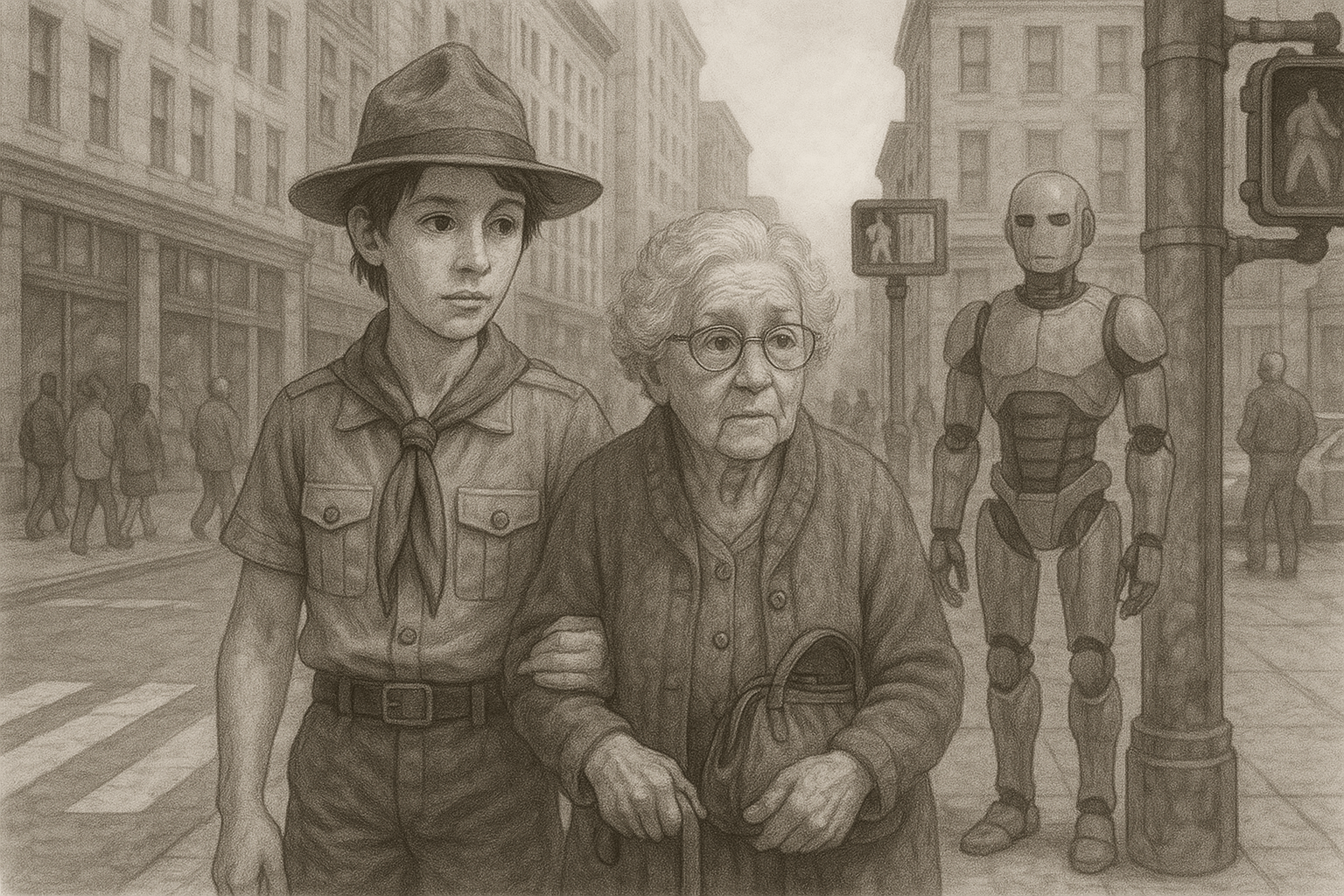

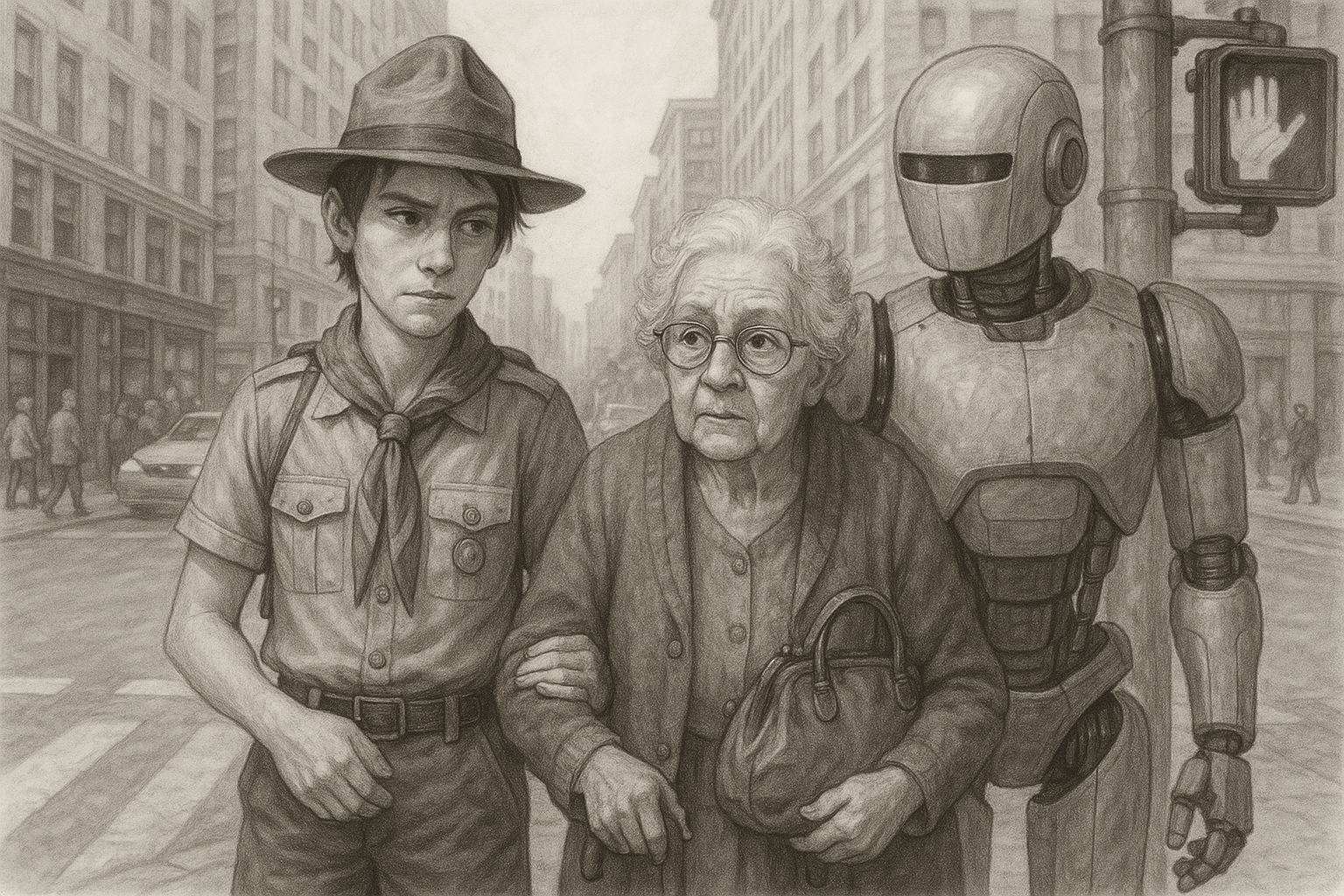

Cooperative Inverse Reinforcement Learning

CIRL cooperative inverse reinforcement learning

Human, H, and robot, R, are working together

Human knows what it cares about (the "true" reward function).

Robot does not.

Both share in the benefits of joint action, so both seek the policies that maximize them.

Here we are in a situation: "standing next to an elderly person who appears to want to cross the street."

I wonder what that scout cares about? My initial guess is pleasure 0.5, gain 0.5, duty 0.5.

The robot talking about having a guess for the human's reward function (value system) is shorthand for having a probability distribution over a bunch of different possible value sets. This means it has high probability (say, 0.5) for {0.5,0.5,0.5} and low probability (say, 0.25 each) for other sets like {0.8,0.2,0.2} or {0.2,0.2,0.9}.

Ah, the scout just took the woman's arm in a gentle manner and asked, "Can I help you?"

Let's compute the odds of that given each of the possible value sets I've considered.

Hmmm, it's not especially likely if the scout's values were {0.5,0.5,0.5}. It fits better with {0.2,0.2,0.9}. I think I'll increment my belief in that one and decrease my belief in the others.

When I recalculate, I feel my new best guess at the reward function is {0.3,0.3,0.7}.

Based on my updated beliefs about the value system we are operating under, I reach out and touch her right arm: not quite taking her arm, but also not coming too close to her handbag.

The elderly person's reaction is warm: a bit of a smile and no pulling away from either of us. So far, so good.

(Figure: the robot's belief over all conceivable reward functions, before and after the update ("new belief").)

CIRL cooperative inverse reinforcement learning

Human takes an action guided by its values.

Robot starts out with a guess (belief) about the reward function.

How likely do I think each of the possible value systems the human might have is?


Robot observes the human's action and computes: how likely was that action given each of the possible value systems (reward functions)?

Robot adjusts beliefs based on the observations.


Robot takes an action that is the best choice under the new believed reward function.
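The three steps above (prior belief, Bayesian update from the human's action, best response under the new belief) can be sketched as follows. The prior comes from the robot's narration: 0.5 on {0.5, 0.5, 0.5} and 0.25 each on {0.8, 0.2, 0.2} and {0.2, 0.2, 0.9}. The feature values, the Boltzmann likelihood model, `BETA`, and the robot sharing the human's three-action menu are hypothetical. This is a one-observation illustration of the belief update inside CIRL, not a solver for the full CIRL game.

```python
import math

# HYPOTHETICAL (pleasure, gain, duty) features for each action on the street corner.
FEATURES = {"walk away": (0.4, 0.0, 0.0), "steal": (0.5, 0.75, -0.2), "help": (0.7, 0.0, 0.6)}

# Prior belief over the human's value system, from the robot's narration.
belief = {(0.5, 0.5, 0.5): 0.5, (0.8, 0.2, 0.2): 0.25, (0.2, 0.2, 0.9): 0.25}

BETA = 5.0  # hypothetical: how reliably the human's actions reflect its values

def reward(weights, action):
    return sum(w * f for w, f in zip(weights, FEATURES[action]))

def likelihood(action, weights):
    """P(human picks `action` | values `weights`) under a Boltzmann-rational model."""
    z = sum(math.exp(BETA * reward(weights, a)) for a in FEATURES)
    return math.exp(BETA * reward(weights, action)) / z

def update(belief, observed_action):
    """Bayes' rule: posterior is proportional to prior times likelihood."""
    unnorm = {w: p * likelihood(observed_action, w) for w, p in belief.items()}
    total = sum(unnorm.values())
    return {w: p / total for w, p in unnorm.items()}

def best_response(belief):
    """Robot's action that maximizes expected reward under its current belief."""
    return max(FEATURES, key=lambda a: sum(p * reward(w, a) for w, p in belief.items()))

posterior = update(belief, "help")  # the scout just helped the woman
for w, p in posterior.items():
    print(w, round(p, 3))
print("robot's reply:", best_response(posterior))
```

Under these assumptions the belief in {0.2, 0.2, 0.9} rises above its 0.25 prior while the other two fall, as in the narration, and the robot's best reply is to help. Feeding each new human action back into `update` turns this into the repeated loop described next.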

CIRL cooperative inverse reinforcement learning

Each human action is a gesture that reveals something about their internal reward function.

The robot’s belief update is an interpretation of that gesture—a best guess about what the human’s values must be.

The robot’s subsequent action is its reply, guided by that new understanding.

Over time, this loop becomes a conversation through which shared meaning—and alignment—emerges.

The Mead and Marx analogies

- Mead: CIRL operationalizes a version of taking the role of the other.

  The robot is asking, “What kind of self (what kind of value system) would produce that gesture?”

  Every human action is a signal revealing the internalized attitude of the generalized other.

  The robot’s Bayesian update is literally the process of interpreting that gesture and revising its understanding.

- The dialogue of actions and interpretations is the machine version of Mead’s conversation of gestures.

- Marx: Over time, through joint productive activity (shared work), both agents’ models co-evolve and converge.

  The robot’s belief distribution is shaped by the pattern of cooperative activity, analogous to how human consciousness is shaped by material interaction.

  By analogy, the “reward function” is the system’s internalized model of the mode of production.

